Get New Site Pages Indexed Quickly: Maximizing Visibility


Accelerate Indexing and Elevate Your New Site’s Presence on Google

In an era where millions of websites vie for attention in Google search results, understanding and leveraging the nuances of search engine indexing becomes pivotal for any site owner or developer.

But what does it truly take for a new website to not only capture the discerning eye of web crawlers like Googlebot but to secure its place in the ever-expanding web index?

With a focus on tried-and-true SEO strategies and the advanced capabilities of LinkGraph’s SEO services and Search Atlas tool, site owners can enhance their index status, improve indexing speed, and rise above competitors in SERP rankings.

Mastering these elements is not just about attracting visitors; it’s about making a lasting impact on the digital stage.

Keep reading to unlock the secrets of swift indexing and optimal search engine presence.

Key Takeaways

- Effective Crawling and Indexing Are Crucial for Website Visibility in Google Search Results

- Creating High-Quality, Original Content Is Essential for Attracting Search Engines and Satisfying User Queries

- LinkGraph’s Search Atlas SEO Tool Helps Site Owners Optimize Their Content for Better Indexing and Visibility

- Proper Use of Google Search Console’s URL Inspection Tool Can Expedite New Content’s Inclusion in Search Results

- Continuous On-Page and Technical SEO Enhancements Are Key to Maintaining and Improving a Site’s Search Engine Rankings

Understanding the Basics of Google’s Indexing

A close look at the digital landscape shows that a website’s visibility hinges on two fundamental processes: crawling and indexing.

These mechanisms not only anchor a site within the search engine’s gaze but also play pivotal roles in positioning web pages for maximum exposure among eager searchers.

Quality content stands out as a beacon to Google’s crawlers, signaling a territory ripe for exploration and subsequent inclusion in the index.

For site owners, understanding the relationship between a crawler’s journey through web content, the index status it assigns to a site, and the resulting influence on SERP ranking marks the beginning of a strategic push for online prominence.

Defining Crawling, Indexing, and How They Affect Your Site

In the sphere of digital real estate, the term ‘crawling’ refers to the method web crawlers, such as Googlebot, use to navigate through the myriad of pages on the internet. These digital explorers methodically visit web pages, following each link they encounter to uncover new content and updates.

Once pages are discovered, indexing begins: the search engine adds the freshly crawled content to its vast repository, known as the search engine index. This step makes the content searchable for users, directly affecting a site’s presence in the sea of search results.

| Process | Purpose | Impact on Site |
|---|---|---|
| Crawling | To discover and revisit web pages | Identification and retrieval of content |
| Indexing | To add content to the search engine index | Boosts search visibility and accessibility |
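To make the two processes concrete, here is a toy sketch in Python, not a description of how Googlebot actually works: a breadth-first crawler that follows links from a start page, paired with an inverted index that records which pages each word appears on. The `fetch` callable, the in-memory `SITE` dictionary, and the example.com URLs are hypothetical stand-ins for real HTTP requests; a production crawler would also respect robots.txt, stay on allowed hosts, and handle redirects and errors.

```python
import re
from collections import defaultdict, deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageParser(HTMLParser):
    """Collects link targets and visible text from one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []
        self.text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        self.text.append(data)


def crawl_and_index(start_url, fetch):
    """Breadth-first crawl from start_url; returns an inverted index word -> URLs.

    fetch(url) is a hypothetical callable returning the page's HTML, or None.
    """
    index = defaultdict(set)
    seen = {start_url}
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # unreachable page: nothing to index or follow
            continue
        parser = PageParser()
        parser.feed(html)
        # Indexing: record every page on which each word appears.
        for word in re.findall(r"[a-z0-9]+", " ".join(parser.text).lower()):
            index[word].add(url)
        # Crawling: queue each newly discovered link for a later visit.
        for href in parser.links:
            link = urljoin(url, href)
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return index


# Hypothetical two-page site served from memory instead of the network.
SITE = {
    "https://example.com/": '<h1>Welcome</h1><a href="/shoes">Shoes</a>',
    "https://example.com/shoes": '<p>Running shoes</p><a href="/">Home</a>',
}

if __name__ == "__main__":
    index = crawl_and_index("https://example.com/", SITE.get)
    print(sorted(index["shoes"]))    # both pages mention "shoes"
    print(sorted(index["running"]))  # only the /shoes page
```

The `/shoes` page is only found because the homepage links to it, which is the same reason internal links matter for real crawlers.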

The Impact of Quality Content on Google’s Crawling Behavior

The interplay between high-quality content and the efficiency of web crawlers plays out across the entire web. Relevant, well-crafted content draws the attention of Google’s algorithms and improves a website’s likelihood of being indexed.

A website that demonstrates expertise, authoritativeness, and trustworthiness (commonly abbreviated as E-A-T, which Google’s Search Quality Rater Guidelines have since expanded to E-E-A-T by adding experience) is better positioned to earn Google’s attention. Substantive content not only gives crawlers something worth indexing but can also encourage more frequent visits from these digital surveyors:

- Expertise: Communicates to search engines the author’s depth of knowledge on the subject.

- Authoritativeness: Establishes the site or author as a leading voice within their niche.

- Trustworthiness: Ensures the reliability and credibility of the content and the site as a whole.

Why Indexing Is Crucial for Visibility in Google Search Results

The crux of a website’s visibility in Google search results lies in its index status, a benchmark of digital relevance. When a site is indexed, its pages become eligible to appear in search results, creating conduits through which potential visitors can discover and engage with the content.

Without indexing, a site remains virtually invisible to the searcher’s query, unable to contribute value or compete for ranking in the search engine results pages. Indexing, therefore, is not just a process; it is the gateway for a website’s content to reach its intended audience and fulfill its online potential.

How Ranking Factors Into the Equation of Online Visibility

Ranking within Google’s search results is a dynamic competition, with each web page vying for the searcher’s attention amidst an ocean of information. The prominence a site achieves in these rankings is governed by a multitude of factors, including the strategic employment of keywords, the quality of content, and a robust backlink profile. These elements collectively determine the ease with which visitors locate a site, directly impacting the site’s online visibility and potential for audience engagement.

A site that successfully traverses the intricacies of search engine optimization (SEO) signals its relevance to Google’s sophisticated algorithms, subsequently securing a coveted position in the search engine results pages (SERPs). This ascent in rankings is critical, as it not only enhances the probability of garnering significant visitor traffic but also lays down a marker of credibility in a realm where the prominence of a landing page can make or break a site’s ability to capture and retain user interest.

Crafting Unique Content That Google Loves to Index

In the digital era where content saturation often blurs the lines of originality, the endeavor to craft content that captivates Google’s indexing algorithms becomes an ambitious pursuit for site owners.

Fresh, engaging content not only stands as the bedrock for a solid SEO foundation but reigns as a critical determinant in a site’s command of Google’s attention.

As the digital marketplace evolves, it is incumbent upon content creators to pioneer novel concepts and utilize cutting-edge strategies to distinguish their offerings from the vast swathes of web pages.

Embracing analytics as a compass, creators can navigate the labyrinth of content creation with insight, steering their efforts towards distinctive content that resonates with both search engines and their intended audience.

The Importance of Originality in Content Creation

Amidst the clamor of an ever-expanding digital universe, the imperative for originality in content creation cannot be overstated. A fresh perspective imbues a site with the unique voice necessary to catch the discerning eye of Google’s algorithms as well as the imagination of its prospective audience.

Where imitative content fades into the background, original material soars, bolstering the prospects of Google’s indexing and amplifying a site’s visibility. This authentic approach to content is a cornerstone of innovation, carving out a distinctive niche in the bustling online marketplace.

Strategies to Ensure Your Content Stands Out

To ensure content not only garners attention but also retains it, site owners must prioritize the creation of compelling and informative material. Integrating a mix of rich media, including images, videos, and infographics, can dramatically enhance reader engagement, thereby signaling to Google the value and relevance of the web page.

Incorporating timely, well-researched topics that address the needs and curiosities of the target audience sets the groundwork for content that stands out:

- Identify emerging trends and generate content that provides thought leadership and insights.

- Engage directly with the audience through surveys or comments to uncover the questions and issues that matter most to them.

- Employ a consistent, identifiable voice throughout blog posts and product pages to build a cohesive brand narrative.

Furthermore, by optimizing the website structure and making strategic use of internal links, site owners can guide web crawlers through their site efficiently, aiding in the indexing process and contributing to the visibility of their pages in search results. Each web page should be intuitively linked to ensure a seamless navigation experience for users and crawlers alike.

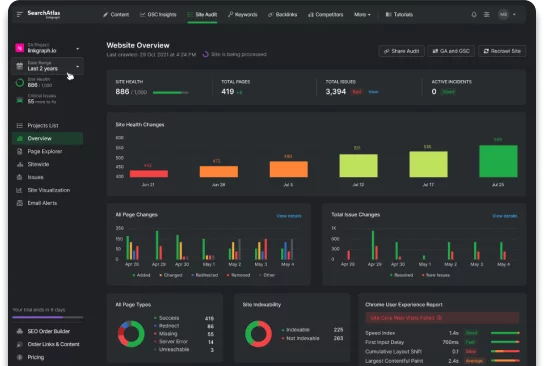

Using Analytics to Guide Unique Content Development

LinkGraph’s Search Atlas SEO tool empowers site owners to harness the analytical power necessary to sculpt content that aligns with search engine preferences. By scrutinizing user behavior data and performance metrics, owners gain invaluable insights, allowing them to fine-tune their content for optimal impact and indexing speed.

Armed with these analytical insights, content creators can pinpoint the exact topics and angles that resonate with their audience, ensuring that each blog post or product page not only captivates but also caters to the evolving demands of searchers and search engines alike. This strategic approach facilitates the development of genuinely unique content that stands a greater chance of being swiftly indexed and prominently displayed in Google search results.

Keeping Content Fresh With Regular Updates

The digital realm is in a perpetual state of flux, with the freshness of site content playing a pivotal role in its visibility and rank in Google’s search results.

To maintain and enhance a site’s presence within this dynamic landscape, a steadfast commitment to regular content updates is critical.

This involves laying out a structured content calendar for systematic posting, refining existing material through content re-optimization to preserve its relevance, and integrating user-generated insights to elevate the value of the content offered.

Together, these practices compose a symphony of strategies that, when skillfully executed, can expedite indexing and amplify a site’s resonance with both web crawlers and users.

Establishing a Content Calendar for Consistent Posting

A content calendar provides the strategic framework for regular posting that sustained online visibility requires. A meticulous schedule drives consistency, ensuring that visitors and search engines alike are greeted with fresh, engaging content, which supports both Google indexation and user retention.

Setting this cadence helps the site owner time content releases to match market trends and user interests, a proactive step that captivates an audience and gives search engine crawlers a steady supply of index-ready material:

- Craft a timeline that charts content publication, integrating seasonal topics and industry events for heightened relevance.

- Develop a repertoire of content types, from in-depth guides to interactive product pages, to diversify visitor experiences and indexing opportunities.

- Allocate resources effectively, aligning article releases with peak engagement times to maximize exposure.

The Role of Content Re-Optimization in Maintaining Relevance

In the realm of digital marketing, the practice of content re-optimization is vital for maintaining a site’s relevancy and its standing in Google search results. It involves the periodic revisiting of existing content to ensure it reflects the latest industry trends, algorithm updates, and user preferences.

By enhancing older web pages with new insights, fresh data, and improved optimization techniques, LinkGraph guides site owners to breathe new life into their content, thus bolstering its attractiveness to both visitors and web crawlers seeking to update the search engine index.

Incorporating User Feedback to Improve Content Value

Responsiveness to user feedback keeps site content adaptable and valuable. By analyzing comments, surveys, and direct communications, LinkGraph helps a site evolve in step with its audience’s needs. Folding visitor input into content refinement keeps the material authoritative and relevant, which supports its visibility in the SERPs.

Aligning content with user expectations is an astute strategy that LinkGraph adeptly orchestrates for its clients. It demonstrates a site’s commitment to quality and user satisfaction, fostering a collaborative environment where audience insights inform content creation, leading to enriched experiences for visitors and improved indexing outcomes for site owners.

| Aspect | Function | Benefit |
|---|---|---|
| User Comments | Gather direct feedback | Refine content relevance |
| Surveys | Measure audience interests | Align with user preferences |
| Direct Communication | Engage in dialogue | Build community and trust |

Streamlining Your Site: The Power of Content Pruning

In an ever-evolving digital world, site owners are recognizing the transformative impact of content pruning on the health and visibility of their sites.

This methodology isn’t simply about reducing quantity; it’s an intelligent approach to enhancing the quality of a site’s offerings.

By identifying and removing pages that underperform, site owners sharpen their site’s focus, which can lead to faster indexing and bolster its presence in Google search results.

Instituting a content removal schedule contributes to the site’s optimal functionality, ensuring that every page serves a purpose and supports the overall site health.

As site owners explore the nuances of content pruning, they uncover the plethora of benefits it delivers, not just to their website’s health, but also to their authority and ranking in the dynamic landscape of Google search.

Identifying and Removing Underperforming Pages

Through meticulous analysis, LinkGraph’s SEO services help site owners review the performance metrics of each web page. By highlighting pages that fail to contribute positively to the site’s SEO strategy, these services identify content ripe for pruning, optimizing the site’s structure for Google’s indexing processes.

Elimination of underperforming content is not mere subtraction; it is strategic simplification. By excising these digital deadweights, LinkGraph ensures that every remaining page enhances the site’s thematic consistency, making for a swift, efficient crawl and indexation that solidifies the site’s standing in Google search results.

How to Set Up a Content Removal Schedule

Establishing a content removal schedule requires a systematic approach to audit a website efficiently: evaluating metrics to determine the contribution of each page to the site’s SEO effectiveness and user engagement. Site owners should pencil in regular audits, possibly on a quarterly basis, allowing enough time for content to perform and accrue meaningful data.

| Action | Frequency | Objective |
|---|---|---|
| Content Audit | Quarterly | Evaluate individual page performance |
| Data Analysis | During Audit | Identify underperforming pages |
| Content Removal | Post-Audit | Eliminate non-contributing pages |

Decisive action must follow the auditing process: linking the pruning schedule to concrete performance indicators such as traffic, bounce rates, and conversions. This data-driven approach ensures that content removal decisions bolster the site’s relevance and indexing potential, thereby enhancing its footprint on Google’s search results.
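The audit-then-remove cycle above can be sketched as a simple filter over exported page metrics. This is a minimal Python illustration under stated assumptions: the `PageStats` fields, the sample URLs, and the thresholds (50 monthly visits, an 85% bounce rate) are hypothetical placeholders rather than recommended values, and flagged URLs are candidates for human review, not automatic deletion.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    monthly_visits: int
    bounce_rate: float  # fraction of single-page sessions, 0.0 to 1.0
    conversions: int


def flag_for_pruning(pages, min_visits=50, max_bounce_rate=0.85):
    """Return URLs that convert nothing AND have low traffic or a high bounce rate.

    The thresholds are hypothetical defaults; tune them to your own baseline.
    """
    flagged = []
    for page in pages:
        low_traffic = page.monthly_visits < min_visits
        high_bounce = page.bounce_rate > max_bounce_rate
        if page.conversions == 0 and (low_traffic or high_bounce):
            flagged.append(page.url)
    return flagged


# Hypothetical quarterly audit export.
AUDIT = [
    PageStats("/guides/seo-basics", 1200, 0.45, 18),
    PageStats("/news/2019-office-party", 12, 0.92, 0),
    PageStats("/products/legacy-widget", 300, 0.90, 0),
    PageStats("/blog/tag/misc", 8, 0.60, 0),
]

if __name__ == "__main__":
    for url in flag_for_pruning(AUDIT):
        print("Review for removal:", url)
```

Requiring zero conversions before flagging keeps pages that still earn revenue off the removal list, even when their traffic is modest.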

The Benefits of Content Pruning on Overall Site Health

Content pruning emerges as a critical strategy, enhancing the overall health of a site by facilitating more efficient crawling and indexing by search engines. By removing outdated or irrelevant pages, LinkGraph helps site owners concentrate their SEO efforts on content that performs and frees crawl budget for pivotal sections, thus elevating a site’s presence on Google.

A leaner site structure, a direct result of content pruning, can significantly reduce crawl errors and dead ends encountered by web crawlers, ensuring that valuable pages are indexed more rapidly. This meticulous curation of a site’s content library not only improves user experience but also signals to Google a commitment to quality, a factor that substantively influences Google search results rankings.

Ensuring Your Robots.txt Supports Indexability

Mastering the nuances of your site’s robots.txt file is essential for anchoring its presence on Google’s search landscape.

Missteps in configuring this critical text file can inadvertently hinder web crawlers from parsing through pages meant to be displayed prominently in search results.

Site owners seeking optimized indexing must be vigilant—ensuring robots.txt directives are meticulously crafted to facilitate rather than obstruct the discoverability of web content.

This introduction lays the groundwork for delving into the subtleties of avoiding common mistakes with robots.txt, configuring it to support search engine visibility, and performing checks to eliminate any unintended blocks—vital practices for ensuring the file serves as a beacon, not a barricade, for indexing endeavors.

Common Mistakes to Avoid With Robots.txt Files

Within the technical landscape of SEO, the robots.txt file functions as a critical gatekeeper, telling web crawlers which URLs they may crawl. Note that it controls crawling rather than indexing: a disallowed URL can still appear in search results, without its content, if other pages link to it. A common oversight by site owners is accidentally instructing search engines to skip significant pages or directories through indiscriminate use of the “Disallow” directive, which can severely stunt a site’s presence within Google search results.

Another aspect that demands careful attention is the syntax used in the robots.txt file. A minor typographical error or an incorrect path can inadvertently block crawlers like Googlebot from accessing content intended for indexing. Keeping the commands in this file clear and precise is paramount for ensuring that a site’s valuable content is efficiently crawled and indexed, solidifying its search engine visibility.

How to Configure Robots.txt for Optimal Indexing

Configuring a robots.txt file for optimal indexing begins with identifying which content is key to a site’s SEO strategy. LinkGraph’s SEO services recommend writing clear, narrowly scoped directives that keep crawlers out of low-value areas while leaving the site’s most valuable pages fully crawlable, so these critical assets can be promptly indexed, hastening the site’s visibility on Google.

LinkGraph experts advise pointing to an up-to-date XML sitemap from the robots.txt file with a “Sitemap:” line, giving web crawlers like Googlebot a roadmap through the site’s architecture. This proactive step improves a site’s indexability, directing crawl resources where they do the most to boost the site’s stature in Google search results.
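As an illustration, a robots.txt file along these lines blocks only low-value areas and declares the sitemap location with a “Sitemap:” line. The paths and the example.com domain are hypothetical. The sketch uses Python’s standard-library `urllib.robotparser` to confirm that the file parses as intended; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block only low-value areas, declare the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""


def parse_robots(text: str) -> RobotFileParser:
    """Parse robots.txt content held in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    robots = parse_robots(ROBOTS_TXT)
    print(robots.site_maps())  # ['https://www.example.com/sitemap.xml']
    print(robots.can_fetch("Googlebot", "https://www.example.com/products/widget"))  # True
    print(robots.can_fetch("Googlebot", "https://www.example.com/admin/settings"))  # False
```

The “Sitemap:” line is independent of any “User-agent” group, so it can sit anywhere in the file.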

Checking Your Robots.txt File for Unintentional Blocks

Site owners must rigorously inspect their robots.txt file to uncover any unintended instructions that obstruct web crawlers from indexing essential pages. LinkGraph’s suite of SEO tools includes features to audit and analyze robots.txt files, ensuring no valuable content is inadvertently shielded from Google’s view.

Regular evaluation of a robots.txt file is imperative, as unintended blocks can occur following updates or site modifications. LinkGraph provides expert assessments, guiding site owners through the critical process of verifying that every declaration in the robots.txt file aligns with their indexing goals and does not impede Googlebot’s access to indexable content.
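One way to automate part of this check is to test a list of must-crawl URLs against the current robots.txt. The Python sketch below uses the standard-library `urllib.robotparser`; the rule and URLs are hypothetical. It illustrates a frequent unintended block: “Disallow” values match as path prefixes, so a rule meant for an archive section can also block the live blog. This parser evaluates rules in file order and does not implement Google’s `*` and `$` wildcards or its most-specific-rule precedence, so results for complex files should be confirmed in Google Search Console.

```python
from urllib.robotparser import RobotFileParser


def find_blocked(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs in `urls` that `user_agent` is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rule intended to hide only an old /blog-archive/ section.
# Disallow values are prefixes, so "/blog" matches far more than that.
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog
"""

MUST_BE_CRAWLABLE = [
    "https://www.example.com/blog/launch-announcement",
    "https://www.example.com/blogging-guide",
    "https://www.example.com/products/widget",
]

if __name__ == "__main__":
    for url in find_blocked(ROBOTS_TXT, MUST_BE_CRAWLABLE):
        print("Unintentionally blocked:", url)
```

Narrowing the rule to “Disallow: /blog-archive/” leaves all three URLs crawlable, which makes this kind of check a useful step after every robots.txt edit.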

Identifying and Resolving Inadvertent Noindex Tags

In the dynamic realm of website optimization, a site’s indexation by Google can be hampered by the inadvertent deployment of noindex tags.

These tags, when misapplied, can make pages invisible to search engines, thus undermining a site’s discoverability.

To navigate around this digital labyrinth, it is paramount for site owners to become adept at uncovering and rectifying rogue noindex tags.

Employing tools to identify these obstructions, adhering to best practices for scrutinizing indexing tags, and grasping the implications of misplaced noindex directives represent pivotal steps toward securing a website’s prominence on Google’s search platform.

Utilizing Tools to Find and Fix Rogue Noindex Tags

LinkGraph equips site proprietors with precise instruments designed to scour their digital territories for any unwittingly placed noindex tags. This advanced diagnostic capability ensures that all content destined for Google’s search results is visible to the tireless web crawlers that populate the index.

Comprehensive audits via LinkGraph’s SEO tools illuminate instances of noindex directives that may stifle a page’s chances for proper indexing: a crucial step that aligns the site’s content with Google’s meticulous indexing standards.

| SEO Challenge | Tool Function | SEO Benefit |
|---|---|---|
| Inadvertent Noindex Tags | Audit and Detection | Ensures Content Visibility for Crawling |
| Page Visibility Issues | Comprehensive Scans | Aligns Content with Google Indexing Standards |

Best Practices for Regularly Checking Your Indexing Tags

To safeguard a site’s index status, it is critical to schedule regular assessments of indexing tags. LinkGraph actively encourages site owners to integrate thorough inspections of noindex tags into their SEO review cycle, ensuring these directives are utilized intentionally and appropriately.

A site’s visibility in search engine results hinges on the meticulous examination of indexing signals sent to Googlebot and other web crawlers. LinkGraph’s expert services emphasize the importance of recurring validation of these crucial tags to preempt indexing oversights and sustain a site’s search engine presence:

- Conduct comprehensive site audits post-deployment and after significant content updates.

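- Examine both the page HTML (the robots meta tag) and the server’s HTTP response headers (the X-Robots-Tag header) on old and new pages, since a noindex directive can be delivered either way.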

- Utilize specialized tools provided by LinkGraph to streamline the audit process and detect any unintended noindex tags.
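For the detection step, a minimal Python sketch can look for noindex in both places it can appear: a robots (or googlebot) meta tag in the HTML, and an X-Robots-Tag HTTP response header. It uses only the standard library; the `has_noindex` helper is hypothetical, and fetching the HTML and headers is left to whatever HTTP client or crawl export you already use. It also treats “none” as blocking, since that value is shorthand for noindex, nofollow.

```python
from html.parser import HTMLParser

BLOCKING_TOKENS = {"noindex", "none"}  # "none" means noindex, nofollow


def _tokens(value: str) -> set[str]:
    """Split a directive list such as 'noindex, follow' or 'googlebot: noindex'."""
    tokens = set()
    for part in value.split(","):
        # Drop an optional user-agent prefix like 'googlebot:' (header form).
        tokens.add(part.split(":", 1)[-1].strip().lower())
    return tokens


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in {"robots", "googlebot"}:
            self.tokens |= _tokens(attr_map.get("content", ""))


def has_noindex(html: str, headers: dict[str, str]) -> bool:
    """True if the page's meta tags or X-Robots-Tag header block indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    tokens = set(parser.tokens)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            tokens |= _tokens(value)
    return bool(tokens & BLOCKING_TOKENS)


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(has_noindex(page, {"Content-Type": "text/html"}))  # True
```

Running a check like this over every URL that is supposed to rank, after each deployment, catches a staging-environment noindex before it reaches production traffic.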

Understanding the Consequences of Improperly Placed Noindex Tags

When noindex tags are mistakenly applied, they yield a significant disconnect between a site’s content and its visibility on Google. Such errors lead to certain pages being excluded from search results, swiftly undermining a site’s ability to attract traffic and diminishing the reach of potentially valuable content.

Improper noindex tags can inflict serious damage on a site’s SEO until they are corrected, impeding the efforts of webmasters to present their most compelling material to the digital audience. The inadvertent removal of pivotal pages from Google’s index leaves a silent void where vibrant user engagement should have flourished:

- Noindex tags placed in error bar entry to Google’s searchable web index, hiding content from searchers.

- Crucial product and category pages affected by incorrect noindex directives may experience diminished visitor traffic.

- Miscalculations with noindex tags compromise a website’s ability to compete with other sites for search engine real estate.

Fine-Tuning Your Sitemap for Improved Indexing

As the digital landscape continues to expand, fine-tuning a sitemap gives site owners a vital way to improve how their pages are indexed and thereby lift a new site’s prominence on Google.

A sitemap acts as a navigational guide, enabling search engines to intelligently crawl and comprehend the complete architecture of a website.

With an optimized sitemap, owners can ensure every valuable page is accounted for and presented to Google in an organized fashion.

This optimization, involving essential elements of an effective sitemap, the inclusion of all pertinent pages, and regular audits, forms the linchpin for a more profound understanding by search engines, which in turn accelerates the indexing process and escalates visibility in search results.

Essential Elements of an Effective Sitemap

An effective sitemap serves as an invaluable indexing aid, succinctly communicating a website’s layout to search engines. It is meticulously structured, ensuring that every significant URL is listed, making the vast tapestry of content easily navigable by search engines like Google.

Keeping the lastmod values accurate matters more than adding priority tags. Google’s sitemap documentation states that it ignores the priority and changefreq values, but it does use lastmod when that date is consistently and verifiably accurate, which helps Googlebot recognize which pages have changed and warrant a fresh crawl.
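A sitemap following the sitemaps.org protocol can be generated from a list of URLs and their last-modified dates. The Python sketch below uses the standard library’s `xml.etree.ElementTree` and emits only the required `<loc>` element plus `<lastmod>`. The page URLs and dates are hypothetical; in practice the dates should reflect when each page’s content actually changed.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, str]) -> str:
    """Build sitemap XML from a mapping of absolute URL -> last-modified date.

    Dates use the W3C Datetime format accepted by the sitemap protocol,
    e.g. "2024-05-01".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in sorted(pages.items()):  # sorted for stable output
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap({
        "https://www.example.com/": "2024-05-01",
        "https://www.example.com/products/widget": "2024-04-18",
    }))
```

Generating the file from the same source of truth that publishes pages keeps new URLs from being left out.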

How to Ensure All Relevant Pages Are Listed in Your Sitemap

To ensure comprehensive visibility on Google, site owners must meticulously confirm that their sitemap includes all relevant pages. This includes examining and verifying that each product page, category page, and authoritative blog post is listed in the sitemap, thereby facilitating Googlebot’s ability to navigate and index the site’s content efficiently.

LinkGraph’s SEO services advocate for a thorough review of a website’s sitemap to identify and include any new or updated URLs, ensuring none slip through the cracks. Regular audits, performed with the assistance of LinkGraph’s skilled team, confirm that the sitemap remains an accurate reflection of the site’s current layout, critically supporting the content’s indexability and search engine accessibility.

Regular Sitemap Audits for Enhanced Google Understanding

Conducting regular sitemap audits is vital for affirming a site’s clarity to Google’s algorithms. These audits ensure the search engine’s understanding of site architecture maintains pace with ongoing changes, thereby accelerating indexation and augmenting visibility.

Scheduling and executing routine sitemap assessments keeps a website’s navigational blueprint polished and precise:

- Identifying newly added or altered URLs for prompt inclusion.

- Ensuring lastmod dates accurately reflect when each page’s content last changed.

- Verifying the removal of obsolete or redundant listings to present a streamlined sitemap to Googlebot.

This proactive approach not only secures optimal crawl efficiency but also reinforces the alignment between the site’s content strategy and Google’s indexing priorities.
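The audit steps above can be partly automated by comparing the URLs listed in the sitemap against the set of pages that currently exist and should be indexed. In this Python sketch the `LIVE` set is a hypothetical input (for example, an export from your CMS or a site crawl), and the sample sitemap is invented for illustration.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Extract every <loc> value from a standard urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def audit_sitemap(sitemap_xml: str, live_urls: set[str]) -> dict[str, set[str]]:
    """Compare sitemap entries with the pages that should currently be indexed."""
    listed = sitemap_urls(sitemap_xml)
    return {
        "missing": live_urls - listed,   # live pages the sitemap leaves out
        "obsolete": listed - live_urls,  # listed pages that no longer exist
    }


SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old-promo</loc></url>
</urlset>"""

LIVE = {"https://www.example.com/", "https://www.example.com/new-guide"}

if __name__ == "__main__":
    print(audit_sitemap(SITEMAP, LIVE))
```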

Avoiding the Pitfalls of Incorrect Canonical Tags

In the intricate dance of search engine optimization, one misstep with canonical tags can trip up indexing efforts, causing significant setbacks in a new site’s quest for a Google presence.

Canonical tags, when employed correctly, consolidate search authority by steering search engines towards the most definitive version of similar or duplicate content.

However, if mismanaged, these tags can derail web crawlers, leading to indexing paralysis and a diluted site presence.

This introduction paves the way for a deeper exploration into identifying and resolving rogue canonical tags, ensuring their meticulous verification and correction, and delving into their profound impact on shaping a new site’s visibility in the digital arena.

How Rogue Canonical Tags Can Impede Indexing

Rogue canonical tags create significant obstructions within the indexing journey. By erroneously guiding search engines to consolidate indexing efforts on the incorrect page, these misleading directives impede a site’s ability to present its intended primary content to Google, diluting its search engine presence and undermining deliberate SEO strategies.

Careful delineation of canonical indications is critical to maintain index alignment and prevent the scattering of search engine authority. Misguided signals sent to Google via improper canonical tags lead to a muddled understanding of a site’s content hierarchy:

- Distorting search engine insights into page significance.

- Directing valuable indexing resources away from the actual pivotal content.

- Jeopardizing content’s search rankings and visibility.

Steps to Verify and Correct Your Site’s Canonical Tags

In the meticulous process of SEO, verification of canonical tags is paramount. LinkGraph’s robust SEO services furnish site owners with the necessary tools to conduct exhaustive audits, evaluating the use and accuracy of canonical tags to confirm their correct implementation. This critical step ensures that search engines are clearly directed to the primary version of content, thereby preserving the integrity of a new site’s indexation and search visibility.

Correction of canonical tags, when anomalies are identified, is an urgent task to maintain a coherent site structure. The expertise provided by LinkGraph enables swift remediation, updating tags to reflect the intended hierarchy of content. Such timely interventions prevent search engines from diluting a site’s authority, securing its rightful place within the competitive landscape of Google’s search results.
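A verification pass can be sketched in Python by extracting each page’s rel="canonical" link and classifying it. This minimal example uses only the standard library and reports whether the canonical is missing, self-referencing, pointing elsewhere, or conflicting (more than one distinct target). The helper names and URLs are hypothetical, and the exact string comparison is a simplification that treats, for instance, a trailing slash as a different URL.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if "canonical" in attr_map.get("rel", "").lower().split() and attr_map.get("href"):
            self.hrefs.append(attr_map["href"])


def check_canonical(page_url: str, html: str) -> str:
    """Classify a page's canonical declaration relative to its own URL."""
    parser = CanonicalParser()
    parser.feed(html)
    # Resolve relative hrefs such as "/shoes" against the page URL.
    targets = {urljoin(page_url, href) for href in parser.hrefs}
    if not targets:
        return "missing"
    if len(targets) > 1:
        return "conflicting"
    target = targets.pop()
    return "self" if target == page_url else f"points to {target}"


if __name__ == "__main__":
    html = '<link rel="canonical" href="https://www.example.com/shoes">'
    print(check_canonical("https://www.example.com/shoes?color=red", html))
```

A filtered URL pointing to its unfiltered version is usually intended; a key landing page pointing somewhere else is the kind of anomaly worth correcting.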

The Impact of Canonicalization on New Site Presence

The strategic implementation of canonicalization exerts a profound impact on a new site’s visibility in Google’s search ecosystem. Correct canonical tagging ensures that search engines identify and index the version of content intended to represent the site, bolstering the new site’s authority and search presence.

Precisely executed canonicalization streamlines the user experience by presenting consistent and authoritative content, which is instrumental in attracting and retaining an engaged audience. The consolidation of page authority through accurate canonical tags amplifies a site’s signal of relevance to search engines:

- Emphasizes the primary content to search engines, improving crawl efficiency.

- Prevents dilution of page authority, bolstering the site’s search rank potential.

- Ensures users are guided to the most relevant and informative content, fostering trust and authority.

Strengthening Site Structure With Internal Linking

In an online ecosystem teeming with competition, the architecture of a new site’s internal link framework is fundamental to securing its place within Google’s search domain.

Meticulous crafting of powerful internal links anchors the SEO strategy, serving as conduits that channel the flow of page authority and enhance the discoverability of content.

It falls to site owners and developers alike to master internal linking techniques—a task that not only fortifies the navigational resilience of a site but also magnifies its indexing potential.

By embedding a network of strategic internal links, they set the stage for a website that is rich in page authority, optimized for user experience, and primed for a rapid and comprehensive inclusion in Google’s coveted index.

The Importance of Powerful Internal Links for SEO

In the competitive arena of search engine optimization, adeptly structured internal linking stands as a central pillar. It cultivates a robust navigational network that allows both users and search engines to traverse a new site with ease, boosting its credibility and enhancing the user experience.

Through the strategic deployment of internal links, site owners effectively distribute page authority across their domain, ensuring key sections receive deserved prominence. This internal link architecture is instrumental in signaling to Google the hierarchy and content value within a site, which is pivotal in driving its search engine rankings upward.

Techniques to Build a Strong Internal Link Network

To build a robust internal link network, LinkGraph recommends crafting organic connections between individual web pages that guide visitors with logical precision and relevance, fostering a seamless navigational experience. Such practical link placement allows Google’s algorithms to discern a clear content hierarchy, underscoring the most significant pages and enhancing the site’s overall thematic strength.

Using contextually relevant anchor text for internal links gives users and web crawlers an unobtrusive guidepost. It reinforces the subject matter of linked pages and helps Googlebot understand how a site’s topics relate. This technique solidifies the foundation of a site’s architecture, enabling Google to index content more accurately and benefiting both user engagement and site visibility.
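Auditing anchor text starts with listing each page’s internal links. This is a minimal sketch, assuming you already have a page’s HTML as a string. The names `LinkCollector`, `internal_links`, `weak_anchors` and the `GENERIC_ANCHORS` list are illustrative choices, not part of any SEO tool’s API. A link counts as internal when its host matches the page’s host.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Illustrative list of anchors that say nothing about the linked page.
GENERIC_ANCHORS = {"", "click here", "here", "read more", "more", "learn more"}


class LinkCollector(HTMLParser):
    """Collects (href, anchor text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []        # list of (href, anchor_text)
        self._href = None      # href of the <a> currently open, if any
        self._text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text_parts = []

    def handle_data(self, data):
        if self._href is not None:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace in the visible link text.
            text = " ".join("".join(self._text_parts).split())
            self.links.append((self._href, text))
            self._href = None


def internal_links(html: str, page_url: str):
    """Return [(absolute_url, anchor_text)] for links on the same host as page_url."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    host = urlparse(page_url).netloc
    result = []
    for href, text in parser.links:
        absolute = urljoin(page_url, href)
        if urlparse(absolute).netloc == host:  # drops external and mailto: links
            result.append((absolute, text))
    return result


def weak_anchors(links):
    """Internal links whose anchor text is generic rather than descriptive."""
    return [(url, text) for url, text in links if text.lower() in GENERIC_ANCHORS]
```

Links that `weak_anchors` flags are candidates for rewording, so that the anchor describes the destination page.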

How Internal Linking Can Boost Indexing and Page Authority

Internal linking serves as the backbone of a site’s indexing strategy, ensuring that Google’s web crawlers can discover and understand the breadth of content available. By crafting a network of links that tie related content together, LinkGraph can significantly lift a site’s index status, reinforcing each page’s role in the tapestry of the site and hastening its inclusion in the search engine’s index.
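One way to check whether crawlers can reach every page is to measure click depth: the minimum number of internal links needed to get from the home page to each URL. The sketch below runs a breadth-first search over a hypothetical `links` dictionary, mapping each page URL to the internal URLs it links to, which you would assemble from a crawl of your own site. Pages that exist but are never reached are orphans. Googlebot can find them only through other means, such as a sitemap.

```python
from collections import deque


def click_depths(links: dict, home: str) -> dict:
    """Breadth-first search over an internal link graph.

    links maps each page URL to the list of internal URLs it links to.
    Returns {url: minimum number of clicks from the home page}.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # In an unweighted graph, BFS reaches each page first by a shortest path.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict, home: str, all_pages) -> set:
    """Pages that exist (e.g. listed in a sitemap) but are unreachable via internal links."""
    return set(all_pages) - set(click_depths(links, home))
```

Pages with a high click depth, or none at all, are natural candidates for new internal links from well-linked hub pages.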

Moreover, a strategic internal linking framework elevates page authority by distributing SEO strength across the site. When this framework is executed with precision, LinkGraph helps new sites build a robust internal structure in which high-value pages support one another. This reinforces their presence in Google search results and makes the content more accessible to potential visitors.
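To illustrate how internal links distribute authority, the sketch below applies the classic PageRank power iteration to an internal link graph. This is a deliberate simplification. Google’s current ranking systems use many more signals, so treat the scores only as relative hints about which pages your internal links favor. The function name, the damping factor of 0.85 and the iteration count are illustrative assumptions.

```python
def internal_pagerank(links: dict, damping: float = 0.85, iterations: int = 50) -> dict:
    """Classic PageRank power iteration over an internal link graph (illustrative only).

    links maps each page URL to the internal URLs it links to.
    Returns {url: score}; the scores sum to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    if n == 0:
        return {}
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Rank held by pages with no outgoing links is spread evenly across all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for page in pages:
            targets = set(links.get(page, []))  # count repeated links to a page once
            for target in targets:
                new_rank[target] += damping * rank[page] / len(targets)
        rank = new_rank
    return rank
```

Consider a three-page site in which the home page links to two articles and each article links back. The home page accumulates the highest score, which shows how reciprocal internal links concentrate authority on hub pages.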

Expedite Indexing With Google Search Console Submissions

In an ever-competitive digital landscape, the importance of promptly securing your site’s visibility on Google cannot be overstated.

For site owners, the Google Search Console emerges as an indispensable asset in this quest.

Used well, its submission tools can shorten the time it takes for new web pages to be crawled and indexed. Google does not, however, guarantee when, or whether, a submitted page will appear in search results.

This section covers the keys to using Search Console effectively, from the URL Inspection Tool to judiciously submitting pages for indexing.

Site owners must also track their indexing status diligently, confirming that their content is properly recognized and served by Google’s algorithms.

Using these tools skillfully is critical for expanding a site’s online footprint and connecting with an engaged audience.

A Guide to Submitting Your Pages via Google Search Console

Google Search Console is a pivotal platform for site owners intent on increasing their website’s visibility in Google search results. Its URL Inspection Tool lets them request indexing for an individual new or updated page, a key step in getting that page in front of Google sooner.

The inspection report shows a page’s current indexing status: whether it is indexed, when Googlebot last crawled it, and which canonical URL Google selected. Site owners can use this to identify and remedy barriers that could impede visibility on Google. Addressing those barriers keeps a site navigable and accessible for both users and search engines, fostering an authoritative online presence.

| Action | Tool Used | Outcome |
|---|---|---|
| Submission of Pages | URL Inspection Tool | Direct Indexing Submission to Google |
| Status Evaluation | Search Console Report | Insights into Indexing Hurdles and Verification |
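Alongside per-URL requests, Search Console’s Sitemaps report accepts an XML sitemap that lists many pages at once. The following is a minimal sketch, assuming the sitemaps.org 0.9 protocol and a hypothetical `build_sitemap` helper that takes `(url, lastmod)` pairs. It relies on `xml.etree.ElementTree`, which also escapes characters such as `&` in URLs, as the protocol requires.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries) -> bytes:
    """Build sitemap.xml content from (url, lastmod) pairs.

    lastmod is a 'YYYY-MM-DD' string, or None to omit the element.
    Returns UTF-8 encoded bytes that include the XML declaration.
    """
    # Declare the sitemap namespace as the default namespace on the root element.
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = loc  # ElementTree escapes &, <, >
        if lastmod:
            ET.SubElement(url_el, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)  # Python 3.8+
```

Write the returned bytes to `sitemap.xml` at the site root, then submit that URL in Search Console’s Sitemaps report.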

When and How to Use the URL Inspection Tool

The URL Inspection Tool is the site owner’s main instrument for examining individual pages in Google Search Console. It is most useful right after a new page is published or an existing page is substantially updated. At that point it confirms whether the content is both live and accessible to Google’s indexing process.

To use it, enter the exact URL to be assessed into the inspection bar at the top of Search Console, and the tool reports the page’s current index status. If the page is not yet indexed, or has changed since the last crawl, site owners can click Request Indexing. This step helps bridge the gap between content creation and visibility on Google’s SERP. Google limits how many of these requests can be made, so they are best reserved for priority pages.
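For checking many URLs, Search Console also exposes URL inspection programmatically through the Search Console API’s `urlInspection.index.inspect` method. The sketch below only prepares the HTTP request with Python’s standard library. It assumes you already hold an OAuth 2.0 access token for an account with access to the property, and the helper name `build_inspect_request` is hypothetical. Note that the API reports status only: requesting indexing remains a manual action in the Search Console interface.

```python
import json
import urllib.request

INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def build_inspect_request(site_url: str, page_url: str, access_token: str) -> urllib.request.Request:
    """Prepare (but do not send) a URL Inspection API call for one page.

    site_url must match a verified Search Console property, e.g.
    "https://example.com/" or "sc-domain:example.com".
    access_token is an OAuth 2.0 token obtained separately (assumed; no auth handling here).
    """
    body = {"inspectionUrl": page_url, "siteUrl": site_url, "languageCode": "en-US"}
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` returns JSON whose `inspectionResult` object holds the page’s index status.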

Tracking Indexing Status Post-Submission in Search Console

After submitting a page for indexing through Google Search Console, vigilant site owners use its reporting features to track the request’s progress. Monitoring indexing status is a critical step in confirming that Google recognizes and integrates new content efficiently.

Re-inspecting the URL shows whether the page is now indexed and when Googlebot last crawled it. The Page indexing report charts indexed and non-indexed pages across the site over time, along with the reasons pages were excluded. With this information, site owners can understand the current indexation status of their pages and adjust their SEO strategy to address any issues revealed.

| Stage | Action | Outcome |
|---|---|---|
| 1. Submission | Page URL submitted via Search Console | Request enters Google’s indexing pipeline |
| 2. Tracking | Monitor report in Search Console | Indexing status and progress are revealed |
| 3. Analysis | Review feedback and act accordingly | Refine SEO approach to optimize indexation |
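When tracking results programmatically, the useful part of a URL Inspection API response sits under `inspectionResult.indexStatusResult`. The helper below is a sketch with a hypothetical name. The field names (`verdict`, `coverageState`, `lastCrawlTime`, `robotsTxtState`, `googleCanonical`, `userCanonical`) reflect our reading of the API reference and should be checked against the current documentation. The helper also flags cases where Google selected a different canonical from the one the page declares, a common reason a submitted URL is not indexed as expected.

```python
def summarize_inspection(response: dict) -> dict:
    """Extract tracking-relevant fields from a URL Inspection API response (sketch).

    Field names are assumed from the indexStatusResult object in the
    Search Console API reference; verify them before relying on this.
    """
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    google_canonical = status.get("googleCanonical")
    user_canonical = status.get("userCanonical")
    return {
        "verdict": status.get("verdict", "VERDICT_UNSPECIFIED"),
        "coverage": status.get("coverageState"),
        "last_crawl": status.get("lastCrawlTime"),
        "robots_txt": status.get("robotsTxtState"),
        "google_canonical": google_canonical,
        "user_canonical": user_canonical,
        # True only when both canonicals are known and Google chose a different one.
        "canonical_mismatch": (
            google_canonical is not None
            and user_canonical is not None
            and google_canonical != user_canonical
        ),
    }
```

Storing these summaries with a date on each run gives the simple history needed for the Analysis stage in the table above.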

Leveraging Plugins for Instant Indexing

In their quest for digital dominance, savvy site owners seek solutions that promise swift entry into Google search results.

Instant indexing plugins are one such option, offering a faster path for new content to be noticed and processed by Google.

This subsection examines how Rank Math’s Instant Indexing Plugin works, outlines the critical steps for setting up such plugins, and explores ways to gauge how effective these indexing tools really are.

Throughout, the aim is to give site owners actionable insights that fit within Google’s evolving guidelines.

Introduction to Rank Math’s Instant Indexing Plugin

Rank Math’s Instant Indexing Plugin is designed to connect a site directly to Google’s Indexing API. It notifies Google whenever content is published, updated, or removed, rather than waiting for Googlebot to rediscover it.

For site owners, this can shorten the usual waiting period for content discovery, opening avenues for earlier visibility, more web traffic, and greater user engagement. There is one important caveat. Google’s documentation states that the Indexing API is intended only for pages containing JobPosting or BroadcastEvent (livestream) structured data. Using it for other page types falls outside Google’s stated guidelines, and results for those pages are not guaranteed.

Setting Up Plugins for Immediate Google Indexing

To facilitate immediate Google indexing, the adept configuration of indexation plugins is imperative. LinkGraph’s seasoned SEO specialists assist clients in setting up such plugins, ensuring they are properly connected to Google’s indexing API, which can lead to the accelerated inclusion of content in search results.
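To show roughly what such a plugin does behind the scenes, the sketch below prepares a notification for the Indexing API’s `urlNotifications:publish` endpoint using Python’s standard library. It is not Rank Math’s code, and the helper name is hypothetical. It assumes you already have an access token for a service account with the `https://www.googleapis.com/auth/indexing` scope. Google officially supports this API only for pages with JobPosting or BroadcastEvent structured data.

```python
import json
import urllib.request

PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
ALLOWED_TYPES = {"URL_UPDATED", "URL_DELETED"}


def build_publish_request(page_url: str, access_token: str, change: str = "URL_UPDATED"):
    """Prepare (but do not send) an Indexing API notification for one URL.

    access_token is assumed to be an OAuth 2.0 token for a service account
    with the indexing scope; obtaining it is outside this sketch.
    """
    if change not in ALLOWED_TYPES:
        raise ValueError(f"change must be one of {sorted(ALLOWED_TYPES)}")
    body = {"url": page_url, "type": change}
    return urllib.request.Request(
        PUBLISH_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {access_token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Use `URL_UPDATED` when a page is published or changed and `URL_DELETED` when it is removed. Checking the type up front avoids sending requests the API would reject.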

Once the plugin is in place, new and updated URLs are reported to Google automatically as they are published. For eligible pages, this approach can reduce the typical delay in content recognition, giving site owners a strategic advantage in reaching search audiences quickly.

Measuring Effectiveness of Instant Indexing Tools

To gauge the success of instant indexing tools, site owners must analyze the velocity and completeness of content assimilation within Google’s database. LinkGraph accentuates the importance of monitoring search result appearance, offering a clear indicator of how quickly and efficiently the plugin communicates with Google’s indexing framework, crucial for a new site’s strategic online positioning.

Assessment of these indexing tools involves meticulously tracking the progress of submitted URLs through Google Search Console, where LinkGraph aligns this data with organic search traffic and user behavior analytics. This comprehensive approach ensures site proprietors can validate the direct impact of instant indexing technology on their site’s discoverability, optimizing their digital presence on Google’s robust platform.
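One simple way to quantify these measurements is to log when each URL was submitted and when it was first seen as indexed, then summarize the gap. The sketch below assumes a hypothetical log of `(url, submitted_at, indexed_at)` tuples with ISO 8601 timestamps. Compare the result against similar pages that were not submitted through the plugin. That comparison gives a fairer picture of the plugin’s effect than the raw numbers alone.

```python
from datetime import datetime
from statistics import median


def indexing_latency_report(records):
    """Summarize how quickly submitted URLs were indexed.

    records: iterable of (url, submitted_at, indexed_at). Timestamps are ISO 8601
    strings with an explicit offset such as "+00:00" (a "Z" suffix needs Python 3.11+).
    indexed_at is None if the URL is not yet indexed. This log format is hypothetical.
    """
    records = list(records)
    hours = []
    for _url, submitted, indexed in records:
        if indexed is not None:
            delta = datetime.fromisoformat(indexed) - datetime.fromisoformat(submitted)
            hours.append(delta.total_seconds() / 3600)
    return {
        "submitted": len(records),
        "indexed": len(hours),
        "indexed_share": len(hours) / len(records) if records else 0.0,
        "median_hours": median(hours) if hours else None,
    }
```

The median is used rather than the mean so that a few very slow URLs do not distort the typical time to index.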

Enhancing Site Quality for Quicker Indexing and Ranking

In the contemporary digital realm, improving a site’s quality goes hand in hand with getting it indexed quickly and ranked prominently on Google.

Mastery of on-page and technical SEO offers new sites a distinct pathway to realize their search potential, turning a freshly launched site into a hub of user engagement and organic discovery.

As website custodians pursue optimization, they navigate through methodologies designed to elevate user experience, ultimately encouraging organic indexing.

Embracing a philosophy of persistent refinement, LinkGraph nurtures site owners through focused enhancement strategies, with a keen eye on the evolution of search engine criteria and user expectations, to fortify a new site’s footing in the competitive hierarchy of Google search results.

Best Practices for on-Page and Technical SEO

Attaining prominence in Google’s search results necessitates a steadfast application of on-page and technical SEO best practices. Site owners must ensure that title tags, meta descriptions, and header tags are not only accurately reflective of their content but are also optimized with targeted keywords to entice search engines and users alike. This focus on on-page elements, coupled with a mobile-responsive design and page speed optimization, lays a robust foundation for both indexing and user experience.
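A quick way to apply these on-page checks across many pages is a small audit script. This sketch uses Python’s `html.parser` to flag a missing or long title, a missing or long meta description, and a missing `<h1>`. The 60- and 160-character limits are common rules of thumb assumed here for illustration, not Google limits, since Google truncates snippets by display width.

```python
from html.parser import HTMLParser

# Rough rules of thumb only; Google truncates snippets by pixel width, not character count.
MAX_TITLE_CHARS = 60
MAX_DESCRIPTION_CHARS = 160


class HeadAudit(HTMLParser):
    """Collects the <title> text, meta description, and <h1> count of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attr_map.get("name") or "").lower() == "description":
            self.description = attr_map.get("content") or ""
        elif tag == "h1":
            self.h1_count += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html: str) -> list:
    """Return human-readable on-page issues (an empty list means none were found)."""
    parser = HeadAudit()
    parser.feed(html)
    parser.close()
    title = " ".join(parser.title.split())
    issues = []
    if not title:
        issues.append("missing <title>")
    elif len(title) > MAX_TITLE_CHARS:
        issues.append(f"title longer than {MAX_TITLE_CHARS} characters")
    if not parser.description:
        issues.append("missing meta description")
    elif len(parser.description) > MAX_DESCRIPTION_CHARS:
        issues.append(f"meta description longer than {MAX_DESCRIPTION_CHARS} characters")
    if parser.h1_count == 0:
        issues.append("no <h1> heading")
    return issues
```

Running `audit_page` over every URL from a crawl or sitemap turns these best practices into a repeatable checklist.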

Technical SEO also plays a crucial role in preparing a new site for indexing, requiring a meticulously structured site architecture and clean, efficient code. Utilizing LinkGraph’s SEO services, site owners can perform thorough site audits to identify and rectify technical issues such as broken links, duplicate content, and crawl errors that can undermine a site’s search engine compatibility. These proactive technical enhancements assist Googlebot in navigating the site and contribute to a more favorable indexing and ranking outcome.
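The broken-link and duplicate-content checks mentioned above can be sketched over crawl results you already have. The function below assumes two hypothetical structures from your crawler: `pages`, mapping each URL to its HTTP status and body text, and `links`, mapping each URL to the internal URLs it links to. It detects only exact duplicates after normalizing whitespace and case. Finding near-duplicates needs fuzzier techniques such as shingling.

```python
import hashlib
from collections import defaultdict


def audit_crawl(pages: dict, links: dict):
    """Flag broken internal links and exact-duplicate pages from crawl results.

    pages: {url: (http_status, body_text)} for every URL the crawler fetched.
    links: {url: [internal URLs it links to]}.
    Returns (broken, duplicates):
      broken: [(source, target, status)], where status None means never fetched.
      duplicates: groups of URLs whose normalized text is identical.
    """
    broken = []
    for source, targets in links.items():
        for target in targets:
            status = pages.get(target, (None, ""))[0]
            if status is None or status >= 400:
                broken.append((source, target, status))

    # Group 200-OK pages by a hash of their lower-cased, whitespace-normalized text.
    groups = defaultdict(list)
    for url, (status, body) in pages.items():
        if status == 200:
            normalized = " ".join(body.lower().split())
            groups[hashlib.sha256(normalized.encode("utf-8")).hexdigest()].append(url)
    duplicates = [sorted(urls) for urls in groups.values() if len(urls) > 1]
    return broken, duplicates
```

Duplicate groups often point to URL variants, such as tracking parameters, that a canonical tag or redirect should consolidate.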

Optimizing User Experience to Encourage Organic Indexing

An optimized user experience benefits visitors and also supports a new site’s performance in organic search. By crafting an intuitive and engaging interface, LinkGraph helps site owners reduce bounce rates and increase session duration. Google has not confirmed these engagement metrics as direct indexing factors, but they tend to reflect the kind of satisfying experience its systems aim to reward.

Within the ecosystem of digital impressions, LinkGraph emphasizes the significance of fast-loading pages and straightforward site navigation to furnish a gratifying user experience. Such optimization facilitates the organic discovery of content by Google’s web crawlers, streamlining the indexing process and accentuating the site’s search engine presence.

Continuous Improvement Strategies for Site Optimization

In the dynamic pursuit of SEO excellence, LinkGraph champions continuous improvement strategies to maintain and advance a site’s optimization. With a commitment to perpetual refinement, regular audits elucidate areas for technical SEO enhancement, enabling swift identification and resolution of on-page obstacles that could hinder Google’s indexing efficiency and search ranking prowess.

LinkGraph’s rigorous focus on incremental evolution ensures that a website’s adaptability aligns with the ever-changing algorithms of search engines. Proactive updates and refinements in site structure and content, informed by ongoing performance analysis, consolidate the foundation for a website to achieve sustained growth in search engine visibility and higher rankings in Google search results.

Conclusion

In conclusion, accelerating indexing and elevating a new site’s presence on Google is crucial for ensuring online prominence and audience engagement.

Site owners should adopt a range of strategies. These include creating high-quality content that reflects Google’s E-E-A-T principles (experience, expertise, authoritativeness, and trustworthiness, an expansion of the earlier E-A-T), optimizing their robots.txt and sitemap for search crawlers, and employing internal linking to enhance site structure and page authority.

Utilizing tools like Google Search Console and, for eligible page types, instant indexing plugins can further expedite the indexing process.

Regular audits and refinements in line with SEO best practices ensure that the site remains aligned with the latest search engine algorithms and user preferences.

By meticulously applying these techniques, site owners can effectively boost their site’s visibility and ranking on Google, capitalizing on the potential to attract significant traffic and establish their site’s authority in the digital marketplace.