SEO Issues

Navigating SEO Challenges: How to Identify and Resolve Common SEO Issues

In the dynamic realm of Search Engine Optimization, recognizing and resolving SEO issues stand as the linchpin for securing prime positioning on search engine results pages.

Site owners and SEO professionals alike confront a myriad of challenges, from optimizing XML sitemaps and ensuring efficient site indexing to amplifying site speed and addressing content duplication.

Enhancing a website’s SEO performance is not a one-off task but a continuous endeavor that requires a deep understanding of both fundamentals and nuanced search engine behavior.

LinkGraph’s SEO services offer strategic solutions tailored to navigate these complexities, bolstering website performance and user experience.

Keep reading to discover actionable insights and advanced strategies that can transform your SEO approach and elevate your online presence.

Key Takeaways

- LinkGraph’s SEO Services Utilize Advanced Tools and Strategies to Address SEO Issues and Enhance Search Rankings

- Regular SEO Audits, Including Site Speed and Indexability Assessments, Are Integral to Maintaining a Website’s Health and Visibility

- Structured Data and the Correct Use of HTML Tags Such as Canonicals Are Important for Communicating With Search Engines and Avoiding Content Duplication

- Ongoing Optimization, Including Monitoring and Refining of robots.txt and XML Sitemaps, Is Necessary for Effective Search Engine Indexing

- Continuous Monitoring and Analysis of SEO Performance Is Essential for Achieving and Sustaining Improved User Engagement and Search Visibility

Identifying Key SEO Challenges in Your Strategy

In the realm of digital marketing, the mastery of search engine optimization is pivotal.

As site owners unravel the intricacies of SEO practices, recognizing and rectifying prevalent SEO issues becomes the linchpin for maximizing visibility on the search engine results page.

Companies need to embark on a meticulous assessment of their current SEO performance, discerning the root cause of faltering rankings within their unique digital footprint.

An astute comprehension of search algorithms’ influence on SEO, coupled with a vigilance against prevailing misconceptions, forms the foundation of a robust strategy.

This scrutiny paves the way for enhanced user experience and website performance, ultimately guiding site visitors efficiently along the pathways of a client’s digital domain.

Assessing Your Current SEO Performance

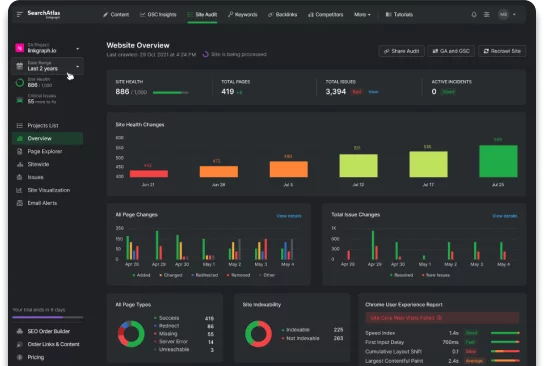

A thorough assessment of SEO performance demands an in-depth analysis of various metrics, including traffic patterns, bounce rates, and conversion figures. A reputable provider like LinkGraph utilizes advanced tools such as SearchAtlas SEO software to scrutinize these details, equipping site owners with a wealth of actionable data. Only by understanding the nuances reflected in this data can a company effectively fine-tune its online presence.

To accurately gauge the effectiveness of an SEO strategy, regular audits are indispensable. SEO professionals may offer a free SEO audit, giving a snapshot of a website’s health and revealing issues like suboptimal site speed or a convoluted user pathway. These insights lay the groundwork for strategic improvements and position a client’s site for higher search rankings.

Pinpointing the Main Issues Impacting Your Rankings

Pinpointing the precise issues impacting your rankings involves a keen analysis of backlinks, site structure, and content relevance. LinkGraph, through meticulous review, identifies factors such as a proliferation of low-quality backlinks or the absence of an effective internal linking structure that can cause search engines to undervalue a website.

Equally critical is the examination of on-page elements such as title tags, H1 headings, and meta descriptions to ensure they align with targeted keywords and user intent:

- Evaluating the robustness of the current backlink profile with free backlink analysis tools,

- Inspecting on-page SEO elements for optimization, such as title tags and H1 headings,

- Ensuring that content is relevant, informative, and resonates with the target audience,

- Analyzing the website’s technical SEO issues that could hinder search engine crawlers.

By leveraging SearchAtlas SEO software, LinkGraph expertly navigates these complexities, providing tailored SEO services that address underlying deficiencies and bolster search rankings in alignment with client goals.
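The on-page checks listed above can be approximated with a short script. The following is a minimal sketch using only the Python standard library; the names `OnPageAudit` and `audit_page`, the 60-character title limit, and the "exactly one H1" rule are illustrative assumptions, not rules taken from LinkGraph or any search engine.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collects the title, H1 headings, and meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1_headings = []
        self.meta_description = None
        self._current = None  # tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._current = tag
        elif tag == "h1":
            self._current = tag
            self.h1_headings.append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1_headings[-1] += data


def audit_page(html, max_title_length=60):
    """Return a list of on-page issues (thresholds are assumptions)."""
    parser = OnPageAudit()
    parser.feed(html)
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing title tag")
    elif len(title) > max_title_length:
        issues.append("title longer than %d characters" % max_title_length)
    if len(parser.h1_headings) != 1:
        issues.append("expected exactly one H1, found %d" % len(parser.h1_headings))
    if not parser.meta_description:
        issues.append("missing meta description")
    return issues
```

Calling `audit_page(html)` on a fetched page returns an empty list when all three elements are present and within the assumed limits, which makes it easy to run across a list of URLs during an audit.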

Understanding the Role of Search Algorithms in SEO

Grasping the nuances of search algorithms is crucial for mastering SEO as they are the invisible arbiters of online success. LinkGraph’s seasoned experts possess a deep understanding of these complex systems, implementing SEO strategies that are not only compliant but also predictive of algorithmic trends. They harness this knowledge to enhance the SEO performance of their clients’ websites, ensuring those sites meet the ever-evolving standards of search engines.

At the core of LinkGraph’s approach lies the recognition that algorithms prioritize user experience, relevancy, and site authority. Their white-label SEO services and SEO content strategies are therefore crafted to align with these principles, positioning websites to perform optimally in the eyes of these sophisticated digital evaluators. This strategic alignment is essential; it determines a website’s capacity to rise through the ranks and secure a dominant position on the search engine results page.

Checking for Common SEO Misconceptions

Common SEO misconceptions can obstruct the pathway to effective optimization, leading to flawed strategies that dampen SEO effectiveness. One misguided belief is that keyword stuffing will boost SEO rankings; however, modern search algorithms are fine-tuned to prioritize content quality over keyword quantity.

Another widespread fallacy is that SEO is a one-time setup, rather than an ongoing process. The dynamic nature of search engine algorithms demands continuous refinement of SEO practices to maintain and enhance visibility and rankings:

| SEO Misconception | Reality |
|---|---|
| Keyword stuffing improves rankings | Quality content holds more value |
| SEO is a set-and-forget task | Continual SEO efforts are essential |

Tackling the Complexities of Site Indexing

The cornerstone of a successful search engine optimization (SEO) strategy lies in the assurance that search engines can discover and index a website’s content.

Without proper indexing, a website becomes invisible to potential visitors, no matter the quality of its content or the strength of its backlinks.

Professionals must conduct a thorough evaluation of a site’s indexability to identify any obstacles that impede search engine crawlers.

Effective diagnosis of indexing errors is a critical component of SEO, which sets the foundation for correcting index-related SEO issues and ensures that each webpage is appropriately represented in search engine databases.

LinkGraph’s SEO services specialize in scrutinizing and resolving such discrepancies, paving the way for improved online visibility and seamlessness in user navigation.

Evaluating Your Site’s Indexability

Navigating the labyrinthine process of site indexing requires a discerning analysis to ensure each web page is accessible and visible to search engine crawlers. LinkGraph’s SEO professionals employ comprehensive evaluations of indexability, meticulously checking for the presence of XML sitemaps and the correct utilization of “noindex” tags, laying the groundwork for unimpeded discovery of a site’s content by search engines.

LinkGraph’s SEO services include a thorough inspection of a website’s architecture, pinpointing any technical SEO issues, such as improperly configured robots.txt files or orphan pages, which might hinder a search engine’s ability to index content effectively. This attention to detail is critical for establishing a digital presence that is both discoverable and favorably evaluated by search engines.
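One of the indexability checks mentioned above, spotting a stray "noindex" directive, can be sketched in a few lines. This example is an illustration under stated assumptions: `RobotsMetaParser` and `is_noindexed` are hypothetical names, the page HTML and response headers are assumed to have been fetched already, and the related "none" directive is not handled.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindexed(html, headers=None):
    """True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = ""
    # The same directive can also arrive as an X-Robots-Tag response header.
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            header_value = value.lower()
    return "noindex" in parser.directives or "noindex" in header_value
```

Running this over the pages that are expected to rank quickly surfaces any that carry the directive by mistake.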

Diagnosing Indexing Errors and Omissions

Diagnosing indexing errors often starts with the meticulous review of crawl reports and log files: LinkGraph’s SEO services utilize these resources to detect patterns that signal issues such as server errors or misplaced noindex directives. Identifying these hindrances is a critical step toward ensuring full visibility across all search engines.

Once these omissions are uncovered, swift action is taken to correct the HTML tags and HTTP status codes that, if left unaddressed, could significantly detract from search engine understanding and user accessibility:

| Indexing Error | Common Cause | Resolution Tactic |
|---|---|---|
| Orphan Pages | Lack of Internal Links | Integrate into Site’s Navigation Structure |
| 404 Errors | Deleted or Moved Content | Implement 301 Redirects or Restore Content |
| Blocked by robots.txt | Overly Restrictive Rules | Modify robots.txt File |

Complementing these efforts, the application of LinkGraph’s SEO audit unveils other potential indexing barriers, enabling web owners to construct a precise and efficacious roadmap to SEO success. This methodical approach ensures every valuable page is indexed accurately, bolstering a website’s comprehensive SEO potential.
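To make the log-file review described in this section more concrete, the sketch below counts error responses served to a crawler. It assumes access logs in the common or combined log format and identifies the crawler by a simple substring match on the user agent; `crawl_errors` and `LOG_PATTERN` are illustrative names, and real log layouts may need a different pattern.

```python
import re
from collections import Counter

# Matches the request line and status code of a common/combined log format entry.
LOG_PATTERN = re.compile(r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def crawl_errors(log_lines, bot_marker="Googlebot"):
    """Count 4xx/5xx responses served to a crawler, keyed by (status, path)."""
    errors = Counter()
    for line in log_lines:
        if bot_marker not in line:
            continue  # only look at requests from the crawler of interest
        match = LOG_PATTERN.search(line)
        if match and match.group("status")[0] in "45":
            errors[(int(match.group("status")), match.group("path"))] += 1
    return errors
```

Sorting the result with `errors.most_common()` puts the most frequently hit broken URLs first, which is usually where redirects or restored content pay off soonest.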

Implementing Fixes for Index-Related SEO Issues

Upon the unveiling of index-related shortcomings, swift and precise remediation is imperative. LinkGraph’s SEO services streamline the implementation of corrections with an acute focus on expediting indexation and mitigating impediments to search engine crawlers.

Site owners are empowered to execute effective fixes for SEO issues such as incorrect robots.txt configurations or missing alt attributes in images:

- Revising the robots.txt file to ensure essential pages are not inadvertently blocked from indexing,

- Adding or correcting alt attributes on images to enhance web accessibility and search engine recognition,

- Repairing or redirecting broken links to prevent 404 errors and ensure cohesive site structure.

Such targeted interventions, spearheaded by LinkGraph’s expertise, not only correct indexing discrepancies but also forge the path for improved overall website health and elevated search rankings.
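As one example of the fixes above, images without alt text can be located automatically before they are corrected by hand. This is a minimal sketch; `MissingAltFinder` and `images_missing_alt` are hypothetical names. Note that an empty alt attribute is legitimate for purely decorative images, so the output is a list to review rather than a list of definite errors.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Records the src of every <img> that has no non-empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        if not (attrs.get("alt") or "").strip():
            self.missing.append(attrs.get("src") or "(no src)")


def images_missing_alt(html):
    """Return image sources that need an alt text review."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing
```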

Mastering the Art of XML Sitemap Optimization

In the digital terrain of SEO, crafting an impeccable XML sitemap is a critical yet often underappreciated component.

It serves as an essential signal flare to search engines, illuminating the structure of a website and guiding crawlers to its most significant pages.

Piecing together a comprehensive XML sitemap demands attention to the intricacies of site architecture and involves a deliberate arrangement of its contents to reflect priority and relevance.

Rigorous submission and testing protocols follow, certifying that the sitemap functions as an accurate index, streamlining the path for search engines to appraise and index the website’s content effectively.

Creating a Comprehensive XML Sitemap

In the pursuit of SEO excellence, creating a comprehensive XML sitemap is not merely a technicality; it’s a strategic move towards improved site visibility and indexing. A sitemap effectively communicates a site’s structure to search engines, delineating the hierarchy and importance of pages, thereby facilitating more efficient crawling and indexing.

LinkGraph’s SEO services tackle this critical task with precision, focusing on the meticulous curation of sitemaps to ensure they cover all vital pages. Such inclusivity promotes the swift discovery and indexation of content by search engines, laying a solid foundation for enhanced digital presence:

| Action | Purpose | Outcome |
|---|---|---|
| Strategic Inclusion of Pages | Guide Crawlers to Key Content | Streamlined Indexing |
| Meticulous Curation | Accurate Site Representation | Optimized Search Engine Understanding |

Through this dedication, LinkGraph ensures each XML sitemap not only addresses the basic requirements but also serves as a powerful tool to boost a site’s SEO performance.
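For readers who want to see what such a sitemap looks like in practice, the following sketch generates one with Python’s standard library. The function name `build_sitemap` and the example.com URLs are illustrative assumptions; the namespace URI is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # serialize with a default xmlns


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("{%s}urlset" % SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "{%s}url" % SITEMAP_NS)
        ET.SubElement(entry, "{%s}loc" % SITEMAP_NS).text = url
        if lastmod:
            ET.SubElement(entry, "{%s}lastmod" % SITEMAP_NS).text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/about", None),
    ]))
```

Generating the file from the same source that drives the site’s navigation (a CMS export, for instance) helps keep the sitemap in step with the pages that actually exist.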

Ensuring Proper Site Structure in Your Sitemap

Ensuring an accurate reflection of site structure within your XML sitemap is essential for effective SEO management. A sitemap should organize web pages in a hierarchy that mirrors their importance and relevance to the site’s overall theme and desired user journey.

Achieving optimal site structure within a sitemap necessitates deliberate orchestration of its contents. Proper site structure ensures that search engines prioritize the crawling and indexing of a site’s most pertinent pages:

- Strategically categorizing pages to reflect their significance in the site hierarchy.

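- Using the optional priority element within the sitemap to indicate the relative importance of pages, keeping in mind that it is only a hint and that some search engines, Google among them, ignore it.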

- Regular updates to the sitemap to incorporate new pages and account for any removed content.

Submitting and Testing Your XML Sitemap

Submitting an XML sitemap to search engines is a decisive step towards ensuring all content is available for crawling and indexing. LinkGraph’s SEO services facilitate this key procedure, delivering sitemaps promptly through search engine webmaster consoles so that the website’s URLs can be discovered and processed without delay.

LinkGraph recognizes the importance of vigilance in sitemap submission, initiating rigorous testing to confirm that search engines correctly parse and utilize the sitemap’s information. Through this careful verification, any issues can be swiftly identified and rectified, enhancing the likelihood of optimal search engine rankings and improved SEO performance.
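Part of this testing can happen before the sitemap is ever submitted. The sketch below runs a few basic sanity checks on a sitemap file; `check_sitemap` is a hypothetical helper, and the checks shown (absolute URLs, no duplicates, the 50,000-URL per-file limit from the sitemaps.org protocol) are a starting point rather than a complete validator.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50000  # per-file limit in the sitemaps.org protocol


def check_sitemap(xml_bytes):
    """Return a list of problems found in a sitemap document."""
    root = ET.fromstring(xml_bytes)
    locs = [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", NS)]
    problems = []
    if not locs:
        problems.append("no <loc> entries found")
    if len(locs) > MAX_URLS:
        problems.append("more than %d URLs in one file" % MAX_URLS)
    seen = set()
    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append("not an absolute URL: %r" % loc)
        if loc in seen:
            problems.append("duplicate URL: %s" % loc)
        seen.add(loc)
    return problems
```

An empty result does not guarantee that a search engine will accept the file, so the processing report in the relevant webmaster console remains the final check.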

Ensuring robots.txt Facilitates Optimal Crawling

In the complex domain of SEO, the robots.txt file acts as a gatekeeper, guiding search engine crawlers through the vast corridors of a website’s content.

Properly adjusting this critical component is crucial for ensuring that vital site pages are accessible for indexing, while non-essential sections remain off-limits, thereby streamlining the crawling process.

This segment delves into methods for analyzing, refining, and testing the robots.txt file, each step a tactical move to optimize the delicate dialogue between a website and the search engines that hold the keys to its visibility.

Analyzing the Current State of Your robots.txt File

Robust SEO strategies necessitate an examination of the robots.txt file to ascertain its alignment with the website’s overall SEO objectives. LinkGraph’s SEO services ensure close inspection, identifying any directives within the file that may inadvertently bar search engine crawlers from accessing key site content, a practice that could undermine a website’s search visibility and effectiveness.

A detailed analysis of the robots.txt file is foundational for any SEO regimen, aiming to optimize the directives such that they neither overexpose the site to crawlers nor hide significant pages. LinkGraph adeptly conducts this analysis, ensuring the robots.txt file strikes the delicate balance between accessibility and keeping crawlers away from low-value areas of the site, thus refining crawling efficiency and SEO outcomes.

Adjusting Your robots.txt to Improve Search Engine Access

Adjusting the robots.txt file is a strategic necessity to grant search engines optimal access to a website’s content while steering crawlers away from sections that do not need to be crawled. Because robots.txt is publicly readable and only advisory, it is not a substitute for access controls on genuinely private content. LinkGraph’s SEO services facilitate this critical adjustment, enhancing the dialogue between a site’s architecture and search engine crawlers to promote effective indexing.

Refinements to the robots.txt file must be meticulously strategized to prevent inadvertent blocking of valuable content as well as the unnecessary exposure of sensitive areas:

- Strategically allowing or disallowing user-agents to direct search engine crawlers.

- Specifying sitemap locations to direct crawlers toward the most crucial content for indexing.

- Regular reviews and updates to the robots.txt in response to changes in site structure or search engine behavior.

Testing robots.txt Changes and Their Effect on SEO

Testing the modifications to a robots.txt file is essential for ensuring they yield the desired SEO impact. Through validation tools, LinkGraph’s SEO professionals can identify whether search engine crawlers are correctly interpreting the instructions, ensuring the appropriate pages are crawled and indexed for robust search engine visibility.

When LinkGraph’s experts implement changes to the robots.txt file, rigorous testing follows to ascertain their effect on a site’s SEO landscape:

- Investigation of crawler behavior to confirm access to the correct pages.

- Monitoring of site traffic to detect any shifts that may result from the updates.

- Evaluation of page indexing statuses within search console tools to ensure no valuable content is unintentionally excluded.

Such critical assessments form part of a comprehensive SEO strategy, allowing for the fine-tuning of crawl directives to maximize website performance on search engine results pages. LinkGraph’s meticulous approach facilitates informed decisions that strengthen a website’s SEO footprint.
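A proposed robots.txt can also be checked locally before it goes live. The sketch below uses `urllib.robotparser` from the Python standard library; the rules in `ROBOTS_TXT`, the example.com URLs, and the helper name `blocked_urls` are illustrative assumptions. Python’s parser applies the first matching rule, whereas some search engines use the most specific match, so results for overlapping Allow and Disallow rules may differ from a given crawler’s behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules would block for a user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    important = ["https://example.com/", "https://example.com/blog/post"]
    # An empty list means none of the important pages are blocked.
    print(blocked_urls(ROBOTS_TXT, important))
```

Keeping a list of must-crawl URLs and running it through a check like this after every robots.txt change is a cheap guard against accidentally blocking valuable content.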

Revamping Page Speeds to Boost SEO Rankings

In the digital age, a website’s loading speed is a critical determinant of its online success, influencing both user satisfaction and search rankings.

An expeditious page load speed is no longer a convenience but a necessity, as users demand quick access, and search engines reward sites that deliver it.

Recognizing the multifaceted impact of swift loading times, the subsequent exploration addresses the essential processes of auditing site speed, unveiling factors that contribute to latency, and applying strategic modifications to enhance overall performance.

By elevating page speed, businesses can significantly sharpen their competitive edge, delivering an expedited user experience that aligns with top digital marketing standards and SEO best practices.

Auditing Your Site’s Loading Speed

An audit of a site’s loading speed is a foundational step that LinkGraph’s SEO services undertake to pinpoint elements impacting site performance. By harnessing sophisticated tools, the process reveals the extent to which page load speed contributes to a site’s SEO rankings and user satisfaction.

Following the audit, a data-driven approach guides the optimization of a website’s speed metrics. LinkGraph delves into the analytics, mapping out a pathway to enhance site responsiveness and loading efficiency:

| Speed Metric | Standard Benchmark | Implications for SEO |
|---|---|---|
| First Contentful Paint (FCP) | Under 1 Second | Improved User Engagement |
| Time to Interactive (TTI) | Under 5 Seconds | Better User Experience and Potential Higher Rankings |
| Total Blocking Time (TBT) | Less than 300ms | Reduced User Frustration and Bounce Rate |

Within this strategic phase, LinkGraph meticulously addresses various technical elements to streamline the user’s journey, thereby reinforcing the website’s stance in the competitive SEO landscape.
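Once measurements are available from a speed-testing tool, comparing them against the benchmarks in the table above is simple arithmetic. The sketch below assumes the measurements have already been collected in milliseconds; `BENCHMARKS_MS` mirrors the table’s figures, and `over_budget` is a hypothetical helper name.

```python
# Thresholds copied from the table above, converted to milliseconds.
BENCHMARKS_MS = {
    "FCP": 1000,  # First Contentful Paint: under 1 second
    "TTI": 5000,  # Time to Interactive: under 5 seconds
    "TBT": 300,   # Total Blocking Time: less than 300 ms
}


def over_budget(measurements_ms):
    """Return the metrics whose measured value is not under its benchmark."""
    return {
        name: value
        for name, value in measurements_ms.items()
        if name in BENCHMARKS_MS and value >= BENCHMARKS_MS[name]
    }
```

Tracking the output of a check like this over time shows whether optimization work is actually moving each metric under its target.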

Identifying Factors That Slow Down Your Website

LinkGraph’s SEO services extend their expertise to detect the multitude of variables that impede website responsiveness. Among these, oversized images and inefficient code stand out as common culprits, requiring in-depth technical audits to identify and address these speed-defying roadblocks.

For comprehensive site performance optimization, LinkGraph scrutinizes external scripts and plugins which frequently contribute to prolonged loading times. This scrutiny equips site owners with the necessary insights to prune excess weight from their digital assets, leading to more rapid page rendering and an enhanced user experience.

Implementing Changes to Improve Overall Page Speed

In response to the findings of a comprehensive site speed audit, LinkGraph’s SEO services spring into action, implementing targeted changes to bolster a website’s page load speed. This could include optimizing images for quicker loading, leveraging browser caching, and refining server response times to ensure a seamless user experience and a potential uptick in SEO rankings.

Finding harmony between design and functionality, LinkGraph’s SEO experts meticulously refactor code, remove render-blocking JavaScript, and minify CSS files. Such modifications are engineered to minimize load times and deliver content to users with efficiency, directly contributing to improved site performance in search engine assessments.

Mitigating the Impact of Duplicate Content on SEO

The digital landscape is rife with challenges that threaten the integrity of SEO efforts, and one of the most pervasive issues is the presence of duplicate content.

A common and often inadvertent SEO problem, duplicate content can dilute the ranking potential of web pages, create confusion for search engines, and sabotage the user experience.

With a focus on nurturing SEO health, identifying instances of duplicate content becomes a procedural necessity for webmasters.

Strategies for resolving duplicate content issues require tactical finesse, while the strategic implementation of canonical tags offers a salve for managing similar content without compromising SEO performance.

Vigilance in these areas ensures that content remains unique and authoritative, fortifying its ability to rank meaningfully on search engine results pages.

Identifying Instances of Duplicate Content

LinkGraph’s meticulous SEO services shine a light on the shadowy issue of duplicate content, a pervasive concern that threatens the SEO performance of countless websites. Their seasoned experts utilize sophisticated tools and a robust SEO strategy to systematically detect and catalogue instances where content repetition may detract from website uniqueness and search relevance.

Through precise analysis, LinkGraph identifies areas where the same content appears across multiple URLs, a situation that can confuse search engines and split ranking signals such as backlinks between competing versions, thus weakening a site’s SEO power. Resolving these issues is foundational to maintaining clear, authoritative signals to search engines, enhancing the visibility and ranking potential of the client’s digital content.
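Exact and near-exact duplicates across URLs can be found by fingerprinting each page’s text. The following is a minimal sketch of that idea, not a description of LinkGraph’s tooling: it assumes the main body text of each page has already been extracted, and the helper names are hypothetical. It only catches text that is identical after whitespace and case normalization; detecting loosely similar pages would need a fuzzier technique.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """pages maps URL -> body text; returns groups of URLs sharing the same text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Each returned group is a candidate for consolidation through a redirect or a canonical tag.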

Strategies for Resolving Duplicate Content Issues

LinkGraph’s SEO services adeptly tackle the thorny issue of duplicate content, offering strategic solutions designed to preserve the integrity of a website’s SEO standings. By implementing 301 redirects, their experts effectively consolidate multiple pieces of similar content, directing all user and search engine traffic to a single, authoritative URL, which enhances the clarity of the site’s relevance signals to search engines.

Furthermore, the application of canonical tags is a pivotal strategy employed by LinkGraph to manage content that is intentionally similar or syndicated. This approach conveys explicit directives to search engines about the preferred version of a web page, securing the deserved search equity and eliminating harmful effects on the site’s SEO performance.
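When several rounds of consolidation have happened, redirects can end up chained (A to B, B to C), which adds hops for both users and crawlers. The sketch below flattens a redirect map so every old URL points directly at its final destination. The mapping format and the name `resolve_redirects` are assumptions for illustration; how the flattened map is applied depends on the web server in use.

```python
def resolve_redirects(redirects, max_hops=10):
    """Flatten an {old_url: new_url} map so each source points at its final target."""
    flattened = {}
    for source in redirects:
        target, hops = redirects[source], 1
        seen = {source}
        # Follow the chain until the target is no longer redirected itself.
        while target in redirects:
            if target in seen or hops >= max_hops:
                raise ValueError("redirect loop or chain too long at %s" % source)
            seen.add(target)
            target = redirects[target]
            hops += 1
        flattened[source] = target
    return flattened
```

A raised `ValueError` points at a loop or an unusually long chain, both of which are worth fixing before the rules are deployed.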

Using Canonical Tags to Manage Similar Content

In the intricate maze of SEO, canonical tags emerge as a beacon of clarity amidst the murkiness of similar or duplicate content. These HTML link elements, marked with rel="canonical", serve as a signal to search engines, indicating the “master copy” of a page to be indexed, thereby warding off potential search ranking issues:

- LinkGraph’s SEO services strategically employ canonical tags to consolidate link equity across duplicate versions of content.

- By designating an authoritative source URL, these tags guide search engines to attribute all relevant metrics to a single, preferred webpage.

- Such precision ensures a unified, powerful presence on search engine results pages, bolstering the integrity of the website’s SEO profile.

Hand in hand with thorough content audits, LinkGraph’s utilization of canonical tags stands out as a surgically precise solution. Their application guarantees that a website’s content hierarchy remains pristine and effectively communicates the intent to search engines, fortifying the website’s competitive stance in the digital world.
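A quick way to audit canonical tags is to extract them from each page and compare them with the page’s own URL. The sketch below is illustrative only; `CanonicalParser`, `canonical_status`, and the status labels are hypothetical, and the comparison is a plain string match that does not normalize trailing slashes or letter case.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_status(page_url, html):
    """Classify a page as 'missing', 'conflicting', 'self', or 'points elsewhere'."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "conflicting"
    return "self" if parser.canonicals[0] == page_url else "points elsewhere"
```

Pages reported as "conflicting" deserve attention first, since contradictory canonical hints leave the choice of preferred URL to the search engine.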

Leveraging Structured Data for Enhanced SEO Benefits

In the dynamic process of search engine optimization, structured data stands out as a formidable tool that savvy marketers and site owners use to communicate with search engines more effectively.

By embedding structured data into a website, clarity is added to the site’s content, enabling search engines to categorize and present information in innovative and engaging ways.

This section casts light on the various types of structured data, elucidates the integration process into web infrastructures, and underscores the necessity of monitoring structured data’s influence on SEO performance.

Grasping the gravitas of structured data unlocks new realms of potential for digital entities to stand out on the search engine results page and satisfy user queries with unprecedented precision.

Understanding Different Types of Structured Data

Understanding the various types of structured data is essential for enhancing a website’s communication with search engines. Embracing schema markup, for instance, equips search engines with explicit context regarding a page’s content, allowing for more accurate and richly featured snippets in search results.

Structured data types range from articles, local businesses, and events to products and recipes, each providing unique opportunities to improve visibility and user engagement on the search engine results page:

- Article schema highlights key elements of news or blog posts to stand out in search results.

- Local business schema optimally showcases business details like address, hours, and reviews.

- Event schema helps users discover events directly through search results with essential details.

- Product schema allows ecommerce sites to display pricing, availability, and ratings prominently.

- Recipe schema attracts cooking enthusiasts with structured data like ingredients and cook times.

Integrating Structured Data Into Your Website

Integrating structured data into a website is a precise undertaking, beginning with the selection of relevant schema markup depending on the industry and the content’s nature. LinkGraph’s SEO services facilitate this integration by employing the appropriate JSON-LD or Microdata formats, ensuring that the structured data is implemented correctly and efficiently into a site’s codebase, paving the way for enhanced search visibility.
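As an illustration of the JSON-LD format mentioned above, the sketch below builds a Product snippet ready to be placed in a page’s HTML. The function name `product_json_ld` and the sample values are assumptions; the property names follow the schema.org Product and Offer types, and individual search engines may require additional properties before showing rich results.

```python
import json


def product_json_ld(name, price, currency, in_stock):
    """Return a <script> element containing schema.org Product markup."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": "%.2f" % price,
            "priceCurrency": currency,
            "availability": "https://schema.org/" + availability,
        },
    }
    # Escape "</" so user-supplied text cannot close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n%s\n</script>' % payload


if __name__ == "__main__":
    print(product_json_ld("Trail Running Shoe", 89.5, "USD", True))
```

Generating the markup from the same data that renders the visible page helps keep the structured data consistent with what users actually see.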

To corroborate that structured data is functioning as intended, LinkGraph conducts a meticulous validation process using tools provided by search engines:

- Testing structured data with validation tools ensures search engines correctly interpret the markup.

- Monitoring the performance impact post-integration confirms the efficacy of structured data in improving SERP features.

- Continual updates to the structured data schema keep it aligned with the latest search engine guidelines and best practices.

This thorough deployment and ongoing refinement of structured data form a cornerstone of successful SEO strategies, and with LinkGraph’s expert handling, clients can expect their content to be showcased accurately and compellingly on the search engine results page.
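Alongside the validation tools that search engines provide, a lightweight local check can catch broken markup early. The sketch below extracts JSON-LD blocks from a page and reports ones that fail to parse or lack basic keys. `JsonLdExtractor` and `check_json_ld` are hypothetical names, and the required keys are a minimal assumption; this is not a replacement for a full schema validator.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every application/ld+json script block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_json_ld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_json_ld = False

    def handle_data(self, data):
        if self._in_json_ld:
            self.blocks[-1] += data


def check_json_ld(html, required=("@context", "@type")):
    """Return a list of problems found in a page's JSON-LD blocks."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    problems = []
    for index, raw in enumerate(extractor.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as error:
            problems.append("block %d: invalid JSON (%s)" % (index, error.msg))
            continue
        if not isinstance(data, dict):
            continue  # arrays of items are valid JSON-LD but skipped in this sketch
        for key in required:
            if key not in data:
                problems.append("block %d: missing %s" % (index, key))
    return problems
```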

Monitoring the Impact of Structured Data on SEO Performance

LinkGraph’s SEO services prioritize the meticulous monitoring of structured data’s impact on SEO performance, ensuring that the integration translates to tangible benefits in search rankings and user engagement. This oversight is critical, as it enables the quick detection of any schema-related issues that could undermine a website’s standing on search engine results pages.

Through active analysis of search performance metrics, LinkGraph empowers website owners to understand the effectiveness of structured data in enhancing their online visibility. Such rigorous monitoring plays an essential role in verifying that structured data continues to serve its purpose of attracting and retaining a targeted user base in the fluid ecosystem of digital search.

Conclusion

Navigating the complex terrain of SEO challenges is crucial for any digital presence aiming to achieve and maintain high search rankings.

Recognizing and addressing common SEO issues such as suboptimal site speed, poorly structured content, and duplicate content is imperative.

LinkGraph ensures thorough site audits to reveal these issues and employs strategic tools and adjustments to enhance site indexability, optimize XML sitemaps, and effectively manage robots.txt files for optimal crawling.

Moreover, ongoing attention to SEO changes and misconceptions, such as keyword stuffing and the fallacy of SEO being a one-time task, ensures that a website’s SEO strategy remains sharp and effective.

By leveraging structured data, websites can communicate more clearly with search engines, leading to improved search results and user experiences.

In sum, a proactive and knowledgeable approach to SEO challenges not only rectifies existing problems but also fortifies a website’s future performance on search engine results pages.