The Google Indexing Coverage Report: Get your Web Pages into Google’s Index

After a search engine spider discovers a web page, it crawls and renders the web page’s content and, if allowed, adds the page to Google’s index. Google has the largest index of any search engine (an estimated 30 to 50 billion web pages), and Google’s amazing indexing power has been a key to the search engine’s success over the past two decades.

But indexing the internet is complex. Web pages are constantly being updated, changed, moved, or removed. Google wants to keep its index up to date, so it regularly recrawls the pages in it to decide whether to keep them, remove them, or, if the content has changed, promote them for a different set of keywords.

As a result, understanding how Google’s indexing process works, particularly for our individual websites, is an important part of SEO. Google cannot rank your web pages if they are not indexed, so understanding which of your pages are indexed, and why or why not, is important to make sure the most valuable, high-quality, and high-converting pages on your website have the potential to show up in search engine results.

So how do you know whether or not Google has indexed the pages of your website? Enter the Google Indexing Coverage Report in your Google Search Console account.

Taking the time to check the Google indexing status for your site provides you with a comprehensive overview of how Google indexes your website’s pages. This article will outline how to access and understand your Google Indexing Coverage Report, list common indexing issues, and offer detailed suggestions for how to resolve them.

What is the Google Index Coverage Report?

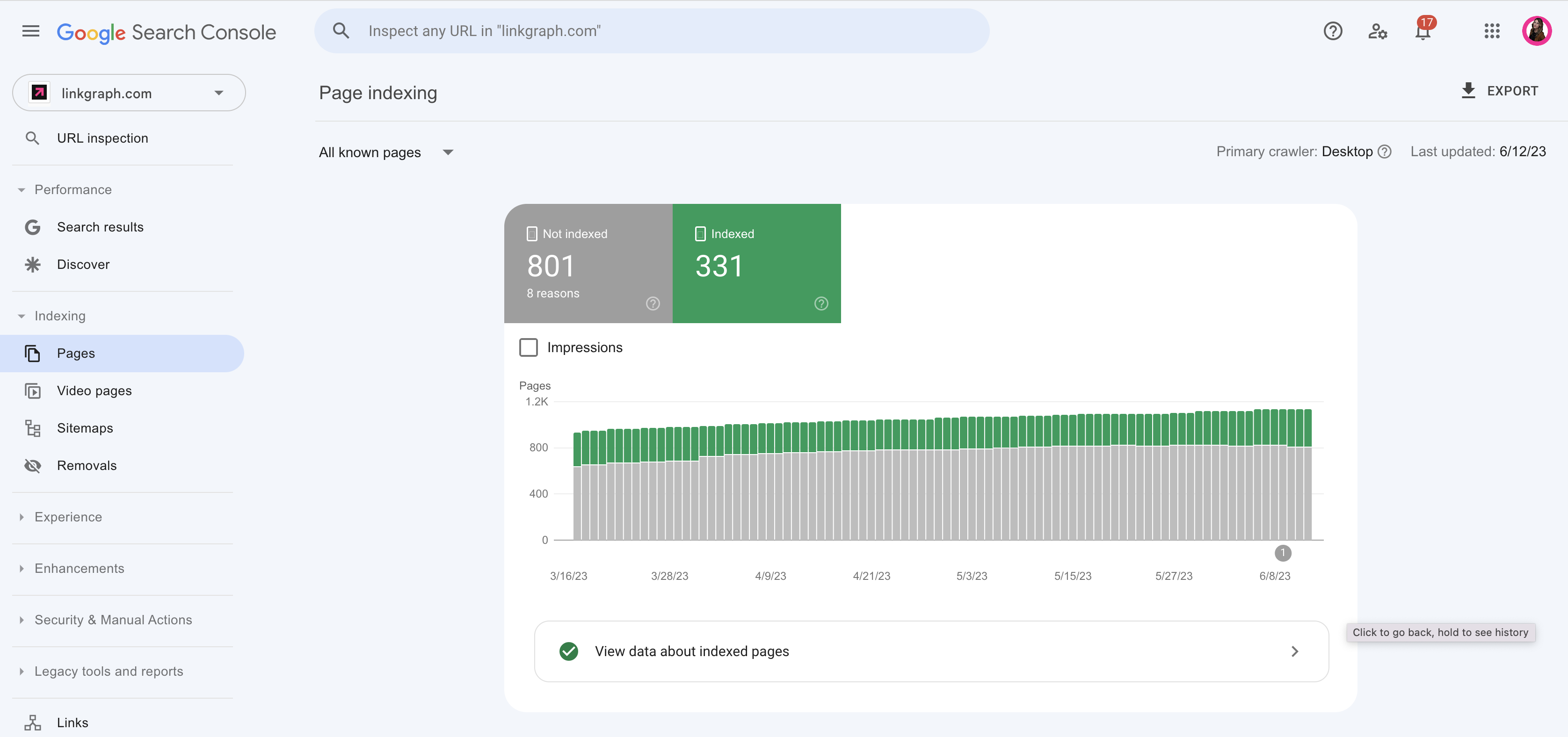

The Google Index Coverage report is a summary of which pages on your website have or have not been indexed and why or why not.

It highlights pages that have been indexed successfully, pages with Google indexing issues, pages that Google has excluded, and pages that have warnings.

The report also includes important information such as the number of indexed pages, crawl issues, and sitemap status. By regularly monitoring the Index Coverage Report, website owners can quickly detect and resolve indexing issues that negatively impact their website’s visibility.

What Should I Use the Google Index Coverage Report For?

Here are a few key ways you can leverage the information you find when you check the indexing status for your site:

Identify indexing issues

When your website has indexing problems, it can hinder crawlers from properly scanning your pages. This can lead to your pages not appearing in search engine results pages (SERPs), thus limiting your website’s visibility.

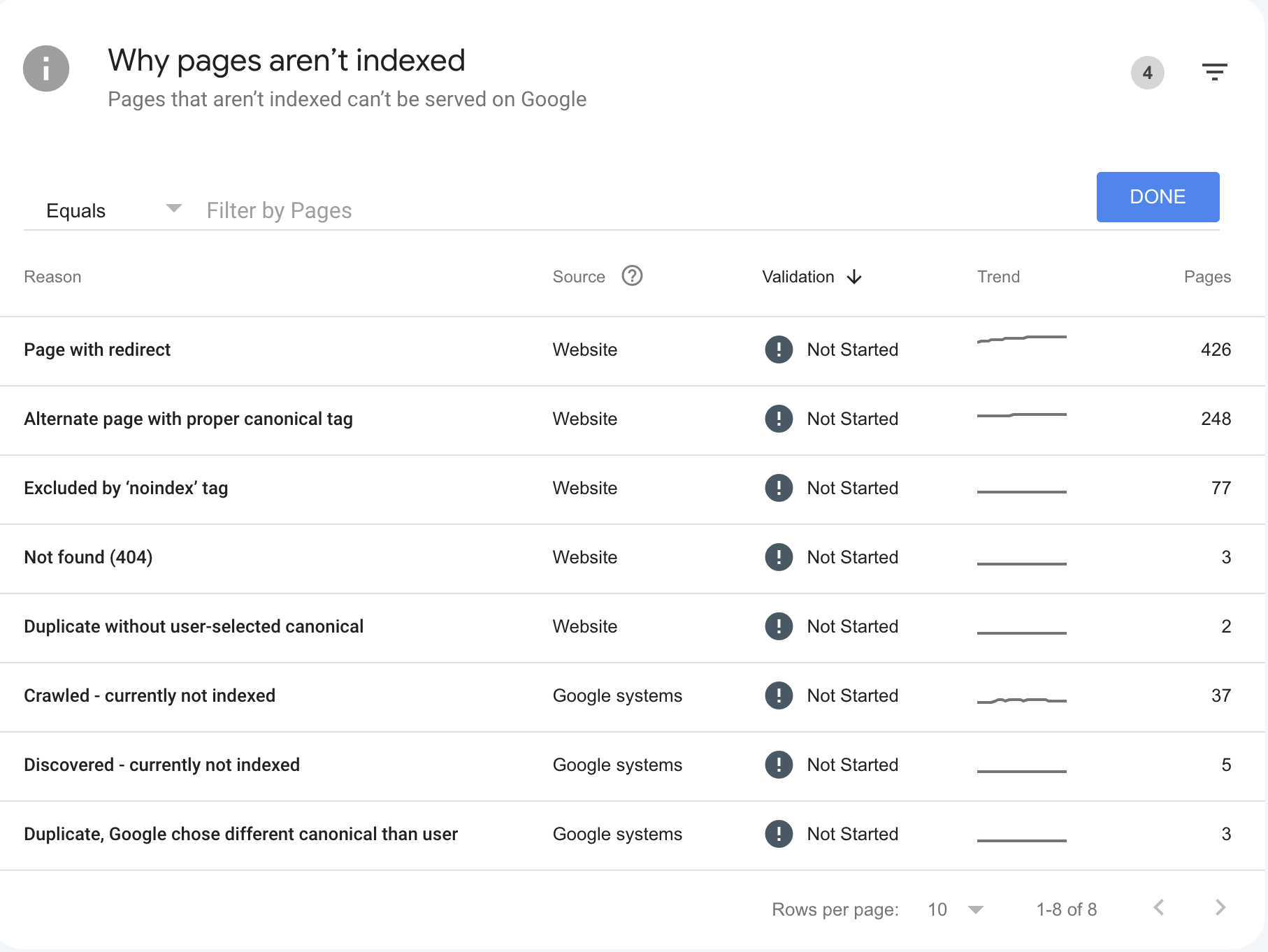

The Google Index Coverage report explains why your web pages aren’t being indexed. Google organizes this table by indexing issue, with a column listing the total number of pages on your website affected by each one.

Uncover crawling patterns

As the owner of a website, you need to understand how Googlebot crawls and interacts with your site to make sure it is being crawled efficiently. Google sets a limited crawl budget for each website. If its crawlers have difficulty crawling your site because of a poor or convoluted structure, you are wasting that budget and delaying the indexing of your important pages.

Evaluate page indexing status

This report will help you determine any potential issues and prioritize your optimization efforts. By reviewing the indexing status of each page, you can understand why some pages may not be appearing in the results. The reasons could range from domain name issues and technical hitches to issues related to content and backlinks.

The report classifies indexing status into four categories:

- Valid: Successful and eligible for search results

- Error: Critical issues that need attention

- Excluded: Pages intentionally excluded or blocked by robots.txt

- Valid with warnings: Pages indexed but with minor issues that may affect their visibility or performance

Monitor changes over time

The Google Indexing Coverage Report allows you to see how many of your website’s pages are scanned, and the reasons why specific pages might be returning errors. When you check Google indexing status, you can track improvements in your website and detect emerging Google indexing issues, such as crawl errors or duplicate content.

For example, if you notice a sudden drop in the number of indexed pages, it could indicate that there’s an issue with your website that needs to be addressed.

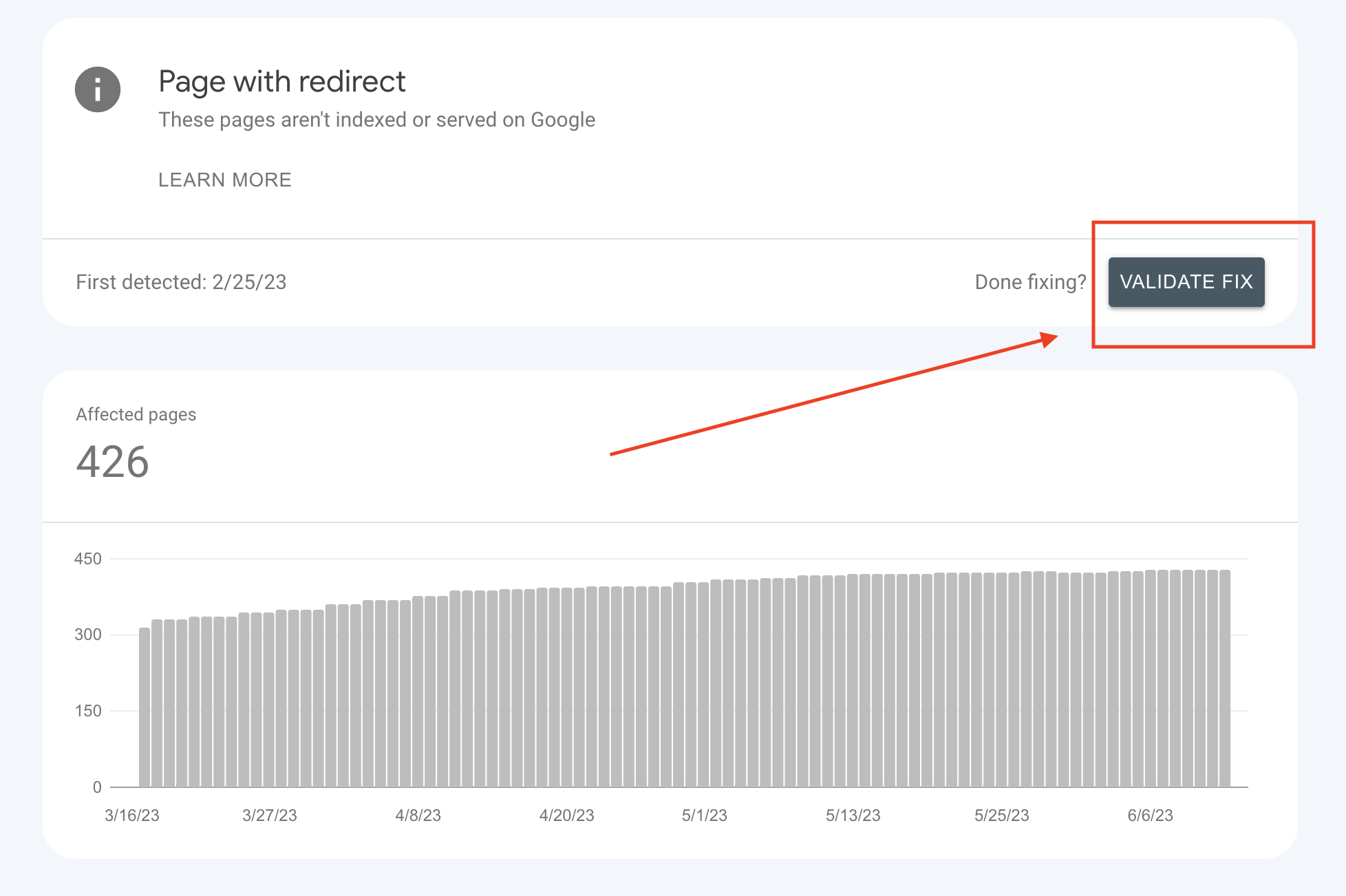
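One way to act on a trend like this is to record the indexed-page count from the report at regular intervals and flag sharp declines. The sketch below assumes you log the counts yourself; the function name, the sample numbers, and the 20% threshold are illustrative choices, not values that come from Google.

```python
def find_sudden_drops(counts, threshold=0.20):
    """Return (position, previous, current) for every period in which the
    count fell by more than `threshold` relative to the previous period."""
    drops = []
    for i in range(1, len(counts)):
        previous, current = counts[i - 1], counts[i]
        if previous > 0 and (previous - current) / previous > threshold:
            drops.append((i, previous, current))
    return drops


# Hypothetical weekly indexed-page counts copied from the report.
weekly_counts = [1200, 1215, 1230, 870, 880]
print(find_sudden_drops(weekly_counts))  # prints [(3, 1230, 870)]
```

A flagged week is only a prompt to open the report and look at which issue category grew; the right threshold depends on how much your page count normally fluctuates.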

Validate fixes

The Google Index Coverage report also allows you to go through a validation process after you resolve any indexing issues.

After you resolve an indexing issue, click the “validate fix” button and Google will go through the process to confirm whether or not the issue has been resolved.

6 Common Google Indexing Issues and How to Resolve Them

Several common indexing issues can occur, leading to lower search rankings, decreased website traffic, and ultimately, loss of revenue. Fortunately, they aren’t impossible to resolve.

1. Crawl errors

Crawl errors can be a headache for any online business owner or digital marketer. They occur when Googlebot, Google’s crawler, experiences difficulty accessing your website’s pages.

This can happen for numerous reasons, including:

- Server errors

- Excessive redirect chains

- Slow-loading pages

When a crawler encounters these issues, it may not be able to access all of your website’s content, leading to lower rankings and fewer organic results. Remember, Google is not going to wait around forever to crawl and render your content, so make sure your website is high-performing and loading fast for users and Google.
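Redirect chains are the easiest of these causes to audit yourself. As a rough sketch, assume you have exported your redirect rules as a mapping of source URL to target URL (the URLs and the `max_hops` default below are hypothetical). The function walks the mapping and returns every URL a crawler would pass through:

```python
def redirect_chain(start_url, redirects, max_hops=10):
    """Follow `redirects` (a dict of source URL -> target URL) from
    `start_url` and return the list of URLs visited, in order."""
    chain = [start_url]
    seen = {start_url}
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:  # redirect loop: stop instead of spinning forever
            break
        seen.add(target)
    return chain


# Hypothetical redirect rules exported from a server config.
rules = {
    "http://example.com/old": "https://example.com/old",
    "https://example.com/old": "https://example.com/new",
    "https://example.com/new": "https://example.com/new/",
}
chain = redirect_chain("http://example.com/old", rules)
print(f"{len(chain) - 1} hops: " + " -> ".join(chain))
```

Any chain longer than one hop is a candidate for pointing the first URL straight at the final destination.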

2. Soft 404 errors

These errors occur when a page that should return a “404 Not Found” HTTP status code, indicating that the requested page does not exist, is incorrectly identified as a valid page.

This can happen if your website returns a standard 200 status code instead, which suggests that the page does exist. The result is confusing for users who are expecting to see a “404 Not Found” error message.

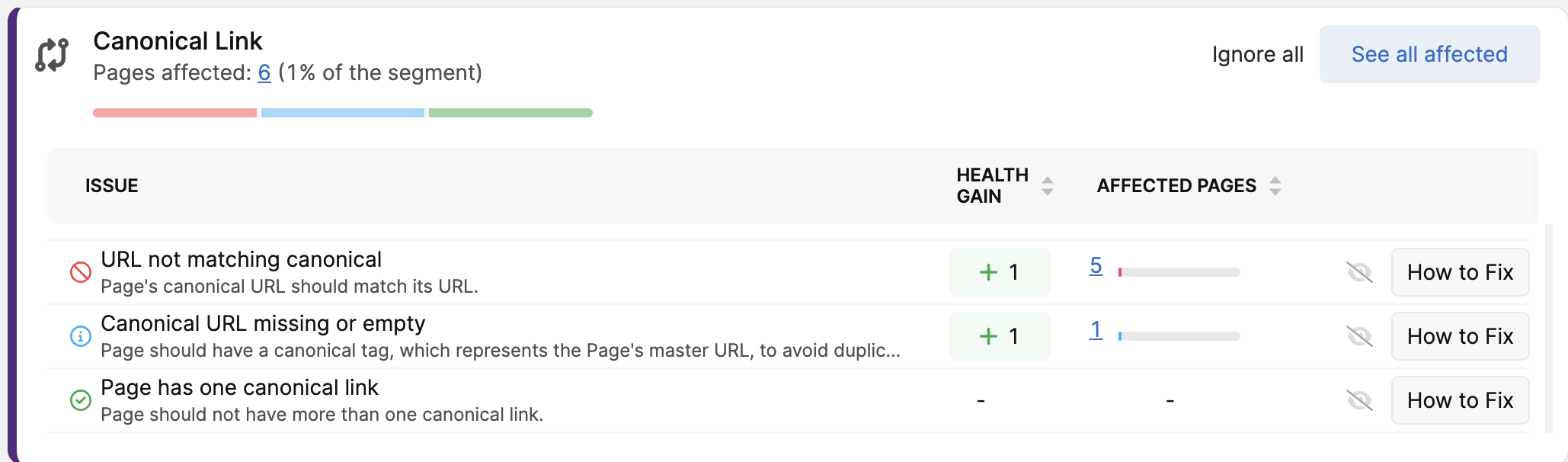
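To spot likely soft 404s on your own site, you can compare the status code a URL returns with what the page actually says. The check below is a rough heuristic, not Google’s detection method (which is not public). The phrase list and function name are assumptions for illustration, and the status code and page text are passed in so the example runs without a network connection.

```python
# Phrases that suggest an error page; extend this list for your own templates.
NOT_FOUND_PHRASES = ("page not found", "does not exist", "no longer available")


def looks_like_soft_404(status_code, body_text):
    """Heuristic: a 200 response whose visible text reads like an error page."""
    if status_code != 200:
        return False  # a real 404 or 410 is already reported correctly
    text = body_text.lower()
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)


print(looks_like_soft_404(200, "Sorry, this page does not exist."))
print(looks_like_soft_404(404, "Sorry, this page does not exist."))
```

The fix for a flagged URL is usually on the server side: return a real 404 (or 410) for missing pages, or redirect to a relevant replacement page.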

3. Duplicate content

Duplicate content is another common source of errors in the Google Indexing Coverage Report.

When multiple pages on your website have similar or identical content and do not have proper canonical tags, it can confuse search engines and dilute the visibility of each page. Search engines aim to provide the best user experience, and showing multiple pages with the same content could be confusing and frustrating for the user.

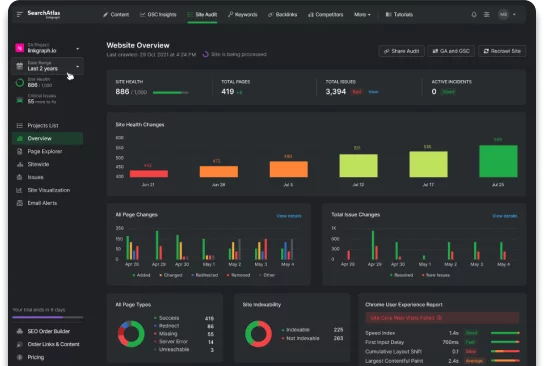

If you’re a Search Atlas user, duplicate content and improper use of canonical tags will be flagged in your site audit report.

With detailed how-to-fix guides, you can resolve this issue quickly and make sure it does not prevent your content from showing up in search results.
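If you want to spot-check canonical tags by hand, a small script can pull them out of a page’s HTML. This is a minimal sketch using Python’s standard library; the class and function names and the sample pages are made up for illustration, and it only reads `<link rel="canonical">` tags, not canonicals sent in HTTP headers.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonicals(html):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical pages with near-identical content.
pages = {
    "https://example.com/shoes":
        '<head><link rel="canonical" href="https://example.com/shoes"></head>',
    "https://example.com/shoes?sort=price":
        "<head><title>Shoes</title></head>",
}
for url, html in pages.items():
    found = find_canonicals(html)
    if len(found) != 1:
        print(f"{url}: expected exactly one canonical tag, found {len(found)}")
```

Here the sorted variant has no canonical tag, so it would be reported as a page that should point back to the main URL.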

4. Blocked resources

Blocked resources are files on your website that crawlers like Googlebot are not allowed to access. These may include JavaScript and CSS files, which are essential in rendering a web page accurately. If the web crawlers cannot access these files, they may struggle to interpret your site’s elements, leading to incomplete rendering and Google indexing issues for your site.

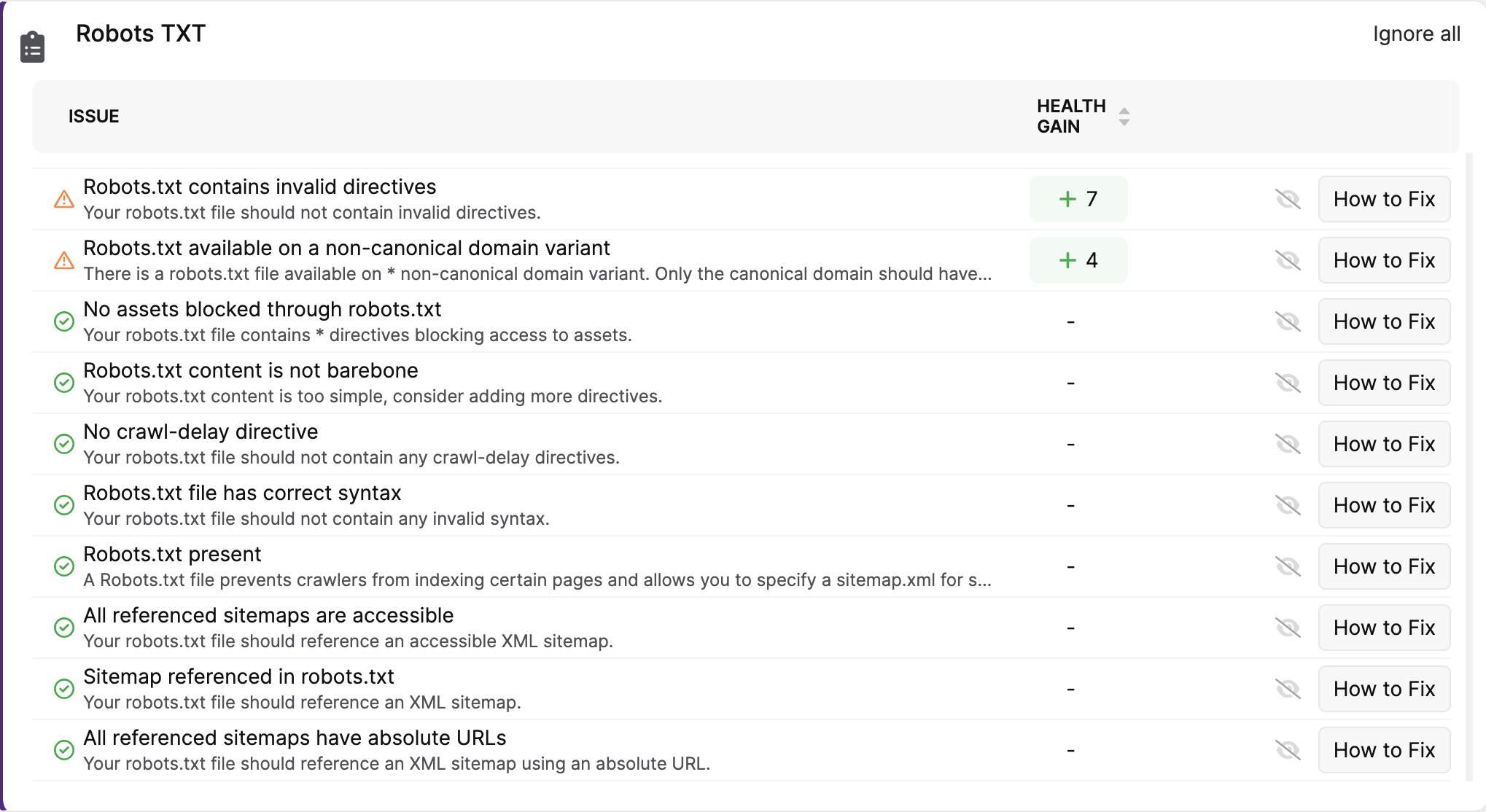
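You can test for blocked resources before Google does by checking your JavaScript and CSS URLs against your robots.txt rules. The sketch below uses Python’s built-in `urllib.robotparser`; the robots.txt content and the resource URLs are hypothetical. One caveat: Python’s parser does simple prefix matching and does not replicate every rule Google applies (wildcards, for instance), so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Return the URLs that `user_agent` may not fetch under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls
            if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that accidentally blocks the script directory.
robots_txt = (
    "User-agent: *\n"
    "Disallow: /assets/js/\n"
)
resources = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
]
print(blocked_resources(robots_txt, resources))
```

Any script or stylesheet that shows up in the output is one Googlebot cannot load when it renders your pages.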

5. Robots.txt and invalid directives

Not all of our web pages need to be in Google’s index, particularly content like “Thank you” or confirmation pages that are shown to users after a purchase or a form submission. Webmasters use robots.txt files and robots directives like “noindex” to tell Google which pages it should not crawl or include in its index.

However, issues often arise in the implementation of robots.txt or robots tags on individual pages. For example, if directives on an individual page conflict with the directives in robots.txt, Google will follow the robots.txt: a page that is blocked there is never crawled, so Google never sees a “noindex” tag placed on it.

These issues are also going to be identified in your site audit report if they are present on your website.
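A common version of this conflict is a page that carries a “noindex” tag but is also blocked in robots.txt, which means the tag is never read. The sketch below checks for that combination. The names are hypothetical, it only looks at robots meta tags (not the `X-Robots-Tag` HTTP header), and whether the page is crawlable is passed in as a plain flag that you would determine from your own robots.txt.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html):
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


def noindex_is_hidden(html, crawl_allowed):
    """True when a page asks for noindex but crawlers are blocked from
    fetching it, so the tag can never be read."""
    return has_noindex(html) and not crawl_allowed


thank_you_page = '<head><meta name="robots" content="noindex, nofollow"></head>'
print(noindex_is_hidden(thank_you_page, crawl_allowed=False))
```

When this returns true, the usual remedy is to remove the robots.txt block so the page can be crawled and the “noindex” tag can take effect.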

6. Sitemap errors

Issues with XML sitemaps can also lead to indexing problems. A sitemap acts as a roadmap for search bots, pointing them toward all the essential pages on your website. However, if your sitemap contains errors or is outdated, it can impede proper analysis by search engines, leading to reduced visibility and lower search rankings.
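Some sitemap errors are easy to catch before you submit the file. The following sketch parses a sitemap with Python’s standard library and reports three basic problems: XML that is not well formed, URLs that are not absolute, and duplicate entries. The function name and the sample sitemap are illustrative, and the checks are far from a complete validation of the sitemap protocol.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace used by the standard sitemap format.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_problems(xml_text):
    """Return a list of human-readable problems found in a sitemap string."""
    try:
        root = ET.fromstring(xml_text.strip())
    except ET.ParseError as error:
        return [f"not well-formed XML: {error}"]
    problems = []
    seen = set()
    for loc in root.iter(f"{SITEMAP_NS}loc"):
        url = (loc.text or "").strip()
        parts = urlparse(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {url!r}")
        elif url in seen:
            problems.append(f"duplicate URL: {url}")
        seen.add(url)
    return problems


# Hypothetical sitemap with one relative URL and one duplicate.
sample = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>/pricing</loc></url>
  <url><loc>https://example.com/</loc></url>
</urlset>
"""
for problem in sitemap_problems(sample):
    print(problem)
```

A natural next step would be to request each listed URL and confirm it returns a 200 status, since sitemaps that list removed or redirected pages are a common cause of the “outdated” problem described above.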

Follow Our Tips for Better Indexing

Identifying these common issues within the Google Indexing Coverage Report and taking steps to resolve them is key to making sure the pages you want indexed get indexed, and quickly.

Remember, just because a web page is added to Google’s index doesn’t mean it is guaranteed to rank. Getting Google to index your web pages is just the first step in SEO, and it will take comprehensive SEO work to reach your target audience effectively.

If you need assistance with technical SEO issues like the above or with improving the quality of your content and backlinks, LinkGraph is here to help! Reach out and book a free strategy session with one of our SEO consultants.