Common JavaScript SEO Issues and How to Fix Them

As SEO is becoming an increasingly important factor for success, developers need to understand the common issues that can arise when coding with JavaScript (JS). Unfortunately, many developers struggle to ensure that their JavaScript-based sites are properly optimized for search engine visibility. Common mistakes can range from missing meta tags to slow page loading speeds, and these issues can make all the difference in how well a website is ranked on search engine results pages (SERPs). Keep reading to learn more about JavaScript SEO as well as how to address any issues.

What is JavaScript SEO?

JavaScript SEO is a type of technical SEO that’s focused on JavaScript optimization. JS is a popular programming language that allows developers to create interactive websites, applications, and mobile experiences.

While JavaScript is a powerful tool for creating great user experiences, it can also cause issues for search engines when implemented incorrectly. JavaScript-heavy websites can also load and perform slowly, which negatively affects the user experience.

How Does JavaScript Impact SEO?

JavaScript directly impacts technical SEO because it affects a website’s functionality. Depending on how it is implemented, it can hinder rendering and slow a site down, or it can support a fast, responsive experience. Incorrect implementation of JavaScript content can be detrimental to your website’s visibility.

Here are some of the main on-page elements that affect search engine optimization:

- Page load times

- Metadata

- Links

- Rendered content

- Lazy-loading images

To rank higher on SERPs, JavaScript content must be optimized for crawling, rendering, and indexing. For Google and other search engines to fully index a website, they need to be able to access and crawl its content.

JavaScript and SEO

JavaScript, however, can present issues for crawlers. Some of the primary issues include:

- JavaScript makes it harder for crawlers to render and understand content: Because JavaScript is a dynamic language that requires extra resources to interpret and execute, search engine crawlers can sometimes fail to properly understand or access the content on a page. As a result, they are unable to index it.

- Too much JavaScript impacts load times: A web page that contains too much JavaScript or very large JS files can take longer to load. In addition to lower rankings, slow loading times can lead to a higher bounce rate, as users are more likely to leave a website that takes too long to load.

- JavaScript can block content from search engine crawlers: Code can hide or limit the content that’s visible to search engines, which can prevent important pages from being indexed and ranked. When a site deliberately shows search engines different content from what users see, this is known as cloaking and can lead to severe penalties from search engines. It’s also vital that you don’t block access to resources such as JavaScript and CSS files. Googlebot needs them to render pages correctly.

Overall, JavaScript SEO involves diagnosing and troubleshooting ranking issues and making sure web pages are discoverable through high-quality internal links. It also involves streamlining the user experience and improving page load times, since both factors directly affect rankings on SERPs.
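One way to spot links that crawlers may not be able to follow is to look for anchors that have no real URL in their `href`. The sketch below is a rough, regex-based check over an HTML string. The function name `findLinksWithoutHref` and the sample markup are hypothetical, and a production audit would use a real HTML parser instead.

```javascript
// Return the opening <a> tags that lack a usable href.
// Crawlers discover pages by following <a href="..."> URLs, so anchors that
// rely only on click handlers are easy for them to miss.
function findLinksWithoutHref(html) {
  const anchorTag = /<a\b([^>]*)>/gi;
  const hrefAttr = /\bhref\s*=\s*["']([^"']*)["']/i;
  const flagged = [];

  for (const match of html.matchAll(anchorTag)) {
    const href = hrefAttr.exec(match[1]);
    const value = href ? href[1].trim() : "";
    const isPlaceholder =
      value === "" ||
      value === "#" ||
      value.toLowerCase().startsWith("javascript:");
    if (isPlaceholder) {
      flagged.push(match[0]);
    }
  }
  return flagged;
}

// Hypothetical navigation markup: only the first link has a crawlable URL.
const sampleNav = `
  <a href="/pricing">Pricing</a>
  <a onclick="go('/blog')">Blog</a>
  <a href="javascript:void(0)">More</a>`;

console.log(findLinksWithoutHref(sampleNav));
```

Any tag this returns is a candidate for a regular `href` that points at the destination page, with the click handler kept as an optional extra.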

How Do I Know If My Website Uses JavaScript?

To determine if your website is using JavaScript, you can use a few different methods.

The most direct way is to view the source code of the website. To do this, right-click on any part of the web page and select “view source” or “view page source.” This opens the source code in a new tab or window. Then press Ctrl + F and search for “javascript” or “<script” to find the script tags and code snippets that reference JavaScript.
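The same check can be automated once you have a page's HTML as a string. The following is a minimal sketch, assuming the source has already been saved or fetched. `findScripts` is a hypothetical helper, and the regular expressions are deliberately simple, so treat the result as a quick estimate.

```javascript
// Summarize the <script> tags found in a page's HTML source.
// Note: inline blocks such as JSON-LD structured data are also <script> tags,
// so a non-zero inline count does not always mean executable JavaScript.
function findScripts(html) {
  const scriptTag = /<script\b([^>]*)>/gi;
  const srcAttr = /\bsrc\s*=\s*["']([^"']+)["']/i;
  const result = { external: [], inlineCount: 0 };

  for (const match of html.matchAll(scriptTag)) {
    const src = srcAttr.exec(match[1]);
    if (src) {
      result.external.push(src[1]); // script loaded from a separate file
    } else {
      result.inlineCount += 1; // code written directly in the page
    }
  }
  return result;
}

// Hypothetical page source with one external and one inline script.
const samplePage = `
  <head>
    <script src="/assets/app.js"></script>
    <script>console.log("hello");</script>
  </head>`;

console.log(findScripts(samplePage));
```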

Another way to determine if a website uses JavaScript is to inspect the website’s elements. If the interface is interactive and responds to user input, this is a strong indication that the website is using JavaScript. Here are some key elements that you can look for:

- Drop-down menus

- Fly-out menus

- Dynamic content

- Pop-ups

- Interactive elements

If you see these types of features on your website, then it’s likely that JavaScript is being used. Lastly, if a website is using a content management system (CMS) such as WordPress or Joomla, JavaScript is likely being used.

How Does Google Handle JavaScript?

Google handles JavaScript by processing the JavaScript code and rendering the content that’s visible to the user. Google’s crawler can access the page’s Document Object Model (DOM) tree and process the code to determine what content is visible.

Here are the three main steps Google follows to handle a webpage and process its JS:

- Crawling: First, Googlebot crawls the URL of each web page. It makes a request to the server, and the server sends the HTML document.

- Rendering: Googlebot then decides what is necessary to render the main content.

- Indexing: After it has identified what is necessary to render the content, Googlebot can then index the HTML.

But how does Google execute this process? For starters, any unexecuted resources have to be processed by Google’s Web Rendering Service (WRS). Googlebot is likely to defer rendering JavaScript until later. Moreover, Google only indexes the rendered HTML once the JavaScript has been executed.

Overall, Google has been successfully crawling and indexing JavaScript for many years, across a reported 130 trillion-plus web pages. However, there are still common JavaScript issues that can arise.

Content that’s entirely dependent on JS may experience a delay in crawling since Googlebot has a crawl budget. This crawl budget is the rate limit that affects how often the bot can crawl a new page. Another hurdle with a lot of JavaScript has to do with the WRS. There is no guarantee that Google will actually execute the JS code that’s in the Web Rendering Service queue. That’s why it’s important to follow best practices when it comes to JavaScript SEO.
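To see which content depends on rendering, you can compare the HTML the server sends with the HTML after JavaScript has run. The sketch below assumes you already have both as strings (for example, the raw response and the rendered HTML from a headless browser or a URL inspection tool). The helper names and sample markup are hypothetical.

```javascript
// Reduce HTML to its visible text: drop scripts, styles, and tags.
function stripTags(html) {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Return the phrases that appear only after JavaScript has rendered the page.
// These are the pieces of content that wait on the rendering step.
function findJsDependentPhrases(initialHtml, renderedHtml, phrases) {
  const initialText = stripTags(initialHtml).toLowerCase();
  const renderedText = stripTags(renderedHtml).toLowerCase();

  return phrases.filter((phrase) => {
    const needle = phrase.toLowerCase();
    return renderedText.includes(needle) && !initialText.includes(needle);
  });
}

// Hypothetical single-page app: the server sends an empty shell.
const initialHtml = '<div id="root"></div><script src="/app.js"></script>';
const renderedHtml =
  '<div id="root"><h1>Blue Widgets</h1><p>Free shipping</p></div>';

console.log(
  findJsDependentPhrases(initialHtml, renderedHtml, [
    "Blue Widgets",
    "Free shipping",
  ])
);
```

If key headings or body copy show up in this list, that content is a candidate for server-side rendering or pre-rendering so it does not wait on the rendering queue.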

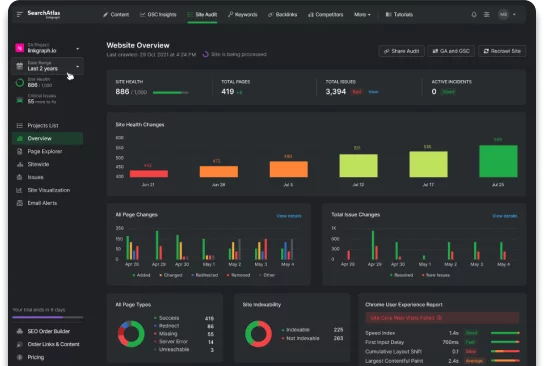

Why Does the Site Auditor Check for JavaScript?

If you are running a report in the Search Atlas site auditor, you may have pages in your report that are flagged due to JavaScript.

Because page performance is so important to ranking, the Search Atlas site auditor will flag pages with extra-large JavaScript files that are slowing down load times and responsiveness.
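The idea of flagging oversized scripts can be illustrated with a few lines of code. This is only a sketch of the general approach and not how the Search Atlas auditor works. The function name, the input shape, and the 150 KB default threshold are all assumptions chosen for the example.

```javascript
// Flag scripts whose size exceeds a chosen budget, largest first.
// `scripts` is assumed to be a list of { url, bytes } records that you have
// collected yourself, for example from your browser's network panel.
function flagLargeScripts(scripts, maxBytes = 150 * 1024) {
  return scripts
    .filter((script) => script.bytes > maxBytes)
    .sort((a, b) => b.bytes - a.bytes)
    .map((script) => ({
      url: script.url,
      kilobytes: Math.round(script.bytes / 1024),
    }));
}

// Hypothetical script sizes for one page.
const pageScripts = [
  { url: "/vendor.js", bytes: 512000 },
  { url: "/app.js", bytes: 40000 },
  { url: "/charts.js", bytes: 204800 },
];

console.log(flagLargeScripts(pageScripts));
```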

Common JavaScript SEO Issues

Poorly written or implemented JavaScript can interfere with a search engine’s ability to crawl and index a website, resulting in pages not appearing in search results as expected. This can lead to a decline in organic traffic, making it harder for businesses to reach their target audience.

Some common JavaScript SEO issues include the following:

- Indexing problems: These can occur if JavaScript is not properly implemented. Search engine crawlers need to be able to access the source code of a website to determine its content and relevance. If JavaScript is not properly configured, crawlers may not be able to access the content and the website may not be indexed.

- Content duplication: This can occur when the same content is rendered by both the server-side and client-side code. Search engines may then index the duplicates, which can hurt rankings. It’s critical to ensure that the content is unique and that there is no duplication.

- Slow loading speeds: JavaScript code can be bulky and can slow down the loading speed of a website. Search engines consider loading speed as a factor in their ranking algorithm, so websites with slow loading speeds may not rank as well as those with fast loading speeds.

- Crawlability: Search engine crawlers need to be able to access the source code of a website to index it. If the code is written in such a way that crawlers cannot access it, then the website may not be indexed. This can result in poor rankings and can prevent the website from appearing in organic search results.

How to Fix Common JavaScript Issues

To optimize your JavaScript files for SEO, you can fix the following issues that are common with JS:

Indexing

When it comes to JavaScript SEO, one of the most important aspects to consider is the structure of your source code.

If you’re using JavaScript, it’s paramount to ensure the code is well-structured and organized. This means:

- Format code properly

- Remove unnecessary characters

- Link external scripts properly

- Minimize the amount of JS used

Content Duplication

To prevent content duplication, webmasters should ensure that each page is served with a unique URL and that dynamic loading is used sparingly.

Sometimes, content duplication can also be caused by third-party services. When a website uses external scripts, such as social media widgets, they can cause the same content to be loaded multiple times.

To prevent this JavaScript SEO issue, webmasters should ensure that external services are loaded asynchronously and that the content is not being re-used across multiple pages.
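Serving each page under one unique URL often comes down to normalizing the variants that scripts and campaigns create. Below is a minimal sketch, assuming that tracking parameters and fragments never change the page content on your site. The parameter list and the trailing-slash rule are assumptions you would adapt, and `canonicalUrl` is a hypothetical helper.

```javascript
// Query parameters assumed to be tracking-only (adjust for your own site).
const TRACKING_PARAMS = [
  "utm_source",
  "utm_medium",
  "utm_campaign",
  "utm_term",
  "utm_content",
  "gclid",
  "fbclid",
];

// Collapse URL variants of the same page into a single canonical form.
function canonicalUrl(rawUrl) {
  const url = new URL(rawUrl); // also lowercases the hostname

  url.hash = ""; // fragments are assumed not to identify separate content
  for (const name of TRACKING_PARAMS) {
    url.searchParams.delete(name);
  }
  url.searchParams.sort(); // stable order for the remaining parameters

  // Assumption: "/shoes/" and "/shoes" serve the same page.
  if (url.pathname.length > 1 && url.pathname.endsWith("/")) {
    url.pathname = url.pathname.slice(0, -1);
  }
  return url.toString();
}

console.log(
  canonicalUrl("https://Example.com/shoes/?utm_source=news&color=red#reviews")
);
// → https://example.com/shoes?color=red
```

The resulting value is the kind of URL you would place in a page's `<link rel="canonical">` tag so that every variant points to the same address.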

Slow Loading Speeds

There are a few common ways to address slow speeds. They include:

- Use the latest version of the language (as well as of any additional libraries that may be needed)

- Use minification techniques to make the JavaScript code as small as possible

- Keep the JavaScript code properly organized

- Separate code into small, manageable chunks, and use appropriate naming conventions

- Use variable names that are relevant to the code they’re used in. This can help reduce clutter and allow for easier navigation

- Ensure any extra resources that are being loaded with the JavaScript are being properly cached. Caching can help reduce the number of requests that need to be made to the server and can help reduce the amount of data that needs to be loaded overall.
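As a rough sketch of the caching point above, a server could choose a `Cache-Control` header based on whether an asset's filename carries a content hash. The function name, the filename pattern, and the durations are illustrative assumptions, not settings taken from any particular tool.

```javascript
// Pick a Cache-Control value for a requested path.
// Assumption: the build step adds a content hash to asset filenames
// (for example "app.3f9a1c2b.js"), so a changed file gets a new URL.
function cacheControlFor(path) {
  const fingerprinted = /[.-][0-9a-f]{8,}\.(?:js|css|woff2|png|jpg|svg)$/i;

  if (fingerprinted.test(path)) {
    // Safe to cache for a year: the URL changes whenever the content does.
    return "public, max-age=31536000, immutable";
  }
  if (/\.(?:js|css)$/i.test(path)) {
    // No hash in the name, so keep the lifetime short.
    return "public, max-age=3600";
  }
  // HTML and everything else: always revalidate with the server.
  return "no-cache";
}

console.log(cacheControlFor("/static/app.3f9a1c2b.js"));
console.log(cacheControlFor("/static/app.js"));
console.log(cacheControlFor("/index.html"));
```

Long-lived caching for hashed files means returning visitors can reuse scripts they have already downloaded, while the short lifetimes elsewhere keep unversioned files from going stale.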

Crawlability

To improve crawlability, it’s best to use progressive enhancement when developing a website. This ensures that all of the content is accessible to search engine crawlers without relying on JavaScript.

Secondly, it’s vital to ensure all JavaScript is minified and compressed. This can help reduce the amount of time that it takes for the crawlers to read and index the content. It’s also important to use a content delivery network (CDN) to ensure all of the content is served quickly and efficiently to search engine crawlers. These steps can help improve crawlability and ensure that search engine results are accurate and up to date.
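Progressive enhancement can be illustrated with a small server-side sketch: the content and links are written into the HTML itself, and a script can later add behavior on top. The function names, the `data-enhance` attribute, and the sample data are hypothetical, and this is one possible shape for the technique among many.

```javascript
// Escape text so it is safe to place inside HTML content or attributes.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the full list on the server, with real links in the markup.
// A crawler that never runs JavaScript still sees every title and URL.
// The data-enhance attribute is a hook that an optional client-side script
// could use to add extras such as filtering.
function renderArticleList(articles) {
  const items = articles
    .map(
      (article) =>
        `  <li><a href="${escapeHtml(article.url)}">${escapeHtml(
          article.title
        )}</a></li>`
    )
    .join("\n");
  return `<ul id="articles" data-enhance="filter">\n${items}\n</ul>`;
}

console.log(
  renderArticleList([
    { url: "/javascript-seo", title: "JavaScript & SEO" },
    { url: "/page-speed", title: "Page Speed Basics" },
  ])
);
```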

Conclusion

Taking the time to optimize JavaScript for SEO can help improve the organic visibility of a website. If you need assistance, make sure to book a meeting with one of our technical SEO experts to learn how LinkGraph can help you optimize your web pages for better SERP performance.