Multivariate vs. AB Testing


Understanding the Differences: Multivariate vs. AB Testing

In the constantly evolving landscape of digital marketing, understanding user behavior is key to optimizing conversion rates and enhancing the customer experience.

Multivariate testing and AB testing are two potent methods that marketers employ to gauge the performance of various web page elements and pinpoint what resonates best with visitors.

While multivariate tests dissect page designs to understand how different elements interact, AB testing compares distinct page versions altogether.

Each method has distinct advantages and intricate nuances that can dramatically impact the trajectory of a digital marketing strategy.

Keep reading as we unpack the intricacies and nuances of multivariate versus AB testing, empowering you to make informed decisions for your campaigns.

Key Takeaways

- Multivariate testing analyzes multiple variables on a web page to understand their collective impact on user behavior.

- AB testing focuses on a single variable change to determine its direct effect on user engagement and conversion rates.

- Multivariate testing requires high traffic volumes and more resources to be effective because of its complexity.

- AB testing is suited to quick, actionable results and can work with lower traffic volumes and fewer resources.

- Both testing methods are critical in digital marketing for optimizing user experience and maximizing conversions.

Multivariate Testing Explained

Within the realm of digital marketing, a clear understanding of various website optimization strategies is key for fostering significant improvements in user experience and conversion rates.

Multivariate testing stands out as a sophisticated method to discern the effectiveness of page design elements and their combined impact on visitor behavior.

Unlike its counterpart, AB testing, which compares two contrasting versions of a single page, multivariate testing delves deeper.

It evaluates multiple variables simultaneously, showing product managers, marketers, and developers how those variables interact (interaction effects) and how these interactions influence customer decisions.

By exploring this testing approach, organizations gain insights into the nuances of user engagement, thereby empowering them to craft a more compelling and tailored customer journey.

This subsection aims to clarify multivariate testing, detailing its core components, appropriate application scenarios, inherent advantages, and potential constraints, thus equipping digital professionals with a robust framework for tactical site optimization.

Defining Multivariate Testing in Simple Terms

Multivariate testing, commonly referred to as MVT, is essentially a technique that allows marketers and developers to simultaneously test multiple variables on a web page. By changing various elements like headlines, images, and call-to-action (CTA) buttons, MVT provides a comprehensive overview of how each component interacts and influences the overall user experience and conversion rate.

This method evaluates the performance of different combinations of page elements, showing stakeholders which combination resonates best with visitors. It goes beyond the simplicity of AB testing by offering richer data, enabling organizations to fine-tune their web pages with precision and thus enhance user engagement and satisfaction.
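To see why multivariate tests grow quickly, it helps to enumerate the combinations. The sketch below assumes a full-factorial design, in which every variation of every element is paired with every variation of the others; the element names and copy are hypothetical placeholders. Fractional-factorial designs, which test only a chosen subset of combinations, are a common alternative not shown here.

```python
from itertools import product

# Hypothetical test plan: each page element (factor) lists the variations to try.
# The element names and copy are illustrative placeholders, not real page data.
factors = {
    "headline": ["Save time today", "Work smarter"],
    "hero_image": ["team_photo", "product_shot"],
    "cta_text": ["Start free trial", "Get a demo", "See pricing"],
}


def full_factorial(factors: dict) -> list:
    """Return every page version: one variation chosen per factor."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


combinations = full_factorial(factors)
print(len(combinations))  # 2 * 2 * 3 = 12 page versions
print(combinations[0])
```

Adding a single extra headline variation here would raise the count from 12 to 18 versions, each of which needs its own share of traffic.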

Key Components of Multivariate Testing

At the core of multivariate testing are key components that ensure its effectiveness as a testing method. Central to this approach are the variables, which are the distinct page elements such as images, CTAs, and text, that one seeks to optimize.

Each variable is given several variations, revealing how the variables collectively influence user behavior and website performance metrics such as bounce rate or conversion rate. This process relies on an adequate sample size and sound statistical analysis, which help identify the most impactful design changes:

- Identification and creation of variables for testing.

- Development of variations for each variable.

- Selection of a sample size large enough to reach statistical significance.

- Execution of the test in a controlled environment, with visitors randomly assigned to combinations.

- Employment of sophisticated analytics for data interpretation.

- Application of test results to optimize the overall page design.

When to Use Multivariate Testing

Multivariate testing is ideally employed when digital marketing professionals are faced with complex website layouts and a multitude of interactive elements whose effects on user behavior must be understood in tandem. It is particularly useful for intricate pages, like e-commerce product pages or landing pages with various calls to action, where the synergistic effect of multiple design elements on conversion rates can be profound.

Moreover, this testing strategy suits teams whose sites attract enough traffic to yield robust data. Multivariate testing requires significant traffic to achieve statistical significance, making it a prime choice for high-traffic sites seeking granular insights into how visitors interact with a page and which combinations drive the highest conversions.

Multivariate Testing Strengths

Multivariate testing shines in its ability to provide intricate data about the collective impact of complex page elements on user experience. Its strengths lie in the depth of insights gleaned from the interaction between variables, offering clear direction for incremental design improvements that can lead to significant uplifts in engagement and conversion.

Unlike simpler methods, multivariate testing uses factorial designs to measure the performance of many combinations of components, enabling organizations to pinpoint the most effective version of a page. This capacity for nuanced optimization makes it an invaluable tool for marketers intent on refining web pages to match customer preferences.

Multivariate Testing Limitations

One of the primary limitations of multivariate testing is its need for high volumes of traffic to achieve statistical significance. Because the method evaluates numerous combinations, and traffic is split among all of them, a large number of visitors is required to distinguish clear winners from the many tested combinations:

- Accumulation of adequate traffic to ensure each variation reaches a valid sample size.

- Extended test durations while the necessary data accumulates.

Additionally, multivariate testing can be resource-intensive, demanding considerable involvement from both the technical and creative teams. The development and deployment of numerous test variations often require substantial time and effort, which can strain an organization’s operational capacity and delay the optimization process.

AB Testing and Its Fundamentals

Amid the diverse tactics of website optimization, AB testing stands as a cornerstone of digital marketing, in sharp contrast to the intricate multivariate approach.

Characterized by its comparative simplicity, AB testing is a controlled experiment that compares two versions of a page to measure their effect on visitor engagement and conversions.

This streamlined method allows marketers and product teams to establish a baseline understanding of user experience decisions, serving as the gateway to more granular examinations of customer interactions.

As digital professionals tailor their strategies, AB testing provides a foundation for incremental enhancement, from the seamless integration of content to the deployment of targeted calls to action.

This section delves into the fabric of AB testing, from its fundamental concepts to scenarios ideal for its application, and weighs its distinct advantages against the limitations that accompany its design.

The Concept of AB Testing

In stark contrast to the complex nature of multivariate testing, AB testing provides a straightforward approach: one element is modified across two versions of a web page to see which performs better. This method simplifies the process of optimization by isolating a single variable, making it easier to attribute changes in user behavior directly to that variable.

As a result, AB testing serves as an accessible entry point for organizations aiming to enhance their digital presence while maintaining a clear line of sight on the cause-and-effect relationship within their experimentation. It stands as a preferred starting point for conversion rate optimization, particularly when deciding on the initial design directions.

| Testing Method | Complexity | Variables Tested | Preferred Scenario |
|---|---|---|---|
| Multivariate Testing | High | Multiple | High-traffic pages with many interactive elements |
| AB Testing | Low | Single | Initial optimization, simpler page layouts |

Core Elements of AB Testing

The core elements of AB testing revolve around isolating and analyzing a single change to discern its effect on user actions. This reductionist approach enables marketers and developers to pinpoint the influence of individual page components on visitor behavior, clarifying how adjustments in page layout or copy can raise conversion rates.

In essence, the AB testing framework prescribes a controlled experiment in which two variants, typically the original (the control) and the modified version, are shown at the same time to comparable, randomly assigned audience segments. The comparative data gathered from these parallel streams provides actionable intelligence, guiding teams as they optimize digital strategies for better user experience and business outcomes.
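One common way to keep the two audience segments comparable is to assign each visitor to a variant at random but consistently. The sketch below assumes visitors carry a stable identifier (for example a first-party cookie ID) and uses a SHA-256 hash; the function name, visitor IDs, and experiment label are hypothetical, and commercial testing tools implement their own assignment logic.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant of a named experiment.

    Hashing experiment + user ID gives a stable, roughly uniform split:
    the same visitor always sees the same version, and different
    experiments split users largely independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Usage with synthetic visitor IDs (illustrative only).
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "homepage-headline-test")] += 1
print(counts)  # close to a 50/50 split
```

Hashing rather than calling a random number generator on every visit means returning visitors do not flip between versions, which would otherwise blur the comparison.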

Scenarios Ideal for AB Testing

AB testing finds its sweet spot when simplicity is paramount and quick, actionable results are sought. It is well suited to assessing the efficacy of single design changes or new feature implementations, and it provides straightforward insights that novice and experienced marketers alike can act on, particularly when time is of the essence.

Navigating the terrain of less complex web pages, AB testing offers a practical approach for brands to evaluate modifications that directly affect user experience, such as headline optimization or color scheme adjustments on their landing pages. It’s especially advantageous when initial hypotheses require validation before embarking on more comprehensive testing or when resources are limited, ensuring that customer touchpoints are effectively tuned to enhance engagement and drive conversions.

Advantages of AB Testing

AB testing is valued for its precision and simplicity, making it an exceedingly practical tool for evaluating the direct impact of single changes on user experience. It gives marketers clear, concise feedback on the effectiveness of specific design or content adjustments, enabling swift decision-making and implementation.

Furthermore, by avoiding the complexity inherent in testing multiple variables, AB testing reduces the risk of confounded results, making it easier to interpret customer behavior accurately. This direct cause-and-effect understanding gives marketers the confidence to make informed adjustments that can improve conversion rates and overall website performance.

Potential Drawbacks of AB Testing

One significant limitation of AB testing lies in its narrow focus, which can obscure the broader picture of customer experience. Because it evaluates the impact of a single change, this method may overlook the interplay of multiple elements that collectively influence visitor behavior and conversion outcomes.

Additionally, while AB testing can be cost-effective for iterative design tweaks, it may lead to incremental gains that fail to capture the full potential of a more comprehensive, data-driven optimization strategy. Its reliance on simpler variables means that AB testing is less suited for complex scenarios where multiple factors dictate the success of a digital experience.

| Testing Method | Drawbacks |
|---|---|
| Multivariate Testing | Requires high traffic for statistical significance; resource-intensive analysis. |
| AB Testing | Limited view of customer experience; may yield only incremental gains. |

Dissecting the Testing Process

Deploying the right testing method is crucial in the dynamic field of digital marketing, where understanding and influencing user behavior can dramatically sway the success of online strategies.

When marketers contemplate the most effective way to refine their digital touchpoints, they often weigh the merits of multivariate versus AB testing.

This subsection explores the workflow of each testing method, offering a candid look at how the two processes differ and where they complement each other.

We will guide readers through formulating clear objectives, assessing critical data points, and mapping the sequence of actions that shape the test execution and analysis, ensuring that their digital marketing solutions are both data-informed and result-oriented.

Breaking Down the Multivariate Test Workflow

The multivariate testing workflow begins with the careful selection of variables, which involves pinpointing and altering multiple design elements such as graphics, headlines, and button colors. Armed with a hypothesis about how these factors might interact to affect user experience and conversion rates, marketing teams develop numerous combinations to test against the original page layout.

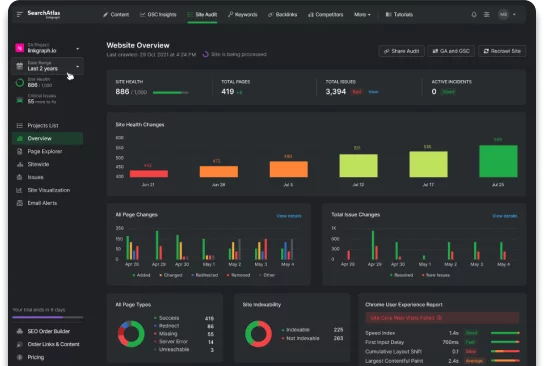

Following the setup, the testing phase begins, using SearchAtlas SEO software to track user interactions across the page variations. This period is critical for gathering actionable data, with a strong emphasis on ensuring that enough website traffic flows through each variation to make the test results statistically significant and reliable for informed decision-making.

Step-by-Step Guide to Conducting AB Tests

AB testing begins with identifying a single page element to modify, setting the stage for a controlled experiment: a direct comparison between the original version and the altered one. With the variations crafted, digital marketers randomly split traffic between the two versions so that each reaches a comparable audience segment, carefully recording the performance indicators that reflect user engagement and conversion rates.

Once sufficient data has been collected, the team analyzes it and uses the findings to refine the page. The digital marketing solutions offered by SearchAtlas let users observe real-time results, enabling swift, data-driven decisions that propel targeted optimizations in their marketing strategies.

| Step | Action | Outcome |
|---|---|---|
| 1 | Select a Page Element | Define variable for AB testing |
| 2 | Create Variations | Produce original and modified versions for comparison |
| 3 | Deploy to Audience | Expose each version to a segment of users |
| 4 | Gather and Analyze Data | Use results to make informed decisions on page optimization |

How Test Objectives Shape the Process

The overarching objectives of a test are paramount, directly influencing the selection of either multivariate or AB testing as the more suitable method. For instance, when a marketing team’s primary aim is to thoroughly understand the collective impact of multiple design elements, multivariate testing is the natural choice, guiding the process towards a comprehensive analysis of complex interactions.

Conversely, if the goal is to swiftly ascertain the effectiveness of a single change, AB testing offers a streamlined pathway to results. This focus on simplicity shapes the testing process into a more singular, targeted endeavor, ideally suited for rapid performance evaluation and implementation of findings:

| Objective | Selection Criteria | Chosen Method |
|---|---|---|
| Detailed Interaction Analysis | Complex page design with numerous variables | Multivariate Testing |
| Single Change Evaluation | Need for quick, clear, actionable results | AB Testing |

Assessment Points During Testing

Crucial to the efficacy of both multivariate and AB testing is the identification and monitoring of key assessment points. These benchmarks, often pre-established before test commencement, serve as barometers for measuring user reaction to different website elements, with an eye toward optimizing the factors that most substantially shape visitor behavior and conversion rates.

As the test runs, ongoing tracking of metrics like bounce rate, click-through rate, and conversion rate provides a continuous stream of data. Marketers and developers watch these figures for anomalies such as tracking errors or broken variants, but the primary success metric should stay fixed once the test has started; changing it mid-test undermines both the validity of the results and the strategic intent of the test.

The Role of Traffic Volume in Testing

In the intricate dance of online optimization, the volume of website traffic plays a pivotal role, asserting significant influence over the efficacy of both multivariate and AB testing methodologies.

Digital marketing professionals must be cognizant of how visitor numbers can shape the reliability of test results, with an acute awareness that the robustness of traffic directly underpins the statistical validity of the insights obtained.

As such, striking an optimal balance between the available traffic and the aims of a given test is paramount in achieving meaningful data that lead to actionable optimizations.

This introduction sets the stage for a deeper dive into the implications of traffic on testing strategies, examining its impact on multivariate testing, comprehension of traffic prerequisites for AB testing, and the art of aligning traffic volume with test objectives to ensure the successful refinement of digital assets.

Impact of Traffic on Multivariate Testing

The influence of traffic volume on multivariate testing is a crucial consideration for digital marketers. High traffic levels are imperative, as they offer a broad dataset that ensures the statistical accuracy of testing multiple design elements and their combinations simultaneously. Without substantial traffic, obtaining significant results from the complex interactions of variables remains elusive.

For multivariate tests to yield meaningful insights, there must be enough visitor data to detect the nuances of user preferences and the behavior changes caused by the tested page elements. This demand for high traffic makes multivariate testing best suited to established sites with robust visitor numbers, where detailed analytical outcomes can truly drive informed digital marketing solutions.

Understanding Traffic Requirements for AB Testing

AB testing hinges on the principle of simplicity but still requires an adequate influx of website traffic to substantiate its conclusions. Without a steady flow of users engaging with the tested variants, discerning the more effective page design remains speculative, underscoring why even the most straightforward AB testing demands a baseline amount of traffic to ensure reliability.

Digital marketing strategies that incorporate AB testing must consider the traffic threshold essential for robust test outcomes. Ensuring a sufficient volume of visitor data is the linchpin for observing meaningful behavioral patterns, ultimately informing the optimization decisions and cultivating a more effective user experience.

Balancing Traffic and Test Goals

In the intricate sphere of digital marketing tests, aligning traffic volume with specific testing objectives is a delicate art. Marketers adeptly orchestrate this balance, acknowledging that the robustness of multivariate testing hinges on substantial traffic, while AB testing requires sufficient volume to validate changes influenced by a single variable. Their strategies are calculated, ensuring that each testing method is applied in a context that reflects not only the immediate goals but also the realistic traffic conditions of their digital domains.

Professionals responsible for website optimization closely monitor traffic flows to ensure that the chosen testing method will provide reliable insights. They recognize that aligning high-traffic volumes with multivariate testing allows for a deeper dive into how different variables interact, while with AB testing, the clarity in results from a moderate traffic stream enables rapid implementation of enhancements in user experience. This strategic balance fosters a more precise and data-driven marketing approach, ultimately guiding customer-centric growth.

Data Complexity in Multivariate vs. AB Testing

Discerning the effectiveness of various page elements to enhance user experience and conversion lies at the heart of both multivariate and AB testing, yet the intricacy of data involved in each method varies greatly.

Multivariate testing involves a deeper engagement with data complexity due to the simultaneous analysis of multiple variables, while AB testing streamlines this process by focusing on the impact of a singular change.

As digital marketing professionals navigate through the vast seas of data these tests generate, specialized tools become indispensable assets in managing the intricacies of data analysis, demystifying the process, and unlocking actionable insights.

This comprehensive understanding of data complexity in both testing methods is pivotal for refining strategic decision-making and optimizing digital marketing campaigns with precision.

Data Analysis in Multivariate Testing

Data analysis in multivariate testing is a comprehensive exercise that requires attention to detail and a nuanced understanding of statistical significance. The efficacy of this method hinges on the organization’s ability to concurrently scrutinize several variables, hence demanding a robust analytical framework to interpret complex interactions and their resultant impact on conversion rates and user experience.

Using statistical models that estimate both the individual (main) effect of each element and the interactions between elements, such as factorial ANOVA or regression with interaction terms, the multivariate testing approach deciphers the collective influence of page design adjustments, enabling marketers to distill a wealth of data into strategic improvements. This type of analysis serves as the bedrock for rigorous, methodical optimization, guiding digital professionals as they refine their digital marketing solutions to align with user behavior and preferences.
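To make "main effects" and "interaction effects" concrete, the sketch below computes both from a 2x2 test (headline x hero image). The conversion rates are invented, and the code produces point estimates only; deciding whether these effects are statistically significant would require a model such as a logistic regression with an interaction term or a factorial ANOVA, which is not shown.

```python
# Hypothetical conversion rates observed in a 2x2 multivariate test
# (headline x hero image). The numbers are invented for illustration.
rates = {
    ("old_headline", "old_image"): 0.040,
    ("old_headline", "new_image"): 0.045,
    ("new_headline", "old_image"): 0.048,
    ("new_headline", "new_image"): 0.062,
}


def mean(values):
    return sum(values) / len(values)


def main_effect(rates, factor_index, new_level, old_level):
    """Average rate at the new level minus average rate at the old level,
    pooling over the levels of the other factor."""
    new = [r for cell, r in rates.items() if cell[factor_index] == new_level]
    old = [r for cell, r in rates.items() if cell[factor_index] == old_level]
    return mean(new) - mean(old)


headline_effect = main_effect(rates, 0, "new_headline", "old_headline")
image_effect = main_effect(rates, 1, "new_image", "old_image")

# Interaction: how much more the new image helps when paired with the new
# headline than when paired with the old one (a difference of differences).
interaction = (
    (rates[("new_headline", "new_image")] - rates[("new_headline", "old_image")])
    - (rates[("old_headline", "new_image")] - rates[("old_headline", "old_image")])
)

print(f"headline main effect: {headline_effect:+.4f}")  # +0.0125
print(f"image main effect:    {image_effect:+.4f}")     # +0.0095
print(f"interaction:          {interaction:+.4f}")      # +0.0090
```

In this invented example the new image lifts conversions by 0.5 points with the old headline but by 1.4 points with the new one; an AB test of the image alone, run against the old headline, would understate its value in the best combination.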

Simplifying Data Interpretation in AB Testing

In AB testing, data interpretation is streamlined due to the nature of evaluating a single change on a user’s experience. This focused analysis allows for a clear-cut understanding of how variations in one element influence metrics like conversion rates, effectively removing extraneous variables that could complicate the data.

By homing in on one factor, AB testing helps digital marketing professionals quickly identify successful adjustments that can enhance user engagement. The simplified dataset not only speeds up analysis but also enables more efficient iterations and optimizations of the tested element.
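For a single change measured as a conversion rate, the interpretation step often comes down to one comparison. The sketch below uses a two-proportion z-test, a standard choice when both variants have large samples; the visitor and conversion counts are invented, and testing platforms may use other methods (for example Bayesian or sequential analyses).

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    Uses the pooled-proportion standard error, a standard large-sample
    approximation; returns (observed lift, z statistic, p-value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion")
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_b - p_a, z, p_value


# Hypothetical results: 200/5,000 conversions on A vs 250/5,000 on B.
lift, z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"lift={lift:.3f}  z={z:.2f}  p={p:.4f}")  # about z=2.41, p=0.016
```

With these invented counts, the one-point lift (4% to 5%) clears the conventional 0.05 threshold; the same lift on a tenth of the traffic would not.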

Tools to Handle Data Complexity

To tackle the intricacies of data stemming from sophisticated multivariate testing or the more straightforward AB testing, digital marketing professionals turn to specialized software like SearchAtlas. This SEO software streamlines the analysis phase by efficiently organizing complex datasets, allowing marketers and product teams to extract meaningful insights and drive strategic decisions.

SEO Content Assistant and content planner tools, integral components of SearchAtlas, provide tailored solutions that simplify the manipulation of extensive data. These resources bolster digital marketing strategies by enhancing the capability to evaluate the interaction of numerous page elements or to pinpoint the effectiveness of singular changes, thus enabling a data-backed refinement of the user experience.

Duration Comparison for Test Validity

A journey through the labyrinthine pathways of website testing raises the question of duration: how long must an organization wait before it can harvest reliable, actionable data?

Time becomes a pivotal factor when considering the intricacies of multivariate analysis and the streamlined process of AB testing.

Professionals in digital marketing circles must accurately estimate the length of time required for each testing method to ensure validity in their experimental results.

The forthcoming discussion will shed light on the temporal aspects that govern both testing strategies, offering clarity on estimating multivariate test durations and timing AB tests for optimum accuracy.

Estimating Test Duration for Multivariate Analysis

When embarking on multivariate testing, practitioners must establish a realistic timeline that accounts for the complexity of evaluating multiple page elements simultaneously. Given the nature of this testing method, which requires ample data to ascertain the collective impact of variables, test durations typically extend over a longer horizon than AB testing, with the period determined by a combination of traffic volume, number of variations, and the stability of test results.

Calculating the duration for multivariate analysis involves anticipating the time needed to collect a robust set of data across all variant combinations. Marketers must ensure each permutation receives sufficient exposure, allowing the nuanced effects of variable interactions to emerge statistically. Adequate test lengths safeguard against premature conclusions, endowing digital strategists with the confidence to implement data-driven optimizations backed by comprehensive analysis.
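A rough duration estimate follows from the inputs named above. The sketch below assumes an even traffic split, a steady daily visitor count, and a per-version sample size already obtained from a power calculation; the 8,000-visitor figure and the function name are hypothetical placeholders, not recommendations. Treating each combination as its own arm is the conservative case; analyses that pool data to estimate main effects can need fewer visitors per combination.

```python
from math import ceil


def estimate_test_days(visitors_per_version: int, n_versions: int,
                       daily_visitors: int, traffic_share: float = 1.0) -> int:
    """Days needed for every page version to reach its target sample size,
    assuming traffic is split evenly and arrives at a steady daily rate."""
    total_needed = visitors_per_version * n_versions
    daily_in_test = daily_visitors * traffic_share
    return ceil(total_needed / daily_in_test)


# Hypothetical inputs: 8,000 visitors per version, 5,000 visitors per day.
print(estimate_test_days(8000, 12, 5000))  # 12-version multivariate test -> 20 days
print(estimate_test_days(8000, 2, 5000))   # 2-version AB test -> 4 days
```

The same traffic that settles a two-version test in under a week needs roughly three weeks for twelve combinations, which is why multivariate tests favor high-traffic pages.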

Timing Your AB Tests for Accurate Results

In the context of AB testing, precise timing is crucial to ensure the collection of results that truly reflect the impact of the tested variable on user experience and conversion rate. Digital marketers must therefore calibrate the length of AB tests, taking into account factors like the stability of website traffic and the sensitivity of the variable being tested, ensuring sufficient duration for a clear determination of outcome efficacy.

Key to the accuracy of AB testing results is the alignment of test duration with visitor traffic patterns and the anticipated effect size of the change. A practical approach is to calculate the required sample size before launch and run the test for at least one full weekly cycle, so that weekday and weekend behavior are both represented. Teams should monitor progress throughout the test period, but stopping an experiment the moment the data look significant inflates the false-positive rate; unless a sequential testing method designed for interim looks is used, the test should run until the planned sample size is reached.
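One way to align duration with traffic patterns is to compute the days needed for the planned sample and then round up to whole weeks. This is a planning sketch under stated assumptions (steady daily traffic, an even split between variants, a per-variant sample size fixed in advance); the function name and inputs are hypothetical.

```python
from math import ceil


def ab_test_duration_days(n_per_variant: int, daily_visitors: int,
                          n_variants: int = 2, min_weeks: int = 1) -> int:
    """Days to reach the planned sample, rounded up to whole weeks so every
    weekday is represented equally (assumes a steady daily visitor count)."""
    raw_days = ceil(n_per_variant * n_variants / daily_visitors)
    weeks = max(min_weeks, ceil(raw_days / 7))
    return weeks * 7


# Hypothetical inputs: 6,000 visitors per variant, 1,500 visitors per day.
print(ab_test_duration_days(6000, 1500))  # 8 raw days -> 14 days (two full weeks)
```

Rounding up rather than stopping on day 8 avoids a test window that over-represents one part of the week.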

The Statistical Significance in Both Test Types

Grasping the concept of statistical significance is fundamental when dissecting the effectiveness of website optimization through testing methods.

Both multivariate and AB testing rely on statistical significance to rationalize which variations genuinely improve user experience and conversion rates.

As digital marketing solutions increasingly depend on data-driven decisions, a nuanced comprehension of significance levels becomes crucial for validating test outcomes.

The following discussion will delve into the intricacies of establishing significance levels in multivariate testing, and the pursuit of achieving statistical relevance in AB tests, both of which are cornerstones for meaningful interpretation and strategic application of results.

Significance Levels in Multivariate Testing

In multivariate testing, significance levels determine the confidence in asserting that observed differences in conversions or other key performance indicators are not due to random chance. This statistical rigor ensures that when a particular combination of variables outperforms others, digital marketers can trust that the success is likely attributable to the tested elements and not mere fluctuations in visitor behavior.

The computation of these levels involves an interplay between the number of variations tested, the sample size of visitors, and the observed effect sizes: the sample must be large enough to detect even subtle influences of the different variable combinations on user experience and outcomes. By adhering to a stringent significance threshold, typically a p-value below 0.05, and tightening it when many combinations are compared at once, organizations can make data-driven decisions with greater certainty.

| Aspect of Multivariate Testing | Role in Establishing Significance |
|---|---|
| Number of Variations | Determines complexity of analysis and vulnerability to type I errors |
| Sample Size | Impacts the power of the test to detect true differences between variations |
| Effect Size | Influences detectability of meaningful outcomes from variations |
| p-Value Threshold | Sets the standard for statistical confidence in results |
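The link between the number of variations and type I errors can be quantified. Each additional comparison gives random noise another chance to look like a winner. The sketch below computes the family-wise error rate under the simplifying assumption that comparisons are independent, along with the Bonferroni correction, one conservative and widely used adjustment (others, such as the Holm or false-discovery-rate procedures, are less strict). The 12-combination example is hypothetical.

```python
def family_wise_error_rate(alpha: float, m: int) -> float:
    """Chance of at least one false positive across m independent comparisons,
    each tested at significance level alpha."""
    return 1 - (1 - alpha) ** m


def bonferroni_alpha(alpha: float, m: int) -> float:
    """Per-comparison threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / m


# A 12-combination test compared against a control gives 11 comparisons.
m = 11
print(f"FWER at p < 0.05 each: {family_wise_error_rate(0.05, m):.2f}")  # 0.43
print(f"Bonferroni threshold:  {bonferroni_alpha(0.05, m):.4f}")       # 0.0045
```

Without an adjustment, there is roughly a 43% chance that at least one of the eleven comparisons looks like a "winner" purely by chance.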

Achieving Statistical Relevance in AB Tests

Achieving statistical significance in AB testing signals to marketers that the data collected during the testing phase are reliable: the observed difference is unlikely to be due to chance alone. A well-defined success criterion, often conversion rate or a related metric, chosen before the test starts, is what allows the results of the experiment to be judged statistically significant and actionable.

As AB testing typically revolves around comparing two variants, establishing statistical relevance entails understanding the sample size needed to observe a true difference in performance. This ensures results are not swayed by random fluctuation and reflect genuine user preferences and responses:

- Determining the minimum sample size for detecting performance differences.

- Using statistical methods to measure the certainty of observed outcomes.

- Monitoring test data throughout while waiting for the planned sample size before declaring a winner, so that early random swings are not mistaken for lasting results.
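The first step in the list above, determining the minimum sample size, can be sketched with the standard normal-approximation formula for comparing two proportions. The sketch assumes an equal split between variants, a two-sided test, and conventional defaults of 5% significance and 80% power; the 4% baseline and 5% target rates and the function name are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in each variant to detect a change from p_baseline to
    p_target with a two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_baseline + p_target) / 2            # pooled rate, equal group sizes
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_baseline * (1 - p_baseline) + p_target * (1 - p_target))
    ) ** 2
    return ceil(numerator / (p_target - p_baseline) ** 2)


# Hypothetical goal: detect a lift from a 4% to a 5% conversion rate.
print(sample_size_per_variant(0.04, 0.05))  # roughly 6,700 visitors per variant
```

Halving the detectable lift (4% to 4.5%) roughly quadruples the required sample, which is why small expected effects demand far more traffic.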

Testing Multiple Variables vs. Distinct Versions

In digital marketing, the pursuit of an optimal online experience that maximizes conversions hinges on deploying the right testing methodologies.

Marketers often stand at a crossroads, choosing between the broad scope of multivariate testing and the focused lens of AB testing.

Each approach offers distinct advantages and challenges: multivariate testing evaluates several variables concurrently to identify how combined changes affect user behavior, whereas AB testing compares distinct page versions to determine which single change performs better.

This section will explore the fundamental principles of testing multiple variables through multivariate methods and contrast them with the AB test approach’s concentrated analysis of variant pages.

The Principle of Multiple Variable Testing

The Principle of Multiple Variable Testing encapsulates a refined approach wherein marketers and data analysts evaluate the collective effects of various page elements on user behavior and conversion metrics. This technique, often deployed in complex scenarios, scrutinizes the synergy between different design factors—images, CTAs, headlines, and more—to uncover how they coalesce to influence visitor interactions.

Distinguished by its comprehensive nature, multivariate testing equips organizations with granular insights, facilitating a deeper understanding of the intricate web of factors that contribute to a successful user journey. It is a multifaceted tool that transcends the limitations of binary testing, offering a kaleidoscopic view of potential website optimizations.

Testing Variant Pages: The AB Test Approach

The AB testing approach is valued for its clarity. Multivariate testing combines many elements. AB testing instead splits traffic between distinct page versions at the same time and compares their performance. This lets marketers isolate a single variable and observe its direct influence on user engagement and conversion rates, without the complexity of multiple variable interactions.

Used well, AB testing delivers faster insights by pitting one version directly against another. Marketers can then quickly identify which changes advance their digital marketing objectives. This focused strategy streamlines optimization. It helps ensure that each design or content change that ships actually improves the user’s path toward the desired action.
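One common way to decide whether version B beats version A is a two-proportion z-test on their conversion rates. It is not the only way: many testing tools use Bayesian or sequential methods instead. The sketch below is a minimal version of that test. The visitor and conversion counts are hypothetical.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a / n_a: conversions and visitors for version A (control).
    conv_b / n_b: conversions and visitors for version B (variant).
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical results: A converts 200 of 5,000 visitors, B converts 250 of 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers, B converts at 5% and A at 4%. The resulting p-value falls below the conventional 0.05 threshold. Because only two versions share the traffic, each one reaches a usable sample size relatively quickly.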

| Strategy | Focus | Variables changed | Outcome |
|---|---|---|---|
| Multivariate testing | Interaction effects | Multiple | Comprehensive insight |
| AB testing | Direct influence | Single | Quick iteration |

Multivariate vs. AB Testing: Which Yields Quicker Insights?

In the competitive landscape of digital marketing, the speed at which testing produces insights can significantly affect a company’s ability to adapt and thrive.

Multivariate and AB testing differ in how quickly they generate results. Each plays a distinct but equally important role in a marketer’s toolkit.

Businesses want to optimize user experiences quickly and effectively. To do so, they need to understand a trade-off: AB testing reaches conclusions rapidly, while multivariate testing delivers broader but slower insights.

The following subsections compare how quickly each method yields actionable data for guiding digital strategies across diverse online campaigns.

Speed of Generating Results in Multivariate Testing

Multivariate testing generally produces actionable insights more slowly than AB testing because it analyzes many combinations of variables at once. Each combination needs enough data to validate the findings. A multivariate test must therefore run until the complex interplay of the tested page elements yields statistically significant results.

The multivariate approach offers a deeper understanding of how design elements interact to affect user behavior and conversion rates. That granularity comes at a cost, however. The available traffic is split across every variation, and each one must accumulate enough visitors on its own. As a result, gathering enough data to inform strategic decisions takes considerably longer than in a comparable AB test.
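The standard normal-approximation sample-size formula for comparing two conversion rates shows the traffic arithmetic behind this. The baseline rate, target lift, daily traffic, and 12-variant design below are all hypothetical. The sketch also simplifies by assuming that every multivariate variant needs the same sample as one AB comparison. Real designs often need more, for example after adjusting for multiple comparisons.

```python
import math
from statistics import NormalDist

def visitors_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect a relative lift in
    conversion rate with a two-sided two-proportion test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

def days_to_finish(num_variants, per_variant, daily_visitors):
    """Days needed if daily traffic is split evenly across all variants."""
    return math.ceil(num_variants * per_variant / daily_visitors)

# Hypothetical scenario: 4% baseline conversion, aiming to detect a 20% relative lift,
# with 2,000 visitors per day.
n = visitors_per_variant(baseline_rate=0.04, relative_lift=0.20)
ab_days = days_to_finish(2, n, daily_visitors=2000)    # control + one variant
mvt_days = days_to_finish(12, n, daily_visitors=2000)  # 12-variant multivariate test
print(f"{n} visitors per variant: AB test ~{ab_days} days, multivariate test ~{mvt_days} days")
```

In this scenario, the per-variant requirement is identical for both methods. The multivariate test takes roughly six times as long simply because the same daily traffic is divided twelve ways instead of two.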

AB Testing and the Race for Rapid Conclusions

AB testing excels at delivering fast feedback. This makes it a favored method for marketers who prioritize speed in their optimization cycle. Traffic is split between only two versions, so each one reaches a meaningful sample size sooner. Measuring a single change also keeps the analysis simple. Together, these shorten the test and speed up data-driven decisions.

Organizations use this speed to iterate on web pages and quickly roll out improved features or design changes that resonate with their audience. That agility matters because user trends and competitive dynamics shift rapidly online. It lets businesses adapt and optimize promptly.

Deciding Between Multivariate Testing and AB Testing

In digital marketing, every click and interaction reveals something about visitors, so selecting the right testing method is critical.

Professionals must weigh several considerations when deciding whether to use multivariate or AB testing.

Two vital inputs to this decision are the complexity of the hypothesis and the resources available for a thorough analysis.

Choosing a test type is therefore not only a tactical choice but also a strategic one. It should be tailored to specific marketing objectives and operational capacity.

Factors to Consider in Choosing a Test Type

When choosing between multivariate and AB testing, digital marketers should keep a few key factors at the forefront. First, the campaign’s specific objectives and the complexity of the digital asset being tested determine which method is appropriate. Multivariate testing suits in-depth analysis of multiple variables. AB testing is ideal for simpler, more focused experiments.

Additionally, the volume of available website traffic and the resource capacity of the organization play a crucial role in this decision. While multivariate testing requires high visitor numbers to produce statistically significant insights, AB testing can be conducted with less traffic and typically demands fewer resources, both in time and manpower, to reach conclusive results.

Evaluating the Complexity of Your Hypothesis

Evaluating the complexity of your hypothesis is a critical first step in deciding which testing process to apply. Multivariate testing is the approach of choice when the hypothesis assumes that multiple variables could interact in complex ways. Those interactions can affect the user experience and, ultimately, the site’s conversion rates.

Some hypotheses, however, are straightforward and predict that a single change could have a considerable impact on visitor behavior. In that case, AB testing is usually more suitable. Matching the test type to the hypothesis helps marketers allocate resources efficiently and fine-tune their digital marketing strategies around the predictions they actually want to test.
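A small worked example shows what "interact" means in practice. Suppose a 2×2 multivariate test has two elements, each in an old and a new version. One simple way to measure their interaction is a difference in differences. You compare the new CTA's lift when the headline is old with its lift when the headline is new. The conversion rates below are invented for illustration. A real analysis would also test whether the interaction is statistically significant.

```python
# Hypothetical conversion rates from a 2x2 multivariate test:
# headline (old/new) crossed with CTA button (old/new).
rates = {
    ("old_headline", "old_cta"): 0.040,
    ("new_headline", "old_cta"): 0.046,
    ("old_headline", "new_cta"): 0.045,
    ("new_headline", "new_cta"): 0.058,
}

def interaction_effect(rates):
    """How much the new CTA's lift changes when the new headline is also shown.

    Zero means the two changes simply add up; a positive value means they
    reinforce each other; a negative value means they work against each other.
    """
    cta_lift_old_headline = rates[("old_headline", "new_cta")] - rates[("old_headline", "old_cta")]
    cta_lift_new_headline = rates[("new_headline", "new_cta")] - rates[("new_headline", "old_cta")]
    return cta_lift_new_headline - cta_lift_old_headline

# If the effects were purely additive, the combined variant would convert at
# baseline + headline lift + CTA lift.
base = rates[("old_headline", "old_cta")]
additive_prediction = (
    base
    + (rates[("new_headline", "old_cta")] - base)
    + (rates[("old_headline", "new_cta")] - base)
)
print(f"additive prediction: {additive_prediction:.3f}")
print(f"observed:            {rates[('new_headline', 'new_cta')]:.3f}")
print(f"interaction effect:  {interaction_effect(rates):+.3f}")
```

In these made-up numbers, the combined variant converts better than the two separate lifts would predict. Testing one element at a time can miss exactly this kind of effect.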

Assessing the Resources Available for Testing

An organization’s capacity to run either multivariate or AB testing depends on a practical assessment of its available resources, including expertise, time, and technological infrastructure. Multivariate testing evaluates many variables simultaneously. It therefore often demands more sophisticated statistical knowledge and a more robust technical setup, which can constrain leaner marketing teams or those with limited analytical tools.

Conversely, AB testing is comparatively simple and therefore more accessible to organizations with fewer resources. It allows quicker turnaround times and requires less complex analysis. This makes it a viable option for teams that want to optimize user experience without intensive data analysis or substantial investment in advanced testing software.
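One concrete reason multivariate testing demands more statistical care is the multiple-comparisons problem. When many variants are each compared against a control at the same significance level, the chance that at least one looks like a winner purely by chance grows quickly. The sketch below illustrates this with the Bonferroni correction, chosen here because it is simple and conservative; it is not the only adjustment available. The 12-variant scenario is hypothetical. The false-positive formula also assumes independent comparisons, which comparisons against a shared control are not, strictly speaking.

```python
def bonferroni_alpha(alpha, num_comparisons):
    """Per-comparison threshold that keeps the chance of any false
    positive across all comparisons at or below alpha (Bonferroni)."""
    return alpha / num_comparisons

def chance_of_any_false_positive(alpha, num_comparisons):
    """Probability of at least one false positive when every comparison
    uses the same alpha. Assumes the comparisons are independent."""
    return 1 - (1 - alpha) ** num_comparisons

# One comparison in a simple AB test vs. 11 variants compared against a control
# in a hypothetical 12-variant multivariate test.
for k in (1, 11):
    unadjusted = chance_of_any_false_positive(0.05, k)
    adjusted = chance_of_any_false_positive(bonferroni_alpha(0.05, k), k)
    print(f"{k} comparisons: {unadjusted:.1%} unadjusted, {adjusted:.1%} with Bonferroni")
```

With 11 unadjusted comparisons, the chance of at least one spurious "winner" is over 40%. With the correction, it stays under 5%. The price is that each individual comparison needs stronger evidence, and therefore more traffic, to count as significant.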

Conclusion

Understanding the differences between multivariate and AB testing is vital for digital marketing success.

Multivariate testing offers a comprehensive analysis of how various page elements interact to affect user behavior. This makes it best suited to high-traffic sites that need in-depth data.

It enables organizations to pinpoint precise design improvements but demands significant traffic and resources.

In contrast, AB testing excels in simplicity, focusing on the impact of a single variable.

This approach suits environments where rapid insights are crucial for making swift optimizations with fewer resources.

Choosing the right testing method hinges on campaign objectives, traffic volume, resource availability, and hypothesis complexity.

Ultimately, a strategic grasp of both testing types equips marketers to enhance user experience and drive conversions effectively.