The Definitive Guide to SEO Log File Analysis: Unleashing Insights for Optimization

Mastering SEO Log File Analysis: Key Strategies for Website Optimization

SEO log file analysis unveils treasures of insights for businesses seeking to optimize their website’s performance in search engine rankings.

By dissecting the raw data that search engine bots leave behind, companies can refine their SEO strategies with precision, ensuring they cater to both the algorithmic nuances of search engines and the preferences of their user base.

LinkGraph’s SEO services transform this often-overlooked asset into actionable tactics, elevating client engagement through meticulous examination of server logs.

Implementing optimizations rooted in solid log file data stands as a cornerstone of successful SEO practices.

Keep reading to unlock the advanced techniques that turn log file analysis into a competitive edge.

Key Takeaways

- Log File Analysis Is Crucial for Optimizing a Website’s SEO Performance and Search Visibility

- Automated Retrieval Systems for Server Log Data Enhance the Efficiency of SEO Strategies

- Detailed Understanding of Crawl Patterns Allows for Targeted Website Optimizations and Improved Indexing

- Server Response Codes Within Log Files Can Identify Technical Issues That Affect a Website’s Search Rankings

- Integrating Log File Insights Into an SEO Strategy Provides a Competitive Advantage in the Ever-Changing Digital Landscape

Exploring the Basics of Log File Analysis

Within the realm of Search Engine Optimization, log file analysis stands as a fundamental yet often underutilized practice.

These server-generated records—log files—offer a granular view of how search engine bots interact with a website.

By examining these digital footprints, SEO professionals can uncover invaluable insights into the search engine’s crawling patterns.

Breaking down log files reveals significant data types, from HTTP status codes to user agent details, each offering a different perspective on a website’s health and search visibility.

Recognizing the role of log files in SEO, professionals equipped with this knowledge can craft strategic interventions to enhance a site’s performance in search results.

Understanding What Log Files Are

Log files are the server’s ledger of every request it receives, including each visit from a search engine crawler, recorded down to the finest detail. Stored on the server, these files are comprehensive transaction logs that SEO professionals scrutinize to track a search engine bot’s every move on a site.

Each entry in a log file captures a wealth of information, including the requested URL, the timestamp of access, the status code returned, and the user agent of the requester. Analyzing this information provides SEO experts with a precise account of the website’s engagement with search bots:

- Uncovering crawl patterns that indicate how often Googlebot and other crawlers visit specific pages.

- Identifying frequent crawl errors which could hinder proper indexing and affect SERP rankings.

- Calculating crawl budget allocation to ensure vital pages receive appropriate attention from search engines.
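The fields described above can be pulled out of a raw log line with a short parser. The following is a minimal sketch in Python, assuming the server writes the widely used Combined Log Format; the pattern, the `parse_line` helper, and the sample line are illustrative and would need adjusting for a custom log layout.

```python
import re
from datetime import datetime

# Assumption: the server uses the Combined Log Format.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+)[^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry

# Hypothetical sample line for illustration only.
sample = ('66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
          '"GET /products/widget HTTP/1.1" 200 5120 "-" '
          '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')
entry = parse_line(sample)
print(entry["url"], entry["status"], entry["user_agent"])
```

Lines that do not match return `None`, so malformed entries can be counted and skipped rather than stopping the analysis.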

Recognizing the Role of Log Files in SEO

SEO log file analysis presents a crucial component in a comprehensive SEO strategy, allowing LinkGraph’s professionals to insightfully assess a website’s interaction with search engine crawlers. By leveraging server log file data, an SEO expert can pinpoint areas that need optimization, from improving page response times to eliminating crawl errors, thus aligning the website’s performance with search engine algorithms for better SERP positioning.

LinkGraph’s adept use of log file data assists in judiciously allocating the crawl budget, a practice which ensures that a website’s most critical pages are frequently visited and indexed by search engine bots. This meticulous attention to detail in log file analysis substantiates the proactive approach taken by LinkGraph’s SEO services in identifying and addressing issues that could otherwise impede a website’s ability to rank effectively.

Identifying the Types of Data in a Log File

Delving into the intricacies of log file analysis begins with differentiating the data types captured by these records. Central to understanding a crawler’s interaction with a website, each log file entry documents elements like the IP address of the visitor, the specific resource accessed, denoted by the URI, and the response code indicating the server’s reply to the request.

Enhanced scrutiny of log files by LinkGraph’s SEO services reveals not just the pages crawled but also the temporal patterns of visits by search engine bots, distinguished by precise timestamps. This data becomes pivotal in constructing an SEO strategy that aligns with the rhythm of search engine activity, ensuring the most impactful pages are prioritized.

Gaining Access to Your Log Files for Analysis

Embarking on the journey of SEO log file analysis begins with accessing the wealth of data ensconced within a website’s server logs.

These digital archives are pivotal for understanding how search engine bots navigate and interact with a website, but acquiring this information poses its own set of challenges.

Experts at LinkGraph’s SEO services provide meticulous guidance on locating these crucial files, utilizing advanced tools and techniques to extract them efficiently.

Furthermore, they advocate for the implementation of automated retrieval systems, ensuring continuous log file acquisition—an essential step for maintaining a robust SEO strategy.

This process is integral to the optimization of a website’s performance in search engine rankings.

Locating Your Log Files on the Server

Beginning the log file analysis process necessitates gaining access to the trove of log files housed within a website’s server. Identifying the precise location of these files typically involves navigating the server’s file management system, be it cPanel for shared hosting environments or direct server access for dedicated hosting, which is an area where LinkGraph’s expertise simplifies the task for their clients.

Once into the server interface, LinkGraph’s SEO services assist in pinpointing the specific directory storing these log files, often found within the ‘logs’ or ‘statistics’ folders. The clear elucidation of the retrieval process, tailored to the server type—Apache, Nginx, or IIS—empowers website administrators to undertake this vital first step in SEO log file analysis with confidence.
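As a starting point, a short script can check a few common default log locations. This is a sketch only: the paths below are typical defaults for each server type, but hosting providers and administrators frequently change them, so the `CANDIDATE_PATHS` mapping should be treated as an assumption to verify against the actual server configuration.

```python
from pathlib import Path

# Typical default locations; actual paths depend on distribution and host configuration.
CANDIDATE_PATHS = {
    "Apache (Debian/Ubuntu)": "/var/log/apache2/access.log",
    "Apache (RHEL/CentOS)": "/var/log/httpd/access_log",
    "Nginx": "/var/log/nginx/access.log",
    "IIS": r"C:\inetpub\logs\LogFiles",
}

def find_existing_logs(candidates=CANDIDATE_PATHS):
    """Return the subset of candidate paths that exist on this machine."""
    return {name: path for name, path in candidates.items() if Path(path).exists()}

for name, path in find_existing_logs().items():
    print(f"{name}: {path}")
```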

Tools and Methods for Extracting Log Files

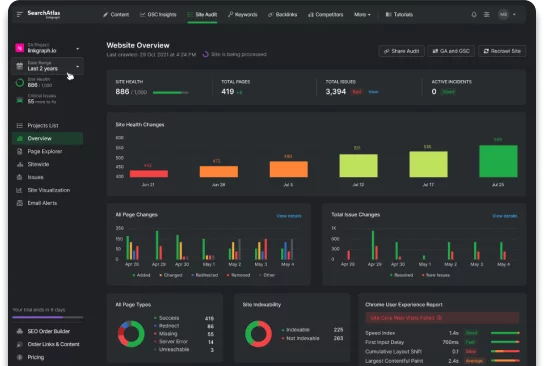

The extraction of log files is a technical process that requires specific tools and expertise. LinkGraph’s SEO services expertly utilize tools such as Screaming Frog Log File Analyser and the proprietary SearchAtlas SEO software to systematically extract and interpret the log data.

Navigation through a multitude of server environments is a common hurdle, yet LinkGraph’s seasoned specialists employ techniques compatible with various platforms including Apache, Nginx, and IIS, ensuring seamless access to log files for comprehensive analysis and strategic SEO implementation.

Setting Up Automated Log File Retrieval

Establishing an automated system for log file retrieval serves as a cornerstone in maintaining a consistent SEO log file analysis protocol. LinkGraph’s professionals excel in configuring routines that effortlessly gather log files, dramatically enhancing the efficiency and accuracy of ongoing SEO audits and strategies.

Automation ensures that the latest server log file data is readily available for examination, providing LinkGraph’s clients with a continuous stream of actionable insights. This systematic approach ensures peak website performance and aligns with the ever-evolving landscape of search engine algorithms.

| Step | Action | Benefit |
|---|---|---|
| 1 | Configuration of automated log retrieval systems | Enables consistent data collection |
| 2 | Extraction of up-to-date log files | Facilitates timely log analysis |
| 3 | Utilization of insights for SEO strategy refinement | Improves search engine rankings and website performance |
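One way to sketch the collection step is a small Python routine that reads every access log in a directory, including rotated files that have been gzip-compressed. The `iter_log_lines` helper and the `access.log*` file pattern are assumptions for illustration; a scheduler such as cron could run a script like this at a fixed interval.

```python
import gzip
from pathlib import Path

def iter_log_lines(log_dir, pattern="access.log*"):
    """Yield lines from every matching log file, including gzip-rotated ones."""
    for path in sorted(Path(log_dir).glob(pattern)):
        # Rotated logs are often compressed; pick the matching opener.
        opener = gzip.open if path.suffix == ".gz" else open
        with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
            for line in handle:
                yield line.rstrip("\n")
```

Because the function is a generator, large log archives can be streamed line by line instead of being loaded into memory at once.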

Unveiling the Importance of Log File Analysis

The strategic analysis of server log files is an indispensable component for diagnosing the vitality of a website and honing its optimization for search engines.

These critical records, often overlooked, provide a diagnostic lens through which the efficacy of a website’s interactions with search engine bots can be evaluated.

Illuminating areas for potential enhancement, log file data serves as a compass for SEO specialists at LinkGraph, guiding the refinement of SEO strategies and accelerating the journey towards superior SEO performance.

In dissecting log file intricacies, one can unearth the depth of their influence on a website’s search standing, translating cryptic server records into robust, data-driven SEO campaigns.

Evaluating Site Health Through Log Files

Evaluating a website’s health transcends mere surface analysis; it requires peering into the digital exchange between search engine crawlers and the web server. By analyzing log file data, LinkGraph’s SEO services enable the detection of anomalies that could reflect issues such as slow page response times or unindexed pages, both of which can undermine site performance in the eyes of search algorithms.

LinkGraph directs focused scrutiny towards the subtleties captured in log files, like the frequency and pattern of search engine bot visits, to assess the health of a client’s digital property. Through this in-depth review, their SEO services are adept at identifying technical impediments that could obstruct a seamless user experience and diminish a website’s search engine standing.

Learning How Log Files Impact SEO Performance

Log file analysis directly influences SEO performance by shedding light on search engine crawler behavior. Understanding how crawlers access and navigate a website helps SEO experts identify which areas are performing well and which need improvement to optimize search rankings.

Consistent log file analysis provided by LinkGraph’s SEO services informs clients about their site’s accessibility and indexability: critical factors that determine online visibility and user experience. This intricate process allows for strategic adjustments, ensuring that a website adheres to search engine best practices and remains competitive:

- Highlighting the most and least accessed pages helps refine internal linking and content visibility strategies.

- Analyzing response codes from the server can lead to necessary technical optimizations to improve site health.

- Insights into crawl frequency guide content updates and architecture adjustments to better cater to search engine algorithms.

Using Log Data to Inform SEO Strategies

To harness the full potential of SEO, integrating log data into the formulation of strategies is pivotal. LinkGraph’s SEO services tap into this resource, using insights from server log files to tweak and refine their clients’ campaigns for maximum search engine affinity and efficiency.

LinkGraph’s mastery in interpreting log file patterns is central to developing SEO tactics aimed at elevating a website’s search engine performance. The data procured can explicitly guide adjustments in a website’s technical framework, informing decisions from content refresh cycles to structural tweaks with precision:

- Analyzing server responses aids in sculpting a robust structural foundation that promotes efficient crawling and indexing.

- Understanding bot behavior informs when and where new content is published, ensuring alignment with existing search demand.

- Insights from timestamp correlations between log data and user engagement support the optimization of content delivery timings.

Conducting in-Depth SEO Log File Analysis

Embarking on a comprehensive SEO log file analysis illuminates the intricate dance between server and crawler, a critical component of any successful optimization strategy.

For those committed to refining their website’s performance, an in-depth examination of server logs reveals a trove of data that serves as the foundation for informed decision-making.

From scrutinizing status codes to discern underlying issues to measuring indexability and understanding the dynamics of crawler activity across web pages, log file information holds the power to dramatically shape a site’s SEO destiny.

An analysis that considers crawl depth, observes trends over time, and contrasts the nuances of desktop versus mobile bot behavior equips professionals with actionable insights, underscoring the profound impact of a nuanced log file evaluation on overarching SEO success.

Analyzing Status Codes to Uncover Issues

Analyzing status codes within log files is a critical step for diagnosing potential roadblocks that could stifle a website’s search engine visibility. LinkGraph’s SEO services meticulously examine these codes, which serve as indicators of a website’s response to crawler requests, identifying errors such as 404 Page Not Found or 500 Internal Server Error that can have deleterious effects on SEO performance.

Through diligent assessment, the SEO specialists at LinkGraph use status code data to detect patterns of systemic issues, enabling the swift resolution of problems that hinder user experience and impede a site’s ability to rank. This proactive approach allows for refined adjustments to a website’s infrastructure, ensuring seamless navigation for both users and search engine bots alike.
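A simple tally of response classes for crawler requests illustrates this kind of check. The sketch below assumes log entries have already been parsed into dictionaries with `status` and `user_agent` keys (both names are illustrative), and it filters on the user agent string alone, which can be spoofed.

```python
from collections import Counter

def status_summary(entries, bot_token="Googlebot"):
    """Count crawler responses by status class (2xx, 3xx, 4xx, 5xx)."""
    counts = Counter()
    for entry in entries:
        if bot_token in entry["user_agent"]:
            counts[f"{entry['status'] // 100}xx"] += 1
    return counts

# Hypothetical parsed entries for illustration only.
entries = [
    {"url": "/", "status": 200, "user_agent": "Googlebot/2.1"},
    {"url": "/old-page", "status": 404, "user_agent": "Googlebot/2.1"},
    {"url": "/checkout", "status": 500, "user_agent": "Googlebot/2.1"},
    {"url": "/", "status": 200, "user_agent": "Mozilla/5.0"},
]
print(status_summary(entries))
```

A rising share of 4xx or 5xx responses in this summary is a prompt to look at the individual URLs behind the errors.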

Assessing Indexability From Log File Data

Indexability is a critical determinant of how effectively a website’s content is discovered and interpreted by search engines, and log file data is a key input for assessing it. Because server logs record crawling rather than indexing, LinkGraph’s SEO services methodically evaluate this data alongside index coverage information to ensure search engine crawlers are reaching and indexing the site’s most crucial pages, which in turn bolsters the site’s visibility and enhances user experience.

Through this meticulous scrutiny of indexability, LinkGraph identifies any discrepancies in bot behavior, such as pages that are frequently crawled but not indexed, and implements necessary modifications to enhance a website’s SEO framework:

- Correlating crawl frequency with indexation to pinpoint content that warrants optimization.

- Identifying and resolving issues around directive discrepancies, including ‘noindex’ tags and canonical conflicts.

- Evaluating robots.txt files and XML sitemaps to ensure consistency and accessibility for search engine bots.

This strategic approach to log file analysis is instrumental in fine-tuning the visibility of a website’s pages, thereby laying the groundwork for improved organic search performance and a stronger online presence.
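The robots.txt consistency check mentioned above can be sketched with Python’s standard `urllib.robotparser` module. The `blocked_urls` helper and the sample rules are illustrative, and the standard-library parser may not reproduce every matching rule a given search engine applies, so results should be treated as a first pass.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for a user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Hypothetical rules and URLs for illustration only.
robots_txt = "User-agent: *\nDisallow: /private/\n"
important_urls = ["/private/report", "/products/widget"]
print(blocked_urls(robots_txt, important_urls))
```

Running a list of priority pages through a check like this highlights cases where a page expected to rank is disallowed for crawlers.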

Identifying Most and Least Crawled Pages

In the domain of SEO log file analysis, discerning the pages that attract the most and least search engine crawler activity is paramount. LinkGraph’s SEO services excel in highlighting these pages, enabling website owners to comprehend the distribution of crawler attention, which in turn, signals content and structural optimizations that may be required.

LinkGraph’s meticulous analysis points to the frequency of crawler visits as an indicator of the value search engines ascribe to different web pages. This insight is crucial for SEO professionals who aim to enhance the visibility of under-crawled pages, ensuring content across the website achieves balanced recognition and indexing by search engines.
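A minimal sketch of this comparison counts crawler hits per URL and adds any known pages that never appear in the logs. The `crawl_counts` helper is illustrative, and the list of known URLs is assumed to come from another source such as an XML sitemap.

```python
from collections import Counter

def crawl_counts(crawled_urls, known_urls):
    """Return (url, hits) pairs sorted from most to least crawled.

    Known URLs that never appear in the logs are included with zero hits.
    """
    counts = Counter(crawled_urls)
    for url in known_urls:
        counts.setdefault(url, 0)
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))

# Hypothetical data for illustration only.
crawled = ["/", "/", "/blog/post-1", "/", "/blog/post-1", "/pricing"]
sitemap = ["/", "/pricing", "/blog/post-1", "/blog/post-2"]
for url, hits in crawl_counts(crawled, sitemap):
    print(hits, url)
```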

Understanding Crawl Depth and Its Effect on SEO

Grasping the concept of crawl depth is fundamental for SEO advancement, as it reflects how many clicks away from the homepage a page is located. LinkGraph’s SEO services acknowledge that pages buried deeper in a website may receive less attention from search engine bots, potentially impacting their contribution to the site’s SEO stature. The company’s specialists strive to optimize site architecture, ensuring pivotal content is reachable within a minimal number of clicks, which is integral for superior search engine visibility.

In the undertakings of SEO professionals, the measurement of crawl depth offers perspective on a website’s content hierarchy and its accessibility to crawlers. With a deep understanding of this aspect, LinkGraph’s team crafts strategies to enhance search engine crawling efficiently, thus amplifying the chances for a more diverse set of pages to rank well. The mastery over this element of log file analysis ensures that LinkGraph’s clients experience an uptick in the SEO value derived from each webpage.
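Click depth can be computed with a breadth-first search over the site’s internal links. The sketch below assumes the link graph is already available as a dictionary mapping each page to the pages it links to (for example from a site crawl, since server logs alone do not contain this); the `click_depths` helper and the sample graph are illustrative.

```python
from collections import deque

def click_depths(links, home="/"):
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal link graph for illustration only.
links = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/comments"],
}
print(click_depths(links))
```

Joining these depths with crawl counts per URL shows whether deeper pages are in fact visited less often on a given site.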

Examining Log File Trends Over Time for Insight

Analyzing log file trends over time is a crucial strategy for discerning how search engine behavior evolves and what that means for website optimization. LinkGraph’s SEO services harness this longitudinal data to unravel patterns that reflect the seasonality of crawler activities, enabling them to adjust SEO strategies responsively and proactively.

Through this continuous observation, LinkGraph’s experts can identify long-term shifts in search engine algorithms and user behavior, ensuring that clients’ websites remain aligned with current standards and practices. This strategic foresight facilitates the anticipation of necessary alterations to a site’s SEO blueprint, ultimately safeguarding its sustained search engine relevance and performance.
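The simplest form of trend data is a count of crawler requests per day. The following sketch assumes the request timestamps have already been parsed into `datetime` objects; the `daily_crawl_counts` helper and the sample values are illustrative.

```python
from collections import Counter
from datetime import datetime

def daily_crawl_counts(timestamps):
    """Count crawler requests per calendar day from a list of datetimes."""
    return Counter(ts.date().isoformat() for ts in timestamps)

# Hypothetical timestamps for illustration only.
hits = [
    datetime(2023, 10, 10, 13, 55),
    datetime(2023, 10, 10, 18, 2),
    datetime(2023, 10, 11, 4, 30),
]
for day, count in sorted(daily_crawl_counts(hits).items()):
    print(day, count)
```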

Comparing Desktop and Mobile Crawling Behavior

In the nuanced world of SEO, understanding the divergences in desktop and mobile crawling behaviors is imperative for creating adaptive strategies. LinkGraph’s SEO services meticulously parse log file data to delineate these behaviors, recognizing that mobile friendliness is now a significant factor in search engine rankings.

With the advent of mobile-first indexing, LinkGraph’s insights into crawler interactions enable the fine-tuning of technical SEO elements. This ensures that websites deliver optimal experiences across all devices, a critical factor in today’s search landscape where user engagement can vary significantly between desktop and mobile interfaces.
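Desktop and smartphone crawls can be separated by inspecting the user agent string. This is a rough sketch: it assumes Googlebot’s smartphone crawler includes the token `Mobile` in its user agent while the desktop crawler does not, and it does not verify that a request truly came from Google. The `googlebot_type` helper is illustrative.

```python
def googlebot_type(user_agent):
    """Classify a user agent as Googlebot 'smartphone', 'desktop', or None for other clients."""
    if "Googlebot" not in user_agent:
        return None
    return "smartphone" if "Mobile" in user_agent else "desktop"

# Example user agent strings in the formats Googlebot is documented to send.
DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
MOBILE_UA = ("Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
             "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/W.X.Y.Z Mobile Safari/537.36 "
             "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)")

print(googlebot_type(DESKTOP_UA), googlebot_type(MOBILE_UA))
```

Counting hits per type for each URL shows whether the two crawlers see the site differently, for instance when mobile requests receive more errors.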

Implementing SEO Optimizations Based on Log Analysis

The meticulous dissection of server log files reveals not only the current state of a website’s interactions with search engines but also illuminates a pathway to its potential zenith in SEO performance.

As industry leaders pivot towards data-driven decision-making, the insights extracted from log file analysis by LinkGraph’s SEO services become the architects of surgical SEO enhancements.

Addressing issues such as resolving errors and broken links, fortifying a website’s structure to facilitate seamless crawling, and amplifying crawl budget efficiency, transforms log data into strategic SEO currency.

Armed with this intelligence, LinkGraph crafts precision-based optimization that systematically elevates a site’s prominence and authority in the digital space.

Resolving Errors and Broken Links

Log file analysis serves as a diagnostic tool in identifying a spectrum of errors and broken links that may be obstructing a website’s SEO health. The intervention to rectify these issues, informed by the meticulous breakdown of log file data by LinkGraph’s SEO services, often leads to significant improvements in search engine indexing efficiency and user experience.

Upon detecting error status codes and the lurking presence of broken links, the specialized team at LinkGraph swiftly implements corrective actions. This targeted approach not only restores functionality but also solidifies a website’s structural integrity, enhancing its ability to garner favorable search engine attention.

| Log File Insight | Detected Issue | SEO Optimization Implemented |
|---|---|---|
| 404 Error Status Codes | Broken or Dead Links | Link Repair or Redirection |
| 500 Error Status Codes | Server Errors | Server Configuration Adjustments |
| 301/302 Status Codes | Improper Redirects | Redirection Audit and Correction |

Enhancing Site Structure for Optimal Crawling

Masterful site structure optimization is a direct offshoot of meticulous log file analysis, an area where LinkGraph’s SEO services thrive. By unraveling the patterns of crawler behavior from log files, the team strategically refines a website’s architecture to facilitate more efficient search engine crawling and indexing, ensuring that no page of SEO significance goes unnoticed.

LinkGraph’s professionals apply these insights, optimizing the hierarchy and navigability of content to reduce crawl depth. Such technical modifications provide a streamlined path for search engine bots, significantly bolstering the probability of capturing and retaining their attention on pivotal areas of a client’s online presence.

Improving Crawl Budget Efficiency With Log Data

Optimizing crawl budget is a strategic component of SEO, centered on maximizing a search engine’s efficient review of web pages within the constraints of a site’s crawl quota. LinkGraph’s SEO services excel in applying log file data to identify and resolve factors that consume unnecessary crawl budget, such as redirect chains or parameterized and duplicate URLs, ensuring that search engine bots spend their allotted time on pages that drive value and ranking potential.

Critical to this process is the dissection of log data to ascertain which areas of a website are being over-crawled or overlooked. LinkGraph’s SEO experts skillfully allocate crawl budget by prioritizing the indexing of new or updated content, hence escalating its visibility and impact:

- Streamlining the crawl path by minimizing unnecessary redirects and optimizing internal link structures.

- Enhancing the content refresh rate for critical pages to signal their importance to search engines.

- Ensuring that high-value pages are easily accessible, thus commanding appropriate attention from crawlers.
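Redirect chains, one of the crawl budget drains named above, can be traced with a short routine. The sketch assumes a mapping from each redirecting URL to its target is available from a site crawl or the server’s redirect rules, since standard access logs record the 301 or 302 status but not the destination. The `redirect_chain` helper and the sample mapping are illustrative.

```python
def redirect_chain(start, redirects, max_hops=10):
    """Follow redirects from a starting URL and return the full chain of URLs visited."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:
            break  # redirect loop detected; stop following
        seen.add(target)
    return chain

# Hypothetical redirect map for illustration only.
redirects = {"/old": "/interim", "/interim": "/new"}
chain = redirect_chain("/old", redirects)
print(" -> ".join(chain), f"({len(chain) - 1} hops)")
```

Any chain with more than one hop is a candidate for pointing the first URL directly at the final destination.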

Advanced Techniques in SEO Log File Analysis

Advances in SEO log file analysis have furnished practitioners with sophisticated methods to refine the user experience and bolster search rankings.

Embracing techniques that delve into server log data offers an unparalleled vantage point to assess and accelerate site speed, pinpoint and rectify crawl anomalies, and weave these granular insights into the fabric of overarching SEO tactics.

Such refined analysis stands at the core of LinkGraph’s SEO services, enabling a data-centric approach to optimizing both website performance and search engine interactions.

The capacity to pivot strategy based on log file intelligence distinguishes a proficient SEO practice, securing a competitive edge in a digital ecosystem driven by precision and adaptability.

Leveraging Log File Data for Site Speed Optimizations

Through meticulous log file analysis, LinkGraph’s SEO services effectively optimize site speed, a critical factor in user experience and search engine ranking. By scrutinizing the server response times logged for each crawler visit (where the log format is configured to record them), inefficiencies in loading times are identified and targeted for improvement, ensuring that search engine bots and users alike can access content swiftly and without delay.

LinkGraph leverages the data obtained from log files to ascertain specific pages that suffer from longer load times, which could negatively impact a visitor’s engagement and the site’s bounce rates. Expert analysis pinpoints the underlying technical issues, from server performance to resource-heavy page elements, facilitating targeted optimizations that result in a faster, more efficient website.
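Ranking pages by average response time is one way to sketch this step. Response time is not part of the default Combined Log Format, so the example assumes the log format has been extended to record it (for instance with `%D` in Apache or `$request_time` in Nginx) and that entries have been parsed into dictionaries with a `response_time` value in seconds. The `slowest_urls` helper and the key names are illustrative.

```python
from collections import defaultdict
from statistics import mean

def slowest_urls(entries, top=5):
    """Return up to `top` (url, average response time) pairs, slowest first."""
    times = defaultdict(list)
    for entry in entries:
        times[entry["url"]].append(entry["response_time"])
    averages = {url: mean(values) for url, values in times.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)[:top]

# Hypothetical parsed entries for illustration only.
timed_entries = [
    {"url": "/search", "response_time": 0.5},
    {"url": "/search", "response_time": 1.5},
    {"url": "/", "response_time": 0.25},
]
for url, seconds in slowest_urls(timed_entries):
    print(f"{seconds:.2f}s {url}")
```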

Identifying Crawl Anomalies and Their Solutions

LinkGraph’s SEO services excel at pinpointing crawl anomalies, such as unexpected spikes in bot activity or pages that are overlooked by search engines. Their experts deploy advanced analytics to detect these irregularities, enabling the implementation of tailored solutions that address specific crawl issues, thus smoothing the path for search engines and enhancing website visibility.

The solutions devised by LinkGraph for identified crawl anomalies often involve tactical adjustments in a site’s structure or meta directives. These precise modifications align closely with search engine guidelines, ensuring that crawling and indexing processes are optimized to favor the client’s website, augmenting its ability to achieve and maintain superior search rankings.
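A basic way to flag unexpected spikes in bot activity is to compare each day’s crawl count against the average for the period. The sketch below marks days that sit more than a chosen number of standard deviations above the mean; the `find_spikes` helper, the threshold of 2.0, and the sample counts are illustrative choices rather than an established standard.

```python
from statistics import mean, pstdev

def find_spikes(daily_counts, threshold=2.0):
    """Return the days whose crawl count exceeds the mean by more than `threshold` standard deviations."""
    values = list(daily_counts.values())
    if not values:
        return []
    average, spread = mean(values), pstdev(values)
    if spread == 0:
        return []  # every day has the same count, so nothing stands out
    return [day for day, count in daily_counts.items()
            if (count - average) / spread > threshold]

# Hypothetical daily crawl counts for illustration only.
daily_counts = {
    "2023-10-01": 100, "2023-10-02": 110, "2023-10-03": 90, "2023-10-04": 105,
    "2023-10-05": 95, "2023-10-06": 100, "2023-10-07": 500,
}
print(find_spikes(daily_counts))
```

With short date ranges a single extreme day inflates the standard deviation, so longer windows or a median-based measure may give steadier results.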

Integrating Log File Insights Into Overall SEO Tactics

In the pursuit of mastery over Search Engine Optimization techniques, the integration of log file insights into the broader SEO paradigm emerges as a vital practice. LinkGraph’s SEO services, cognizant of this fact, seamlessly weave in-depth log analysis findings into their strategic framework, effectively transforming raw data into actionable directives for website optimization.

By contextualizing log file data within the overarching goals of an SEO campaign, LinkGraph ensures that every optimization effort is precisely attuned to the nuances of search engine algorithms and the website’s specific needs. The result is an SEO blueprint that is not only data-driven but also responsive to the dynamic landscape of search behavior and engine updates:

| SEO Goal | Log File Insight | Tactical Implementation |
|---|---|---|
| Enhanced Page Indexation | Frequent Crawler Visits | Optimized Sitemap Integration |
| Reduced Page Load Time | Extended Server Response | Resource Loading Optimization |
| Improved Crawl Budget Allocation | Waste on Redirect Chains | Streamlined URL Structure |

Conclusion

Mastering SEO log file analysis is essential for optimizing website performance and search engine rankings.

By thoroughly examining server logs, SEO professionals can gain crucial insights into how search engine bots interact with a website.

LinkGraph’s SEO services showcase the breadth of optimization opportunities that log file analysis presents, from resolving errors and enhancing site structure to improving crawl budget efficiency and site speed.

Log file data enables the identification of crawl patterns, informs content visibility strategies, and supports technical optimizations crucial for search engines.

Overall, leveraging log file analysis informs strategic SEO decisions, ensuring a website remains aligned with evolving search engine standards and practices, ultimately driving superior online visibility and user engagement.