Website Not on Google? Here’s Why.

Troubleshooting Invisible Websites: Uncover Why Google Hasn’t Listed Your Site

When a company’s website fails to display in Google search results, the impact on its visibility can be detrimental to online success.

This problem requires immediate attention, and solving it means working through a range of potential causes, from technical glitches to flawed SEO strategies.

The key to solving this enigma lies in a methodical approach: diagnosing the underlying causes and applying targeted corrections.

LinkGraph’s comprehensive SEO services offer solutions to unearth and rectify the issues holding a website back from its rightful place in the digital landscape.

Keep reading to learn how to lift your site out of invisibility and onto the first page of results.

Key Takeaways

- Proper sitemap submission and crawler accessibility are vital for website visibility in search results

- Resolving technical SEO issues, like incorrect robots.txt configurations or noindex tags, is crucial for indexing and visibility

- Backlinks must be relevant and authoritative to positively impact website credibility and search rankings

- Aligning content with user search intent and strategically including targeted keywords are key to improving SERP positioning

- Employing advanced SEO tools assists in identifying long-tail keywords to target specific niches and improve organic traffic

Understanding the Basics of Search Engine Visibility

In the intricate world of digital visibility, the absence of a website from Google’s search results can befuddle even the most astute business owners.

LinkGraph’s SEO services recognize that a firm grasp of search engine mechanics is foundational for troubleshooting this issue.

By exploring how search engines scout the vast online landscape for new content, businesses can appreciate the important role sitemaps play in making a website discoverable.

Understanding the indexing process is equally essential: it helps ensure that a website’s pages do not merely float in cyberspace but are actually stored in the search engine’s index, ready to be served for relevant user queries.

Learn How Search Engines Discover Websites

At the heart of a search engine’s discovery process lies the tireless work of crawlers: automated bots, such as Google’s Googlebot, that follow links from pages they already know to find new ones. These crawlers sift through countless websites, identifying new and updated content that may be added to the search engine’s index.

Sitemaps complement this link-following. A sitemap is a file, usually XML, that lists the URLs a site wants crawled, optionally with the date each page last changed, and so flags new or modified pages to the crawler. Submitting a sitemap, for example through Google Search Console, can speed up discovery, especially for pages that few internal links point to.
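
To make the discovery process concrete, the toy model below treats a site as an in-memory link graph: the crawler starts from one URL, follows links breadth-first, and treats any sitemap URLs as extra starting points. It is a simplified illustration, not how Googlebot actually schedules crawls; the `discover` function and the page paths are hypothetical.

```python
from collections import deque


def discover(links, start, sitemap_urls=()):
    """Return every URL reachable by following links from `start`.

    `links` maps each URL to the URLs it links to. URLs listed in a
    sitemap are queued as extra starting points, which is how a sitemap
    can surface pages that no internal link points to.
    """
    seen = set()
    queue = deque([start, *sitemap_urls])
    while queue:
        url = queue.popleft()
        if url in seen:
            continue
        seen.add(url)
        # Queue every page this one links to that has not been visited yet.
        for target in links.get(url, []):
            if target not in seen:
                queue.append(target)
    return seen


# Hypothetical site: nothing links to /old-offer, so only a sitemap reveals it.
site = {
    "/": ["/about", "/blog"],
    "/about": [],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/"],
    "/old-offer": [],
}

print(sorted(discover(site, "/")))
# ['/', '/about', '/blog', '/blog/post-1']
print(sorted(discover(site, "/", sitemap_urls=["/old-offer"])))
# ['/', '/about', '/blog', '/blog/post-1', '/old-offer']
```

The orphaned page only appears once it is listed in the sitemap, which is the practical argument for keeping sitemaps complete.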

| Site Visibility Factor | Role in SEO | Impact on Google Listing |
|---|---|---|
| Sitemap Submission | Heralds website structure to search engines | Enhances speed of content discovery and indexing |
| Crawler Accessibility | Ensures bots can navigate and evaluate pages | Directly influences site’s inclusion in search results |
| Content Freshness | Demonstrates site’s relevance and timeliness | Improves chances of ranking for targeted keywords |

Understand the Impact of Sitemaps for Visibility

Sitemaps serve as an organized directory for search engines, presenting a website’s structure in a single file. This roadmap helps Google discover and index a site’s pages efficiently; Google Search Central, Google’s documentation hub for site owners, explains how to build and submit one.

By making sure search engines know about every page worth indexing, sitemaps contribute significantly to a site’s SEO performance. LinkGraph’s Tailored SEO Services assist businesses in creating and optimizing sitemaps, ensuring every valuable page stands out to search engines and users alike.
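
For illustration, the sketch below builds a minimal XML sitemap with Python’s standard library, following the sitemaps.org protocol. The page list and the `build_sitemap` helper are hypothetical. Google’s documentation says it ignores the optional `<priority>` and `<changefreq>` fields, so the sketch emits only `<loc>` and, when known, `<lastmod>`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    `lastmod` is an ISO 8601 date such as "2024-01-15", or None if unknown.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, < and >
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/", None),
]))
```

Once the output is saved as a UTF-8 file (for example at /sitemap.xml), it can be submitted in Search Console’s Sitemaps report or referenced from robots.txt with a `Sitemap:` line.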

Get Familiar With the Indexing Process

Grasping the indexing process is akin to understanding the gatekeeping mechanisms that dictate content’s admission into the search landscape. It is during this phase that Google’s algorithms evaluate a site’s pages, scrutinizing factors like relevancy and quality to determine their suitability for user searches.

When a page meets Google’s criteria, it earns its place in the search giant’s index, a comprehensive database from which results are drawn for relevant search queries. It is therefore crucial that site owners employ strategic on-page SEO services to enhance their content’s indexability, addressing the factors Google considers during this critical assessment.

| Indexing Factor | Role in SEO | Impact on Search Visibility |
|---|---|---|
| Quality Content | Signals value to search algorithms | Boosts likelihood of being indexed and ranked |
| Relevancy | Connects with user intent | Ensures pages meet the specific needs of searchers |
| On-Page Optimization | Enhances content discoverability | Raises chances of higher visibility in search results |

Confirm Your Site’s Index Status in Google

Unraveling the mystery behind a website’s absence from Google’s listings can be a critical concern for companies vying for online prominence.

Ascertaining a website’s index status sets the stage for strategic remediation and the restoration of visibility in the search landscape.

Running the straightforward “site:” query or reviewing the indexing data in Google Search Console shows site administrators which pages Google has indexed, so they can make informed decisions about what to fix first on the way to higher rankings and stronger user engagement.

Use the “Site:” Query to Check Your Status

Site administrators often turn to the “site:” search operator as a preliminary check on their website’s presence in Google’s index. Entering “site:domain.com” (with their own domain in place of domain.com) into Google’s search bar shows pages from that domain that Google has indexed. The results are a sample rather than a complete list, so this is a quick check, not a full index report.

This search operator provides a quick snapshot of a site’s index status, showing whether Google has picked up a website’s content at all. LinkGraph’s SEO Expertise supports clients in interpreting these results and diagnosing any underlying indexing issues.

Verify Visibility Through Google Search Console

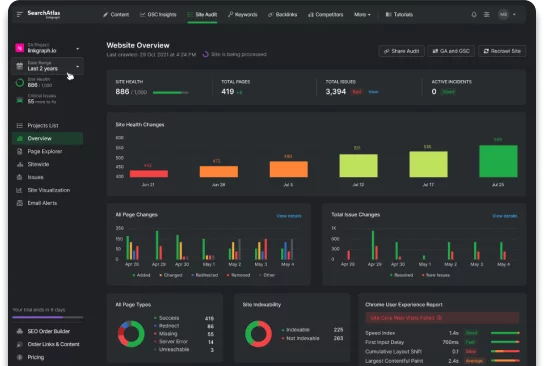

In their quest for online visibility, businesses can turn to Google Search Console, a free tool that offers a transparent view of a site’s index status. Its Page indexing report and URL Inspection tool show which pages are indexed and why others are not, allowing LinkGraph’s team to identify and fix pages that fail to appear in search results.

By harnessing the full potential of Google Search Console, LinkGraph meticulously examines a site’s performance metrics, from indexed pages to search engine crawler behaviors. This allows for an accurate assessment of any SEO issues hobbling visibility, thus laying the groundwork for targeted enhancements that elevate a site’s stature within the Google search ecosystem.

Identifying and Resolving Indexing Blocks

When the visibility of a website is shrouded in the shadows of Google’s vast search landscape, pinpointing the cause becomes paramount to reestablishing a digital presence.

Within the scope of LinkGraph’s SEO services, identifying and resolving indexing blocks stands out as a critical endeavor for businesses whose sites have mistakenly slipped through the crevices of Google’s indexing process.

This entails a comprehensive analysis of common indexing obstacles, including examining the robots.txt file for configurations that inadvertently prohibit search engines, as well as scouring the site’s pages for noindex tags that may have been applied in error.

Both actions are essential to clearing the path for search engines to reflect a website’s updated status in their listings.

Detecting Common Indexing Obstacles on Your Site

Finding why a website remains unseen by search engines like Google begins with a meticulous check for common indexing obstacles. LinkGraph’s SEO services are designed to uncover technical issues, such as incorrect robots.txt file configurations or misplaced noindex meta tags, which may be hindering a website’s visibility.

These barriers can inadvertently block search engine crawlers from accessing and indexing website pages. Addressing such setbacks requires an analytical approach to reviewing and adjusting the site’s metadata and directives:

- Reviewing the robots.txt file to ensure it permits crawler access to important site areas.

- Scanning for noindex meta tags across web pages that should be available to the search index.

- Confirming that correct HTTP status codes are returned, with a particular focus on eliminating 4XX client errors and 5XX server errors that can prevent indexing.
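
The status-code check in the last item can be sketched as a small classifier. It assumes the status codes have already been collected, for example by a crawler or from server logs; the URLs and function names are hypothetical, and the explanations summarize how Google generally handles each class of response.

```python
def status_problem(code):
    """Explain why an HTTP status code may keep a URL out of Google's index.

    Returns None for 2XX responses, which pose no problem as far as the
    status code alone is concerned.
    """
    if 200 <= code < 300:
        return None
    if 300 <= code < 400:
        return "redirect (3XX): Google generally indexes the target URL instead"
    if code == 429:
        return "rate limited (429): treated by Google like a server error"
    if 400 <= code < 500:
        return "client error (4XX): the URL is generally not indexed"
    if 500 <= code < 600:
        return "server error (5XX): crawling slows and persistent errors can drop the URL"
    return "unexpected status code"


def audit_statuses(statuses):
    """Return {url: explanation} for every URL whose status code needs attention.

    `statuses` maps URL -> status code, e.g. collected from a site crawl.
    """
    problems = {}
    for url, code in statuses.items():
        problem = status_problem(code)
        if problem is not None:
            problems[url] = problem
    return problems


report = audit_statuses({"/": 200, "/old-page": 404, "/checkout": 503})
for url, problem in report.items():
    print(url, "->", problem)
```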

Correcting Improper robots.txt Configurations

An erroneous robots.txt setup can act as an inadvertent gatekeeper, barring Google’s crawlers from pages meant to be public. Because robots.txt controls crawling rather than indexing, Google cannot read or evaluate the content of a blocked page. Therefore, one of the pivotal tasks for LinkGraph’s SEO services is to scrutinize and optimize this critical file, ensuring it follows Google’s robots.txt rules.

Correcting a misconfigured robots.txt requires a precise strategy tailored to the unique landscape of a site’s online structure:

- Thorough analysis and rewriting of directives to promote optimal crawler access.

- Validation of changes through Google Search Console to confirm that the correct pages are open to crawling.

- Continuous monitoring to ensure ongoing compliance with best practices for robots.txt configurations.

These corrections, implemented by LinkGraph, smooth the trail for crawlers, amplifying a website’s visibility and propelling it towards the forefront of Google Search results. Utilizing active, strategic interventions, LinkGraph adeptly navigates the intricacies of robots.txt adjustments, thereby restoring clients’ sites to their rightful place in search engine listings.
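
Before and after editing robots.txt, it helps to test the rules against the URLs that must stay crawlable. The sketch below uses Python’s standard `urllib.robotparser` module; the URLs and rule sets are hypothetical. One caveat: `urllib.robotparser` applies the first matching rule and does not understand Google’s `*` and `$` path wildcards, whereas Google picks the most specific matching rule, so results can differ for files that mix overlapping `Allow` and `Disallow` lines. Search Console’s robots.txt report shows how Google itself fetched and parsed the file.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs in `urls` that the robots.txt rules forbid `user_agent` to crawl."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# A classic mistake: a staging rule that blocks the whole site left in production.
leftover_rules = "User-agent: *\nDisallow: /\n"
# The intended rules only block a private staging folder.
intended_rules = "User-agent: *\nDisallow: /staging/\n"

pages = ["https://www.example.com/", "https://www.example.com/pricing",
         "https://www.example.com/staging/new-home"]
print(blocked_urls(leftover_rules, pages))   # all three URLs are blocked
print(blocked_urls(intended_rules, pages))   # only the /staging/ URL is blocked
```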

Removing Unintentional Noindex Tags

Within the arsenal of LinkGraph’s SEO services, meticulous attention is paid to unintentional noindex directives that prevent pages from surfacing in search results. These can appear as a robots meta tag in a page’s HTML or as an X-Robots-Tag HTTP header. Corrective action involves comprehensive site scans to locate and remove any such directives, restoring the pages’ eligibility for indexing by search engines.

Amending these noindex directives not only facilitates the rightful indexation of website pages but also aligns with the myriad of nuances that define a robust SEO content strategy. LinkGraph’s expertise ensures that every webpage is precisely crafted to invite search engine attention, making each client’s digital footprint more prominent.
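
A site scan for noindex directives can be sketched with Python’s built-in `html.parser`. The helper below, whose names are illustrative, looks for `noindex` (or `none`, which Google treats as noindex plus nofollow) in robots and googlebot meta tags and in an `X-Robots-Tag` response header. It is a simplification: it ignores crawler-specific header prefixes such as `googlebot: noindex` and assumes the HTML and headers have already been fetched.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def has_noindex(html, headers=None):
    """Return True if the page's HTML or its X-Robots-Tag header says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.update(d.strip().lower() for d in value.split(","))
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


print(has_noindex('<meta name="robots" content="noindex, follow">'))  # True
print(has_noindex("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))      # True
print(has_noindex('<meta name="robots" content="index, follow">'))    # False
```

Keep in mind that Googlebot can only read a noindex rule on pages it is allowed to crawl; if robots.txt blocks a page, removing the tag alone will not change how Google sees it.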

Evaluating the Quality of Your Backlinks

Amid the quest for online visibility, the calibre of a website’s backlink profile is a decisive factor influencing its standing in Google’s search results.

LinkGraph’s SEO services encompass a deep evaluation of backlinks to distinguish those that bestow authority and relevance from those that may be detrimental to a site’s reputation.

A bespoke examination stresses the importance of nurturing a backlink profile that resonates with Google’s quality guidelines, while the meticulous action of disavowing toxic links protects the integrity of a website’s digital presence.

Assess Backlink Relevance and Authority

In the vast ecosystem of search engine rankings, the relevance and authority of backlinks cannot be overstated. LinkGraph’s meticulous white-label link building services are dedicated to evaluating the relevance of each backlink to ensure it aligns with the client’s content strategy and operates within Google’s quality parameters.

Authority is equally critical, as it attests to a website’s trustworthiness within its niche. LinkGraph employs comprehensive tools to measure the domain authority of linking pages, ensuring clients gain backlinks that reinforce their website’s credibility:

| Backlink Factor | SEO Significance | LinkGraph’s Approach |
|---|---|---|
| Relevance | Aligns backlinks with content and user intent | Analyzes content match and targets industry-related sites |
| Authority | Enhances website’s trust factor in SERPs | Assesses domain strength to optimize link quality |

Identify and Disavow Toxic Backlinks

The digital terrain is rife with backlinks that can be more of a liability than an asset to a website’s search engine standing. LinkGraph’s SEO services provide an essential toxic link identification process, sifting through a site’s backlink profile with precision to flag detrimental links that could sour Google’s perception of a site’s quality.

Neutralizing these harmful links is crucial for maintaining SEO health and safeguarding a site’s reputation. Google’s disavow links tool, available through Search Console, is instrumental in this maintenance: it tells Google which links to ignore when assessing a site’s backlink profile. The process involves:

- Analyzing the backlink profile for unnatural or spammy links.

- Compiling a list of detrimental links for disavowal submission.

- Employing LinkGraph’s expertise to navigate the disavowal process effectively.
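
Google’s disavow links tool accepts a plain-text file (UTF-8 or 7-bit ASCII) with one entry per line: a full URL to disavow a single page, `domain:` followed by a host name to disavow a whole domain, and lines starting with `#` as comments. The sketch below turns the output of a backlink audit into that format; the input lists and the `build_disavow_file` name are hypothetical, and deciding which links are truly toxic remains a judgment call. Google advises using the tool only when a site has many spammy or artificial links that could lead to a manual action.

```python
from urllib.parse import urlparse


def build_disavow_file(toxic_urls, toxic_domains=()):
    """Format audit results as a disavow file for Google's disavow links tool.

    Whole domains become "domain:" lines; individual URLs are listed as-is,
    skipping any whose host exactly matches a domain already disavowed.
    """
    domains = sorted({d.lower() for d in toxic_domains})
    lines = ["# Links identified as spammy during the backlink audit"]
    lines += [f"domain:{d}" for d in domains]
    for url in sorted(set(toxic_urls)):
        host = (urlparse(url).hostname or "").lower()
        if host in domains:
            continue  # already covered by a domain: line
        lines.append(url)
    return "\n".join(lines) + "\n"


print(build_disavow_file(
    ["https://spam.example/page1", "https://other.example/bad-link"],
    toxic_domains=["spam.example"],
))
```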

Increasing Your Website’s Authority and Trust

For a business’s online presence to thrive in the competitive digital marketplace, advancing the website’s authority and trust is paramount.

The core elements that underpin an authoritative site include not only the depth and value of the content it offers but also the connections and recognition it garners within the industry.

These aspects serve as critical barometers for Google’s algorithms when evaluating a site’s credibility and worthiness of a higher search ranking.

To ensure a website rises above obscurity, LinkGraph’s SEO services concentrate on creating content with unwavering quality and forging significant industry relationships that signal trust and authority to search engines.

Produce High-Value, Authoritative Content

LinkGraph’s commitment to fostering robust SEO hinges on crafting content that conveys authority and delivers value. Its blog writing services, coupled with advanced tools such as an SEO AI Writer, underscore this approach, strengthening a website’s reputation among industry peers and search engines alike.

In the quest for PageRank ascension, LinkGraph meticulously orchestrates an SEO content strategy that harmonizes with a comprehensive guide to Google’s ranking factors. The commitment to producing information-rich, user-centric content not only engages readers but also signals to Google the substantive merit of each piece:

- Strategically crafting detailed, informative content that goes beyond the surface level to provide unique insights.

- Using SEO and readability tools to fine-tune content, ensuring it meets the standards of both users and search engines.

- Leveraging guest posting services to distribute high-quality content across reputable platforms, further solidifying the website’s authority.

Engage With Prominent Industry Players and Platforms

LinkGraph’s white label SEO services strive to enhance a website’s trust by fostering affiliations and visibility on esteemed industry platforms. By establishing connections and sharing expertise through authorship and partnerships, a company solidifies its niche authority, a factor that resonates with Google’s algorithms for higher rankings.

Recognizing that relationship-building plays a vital role in SEO, LinkGraph actively pursues collaborations that provide exposure to wider audiences. This strategy not only injects credibility but also invokes the broad benefits of backlinks from respected industry sources, reinforcing a site’s trust factor in the competitive digital arena.

Ensuring Content Matches Search Intent

Businesses seeking visibility in Google’s vast digital expanse must heed the critical junction where website content precisely intersects with user search intent.

At this crossroads, the harmony between what users seek and what a site provides is paramount to surfacing in search results.

LinkGraph’s adept SEO services focus on deciphering the intricacies of user queries, pinpointing targeted keywords, and meticulously aligning content with the core needs of an audience.

In an era marked by sophisticated search algorithms, understanding and catering to search intent not only demystifies the conundrum of online invisibility but sets the stage for amplified engagement and conversion.

Analyze User Queries for Targeted Keywords

In the pursuit of aligning a website’s content with user search intent, LinkGraph’s SEO services rigorously analyze user queries to identify targeted keywords. These queries serve as indicators, revealing the precise terminologies that users deploy when looking for information, products, or services, thereby guiding the content creation process to satisfy those needs.

Understanding the nuance behind each search term allows LinkGraph’s experts to streamline a website’s content strategy, ensuring that every page resonates with the user’s quest for information. By matching content with the searcher’s intent, LinkGraph significantly enhances the likelihood of a website’s pages earning prominence in Google’s meticulously curated search results.
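
A first pass at sorting queries by intent can be as simple as checking for modifier words. The rule-based sketch below is a rough heuristic, not how Google or LinkGraph classify intent; the modifier lists are hypothetical and should be tuned for the niche, and a serious analysis would also look at what kinds of pages currently rank for each query.

```python
# Hypothetical modifier lists; tune them for the niche being analyzed.
INTENT_MODIFIERS = {
    "transactional": {"buy", "price", "pricing", "discount", "order", "hire"},
    "commercial": {"best", "top", "review", "reviews", "vs", "compare"},
    "informational": {"how", "what", "why", "guide", "tutorial", "tips"},
}


def classify_intent(query):
    """Guess a query's intent from modifier words, checking intents in dict order."""
    words = set(query.lower().split())
    for intent, modifiers in INTENT_MODIFIERS.items():
        if words & modifiers:
            return intent
    return "unclassified"


def group_by_intent(queries):
    """Bucket queries by guessed intent so content can be planned per bucket."""
    groups = {}
    for query in queries:
        groups.setdefault(classify_intent(query), []).append(query)
    return groups


print(group_by_intent([
    "how to fix crawl errors",
    "best seo tools",
    "buy running shoes",
    "linkgraph",
]))
```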

Align Website Content With Audience Needs

LinkGraph’s meticulous local SEO services are instrumental in tailoring content that addresses the specific needs of a regional customer base. By homing in on local nuances and preferences, LinkGraph ensures that a website speaks directly to the community it serves, thereby increasing the relevance of its pages to geographically targeted searches.

With an unwavering focus on user experience, LinkGraph’s on-page SEO services meticulously adjust content to fulfill audience expectations. This dedication to matching content with audience demand not only nurtures customer satisfaction but also signals to search engines the site’s alignment with user intent, optimizing the potential for improved search rankings.

Addressing Duplicate Content Problems

An often-overlooked yet significant contributor to a website’s absence from Google’s search results is duplicate content.

Mismanagement in this domain not only dilutes the perceived value of a website but can also result in search engines struggling to distinguish between original and replicated pages.

It is incumbent upon site managers to navigate the nuanced landscape of content duplication with precision, employing strategies such as the correct use of canonical tags and the intelligent consolidation of similar content.

The implementation of these measures bolsters the originality and quality of the site’s offerings, thereby clarifying its standing to search engines and enhancing visibility.

Utilize Canonical Tags Correctly

In search engine optimization, correctly implementing canonical tags is essential for signaling to Google which version of a piece of content is the preferred one. This signal helps Google identify the URL that should be treated as the authoritative source, averting the issues that arise from duplicate content.

Canonical tags act as guides for search engines, asking them to attribute SEO value to a specified URL and consolidate ranking signals there. Google treats a canonical tag as a strong hint rather than a binding directive, so it works best when redirects, internal links and sitemaps point to the same preferred URL. Deployed consistently, canonical tags ensure that each piece of content adds distinct value to the site’s search engine footprint:

| Problem Area | Canonical Tags Role | Desired Outcome |
|---|---|---|
| Duplicate Content Across Domains | Signal original content source across different websites | Accumulation of SEO value at original source URL |
| Similar Content Within Site | Clarify the preferred URL for search engines | Prevent dilution of ranking signals within the site |
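
To make the within-site case concrete, the sketch below normalizes common URL variants (http vs. https, host-name case, trailing slashes, tracking parameters, query-parameter order) to one preferred address and emits the matching `<link rel="canonical">` element. Which variants count as duplicates is site-specific: the https preference, trailing-slash removal and the hypothetical `TRACKING_PARAMS` list are assumptions to adapt, not rules Google imposes.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed: these parameters never change what the page shows.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def canonical_url(url):
    """Map URL variants of the same page to one preferred address.

    Assumed site conventions: https, lower-case host, no trailing slash
    (except the root), tracking parameters dropped, remaining parameters sorted.
    """
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query) if k not in TRACKING_PARAMS]
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(("https", parts.netloc.lower(), path, urlencode(sorted(query)), ""))


def canonical_link_tag(url):
    """Build the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url))}">'


for variant in ["http://WWW.Example.com/shoes/?utm_source=newsletter",
                "https://www.example.com/shoes?size=9&color=red"]:
    print(canonical_link_tag(variant))
```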

Consolidate Similar Content to Enhance Quality

To fortify the quality of content and eliminate redundancy, LinkGraph advocates for the consolidation of similar content. This consolidation enables websites to present a singular, authoritative source on a topic, thereby increasing the perceived expertise and trustworthiness in the eyes of search engines and users alike.

When executed effectively, this approach streamlines the user experience, steering visitors toward the most comprehensive and valuable content, and inviting favorable judgments from Google’s search algorithms. It results in a more organized site architecture that can yield improved search rankings:

- Identify overlapping or duplicate content themes within the website.

- Merge similar content into more substantial, singular articles or pages.

- Redirect any obsolete URLs to the updated consolidated content with permanent (301) redirects to maintain link equity.
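
When similar pages are merged in stages, redirects can pile up into chains (A → B → C) that add extra hops for crawlers and visitors. The sketch below collapses such chains in a hypothetical redirect map so every old URL points straight at its final destination, and it stops with an error on loops; turning the result into server configuration depends on the web server the site uses.

```python
def resolve_redirects(redirects):
    """Point every old URL directly at the end of its redirect chain.

    `redirects` maps old URL -> new URL. Raises ValueError on a redirect loop.
    """
    resolved = {}
    for start in redirects:
        seen = {start}
        target = redirects[start]
        # Keep following while the current target is itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        resolved[start] = target
    return resolved


# Hypothetical history: two older guides were merged in stages into one page.
redirect_map = {
    "/seo-tips-2019": "/seo-tips",
    "/seo-tips": "/seo-guide",
    "/seo-checklist": "/seo-guide",
}
print(resolve_redirects(redirect_map))
# {'/seo-tips-2019': '/seo-guide', '/seo-tips': '/seo-guide', '/seo-checklist': '/seo-guide'}
```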

By recommending the merger of analogous content pieces, LinkGraph’s strategy significantly diminishes the issue of content dilution and positions websites for higher search visibility. It honours the user’s desire for clarity and grants Google a deftly curated source to match the search query, both of which are essential for a potent SEO presence.

Recovering From Google Penalties and Actions

Encountering a Google penalty poses a significant detour on the road to prominent online visibility, causing websites to vanish from the coveted search results.

Navigating such a situation demands a definitive course of action, starting with Google Search Console to pinpoint the nature of the penalty.

From there, LinkGraph’s seasoned experts build a strategy tailored to address the violation and prepare a compelling reconsideration request.

This systematic approach by LinkGraph serves as a lifeline, restoring websites to Google’s good graces and reinstating their digital presence.

Identify Penalties Using the Search Console

Discerning the root cause of a website’s absence from Google’s search listings starts with the Manual actions report in Google Search Console, which lists any penalties a human reviewer has applied to the site. Within the comprehensive service portfolio offered by LinkGraph, experts use this report to detect manual penalties and communicate transparently with clients about any issues compromising their site’s visibility. Ranking drops caused by algorithmic updates do not appear in this report and need separate diagnosis.

LinkGraph assists businesses in interpreting notifications from Google Search Console, where penalty alerts are typically issued, to understand the precise nature of the infraction. Addressing these critical setbacks with informed precision is essential for formulating a definitive strategy that reinstates a website’s presence within Google’s index.

Formulate a Recovery Strategy and Submit a Reconsideration Request

Upon determining the nature of a Google penalty via the Search Console, a recovery strategy emerges as a critical next step. LinkGraph’s seasoned professionals undertake the vital process of proactive remediation, meticulously addressing each of the identified issues, and optimizing the website to adhere to Google’s guidelines.

Following the comprehensive rectification efforts, LinkGraph crafts and submits a compelling reconsideration request on behalf of the client. This request, a detailed account of the corrective actions taken, demonstrates to Google a commitment to upholding its standards and asks for the site’s visibility in search results to be restored. Reconsideration requests apply only to manual actions; ranking losses caused by algorithmic updates are not reversed this way.

Reinforcing Presence With Strategic Keyword Inclusion

In addressing the conundrum of a website’s invisibility in Google’s search results, marketers and site managers often turn their attention toward the potent role of keywords.

Optimal keyword inclusion forms the bedrock of a strategic SEO content strategy, embedding the targeted phrases that echo user queries into the mosaic of on-page elements.

A forensic approach to this practice ensures that each keyword is strategically placed without succumbing to over-optimization, which can inadvertently push a website further into obscurity rather than elevate its presence in search results.

Incorporate Targeted Keywords Into Key On-Page Elements

In the tapestry of search engine optimization, deftly incorporating targeted keywords into on-page elements is tantamount to casting a beacon for Google’s algorithms. LinkGraph’s SEO services ensure that key areas such as the title tag, meta descriptions, and header tags are imbued with strategic keywords, enhancing relevance and steering Google’s attention toward a website’s pages.

Harmony between content and user search patterns is achieved when keywords resonate within the body text without overwhelming the narrative flow. LinkGraph prioritizes this balance to enrich user engagement and search engine recognition, meticulously embedding keywords in a manner that feels natural and promotes content clarity within the scope of search queries.
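
A quick way to audit this placement is to extract the title, meta description and H1 from a page and check each for the target keyword. The sketch below uses Python’s built-in `html.parser`; the class and function names are hypothetical, and a plain substring match is a deliberate simplification that ignores plurals and close variants.

```python
from html.parser import HTMLParser


class OnPageExtractor(HTMLParser):
    """Pull the title, meta description and H1 text out of an HTML page."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": "", "meta description": "", "h1": ""}
        self._current = None  # element whose text is being collected

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "h1"):
            self._current = tag
        elif tag == "meta":
            attrs = dict(attrs)
            if (attrs.get("name") or "").lower() == "description":
                self.fields["meta description"] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        # Text inside nested tags (e.g. <em> within <h1>) is collected too.
        if self._current:
            self.fields[self._current] += data


def keyword_placement(html, keyword):
    """Report which on-page elements contain the keyword (case-insensitive)."""
    parser = OnPageExtractor()
    parser.feed(html)
    parser.close()
    kw = keyword.lower()
    return {field: kw in text.lower() for field, text in parser.fields.items()}


page = ('<html><head><title>Running Shoes for Beginners</title>'
        '<meta name="description" content="Pick your first pair."></head>'
        '<body><h1>Best <em>running shoes</em> for beginners</h1></body></html>')
print(keyword_placement(page, "running shoes"))
# {'title': True, 'meta description': False, 'h1': True}
```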

Balance Keyword Density to Avoid Over-Optimization

LinkGraph’s on-page SEO services expertly navigate the nuanced domain of keyword density, recognizing that the overuse of targeted phrases can be as detrimental as their omission. The team strikes a tactical balance, ensuring keywords enhance rather than undermine the value of content, maintaining a focus that places user experience at the forefront while keeping Google’s ranking algorithms engaged.

In search engine optimization, nuance is king, and LinkGraph brings that understanding to each client project. Its approach avoids unnatural keyword stuffing in favor of a measured inclusion that supports content integrity and aligns with the sophisticated criteria search engines use to evaluate and rank web pages.
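
Keyword density is usually computed as a simple ratio. Tools differ, so this sketch assumes one common definition: density = (occurrences × words in the phrase) ÷ total words × 100, with whole-word, case-insensitive, non-overlapping matching on ASCII text. Google does not publish a target density, so any threshold used to flag a page is an editorial choice; the function name is hypothetical.

```python
import re


def keyword_density(text, phrase):
    """Percentage of words in `text` that belong to occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    count, i = 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == target:
            count += 1
            i += n  # skip past the match so occurrences don't overlap
        else:
            i += 1
    return 100.0 * count * n / len(words)


print(keyword_density("SEO tips and SEO tools", "seo"))  # 40.0
```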

Explore Less Competitive Keywords for Quicker Visibility

In the intricate dance of search engine rankings, the judicious selection of keywords can become a game-changer for websites striving for visibility.

Businesses that smartly research long-tail keywords pertinent to their niche can bypass the bottleneck of competition, tapping into niches ripe with opportunity.

LinkGraph’s cutting-edge tools aid in the excavation of these lesser-known, yet highly relevant, search terms, uncovering untapped opportunities that could swiftly elevate a site’s visibility on Google’s SERPs.

Research Long-Tail Keywords for Your Niche

In the bustling marketplace of Google’s search engine results, LinkGraph assists businesses by pinpointing long-tail keywords that are intricately aligned with their niche. By focusing on these queries, often less crowded and highly specific, companies can bypass the dense competition and establish a swift foothold on the search engine results pages.

LinkGraph utilizes cutting-edge SearchAtlas SEO software to delve into keyword research, uncovering the granular search terms that resonate with a brand’s unique offerings and customer intent. This strategic approach lays a direct path to driving relevant traffic and cultivating a robust online presence:

- Deploy SearchAtlas SEO software for in-depth keyword analysis.

- Isolate long-tail keywords that promise lower competition yet high relevance.

- Execute content strategies that interweave these terms, securing niche visibility.
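
The shortlisting step can be sketched as a filter over a keyword export. The sketch assumes each idea comes with an estimated monthly search volume and a 0-100 difficulty score, as keyword research tools typically report; the field names, the scale and the thresholds (at least three words, difficulty of 30 or less) are assumptions, and the example figures are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class KeywordIdea:
    phrase: str
    monthly_volume: int  # estimated searches per month, from a keyword tool
    difficulty: int      # tool-specific competition score, assumed 0-100


def long_tail_shortlist(ideas, min_words=3, max_difficulty=30):
    """Keep specific, low-competition phrases, highest search volume first."""
    picks = [k for k in ideas
             if len(k.phrase.split()) >= min_words and k.difficulty <= max_difficulty]
    return sorted(picks, key=lambda k: k.monthly_volume, reverse=True)


# Illustrative numbers only, not real search data.
ideas = [
    KeywordIdea("running shoes", 90000, 85),
    KeywordIdea("running shoes for flat feet women", 1300, 22),
    KeywordIdea("trail running shoes for wide feet", 2400, 28),
    KeywordIdea("best running shoes for beginners", 8100, 64),
]
for idea in long_tail_shortlist(ideas):
    print(idea.phrase, idea.monthly_volume)
```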

Utilize Keyword Tools to Find Untapped Opportunities

LinkGraph harnesses the power of advanced keyword tools to unearth untapped opportunities within Google’s complex search landscape. By employing SEO tools that span beyond simple keyword searches, the team is equipped to reveal niche-specific phrases with promising search volumes yet lower competitive density.

The strategic application of these tools presents an actionable plan for clients aspiring to elevate their online visibility:

- Decipher the search volume and competition level for potential keywords.

- Integrate discovered keywords into the site’s SEO and content strategy.

- Monitor the impact of these keywords on improving search rankings and visibility.

Capitalizing on these opportunities allows for the refinement of SEO practices, pinpointing prospects for quick wins in visibility and delivering measurable growth in organic traffic for LinkGraph’s clients.

Conclusion

In summary, troubleshooting an invisible website in Google’s search results involves a multifaceted approach that harnesses the intricacies of SEO.

Essential to this process is understanding how search engines operate, from discovery to indexing.

Sitemaps play a crucial role in notifying search engines of new content, while addressing common indexing blocks like improper robots.txt configurations and noindex tags is vital for visibility.

Additionally, maintaining a high-quality backlink profile and ensuring content aligns with search intent enhance a website’s authority and relevance.

Tackling duplicate content, recovering from penalties, strategically including keywords, and exploring less competitive long-tail keywords are also critical steps.

Through comprehensive analysis and targeted actions, services such as LinkGraph equip businesses to not only identify the reasons behind their website’s absence from Google’s listings but also implement remedies that ultimately propel their site to greater online prominence.